Start here: how our SEO split tests work

If you aren't familiar with how we run the controlled SEO experiments that form the basis of all our case studies, you may find it useful to read the explanation at the end of this article before digesting the details of the case study below. If you'd like to get a new case study by email every two weeks, just enter your email address here.

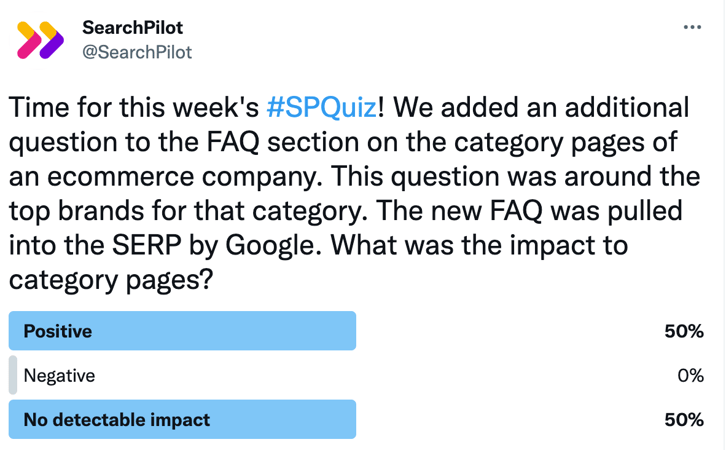

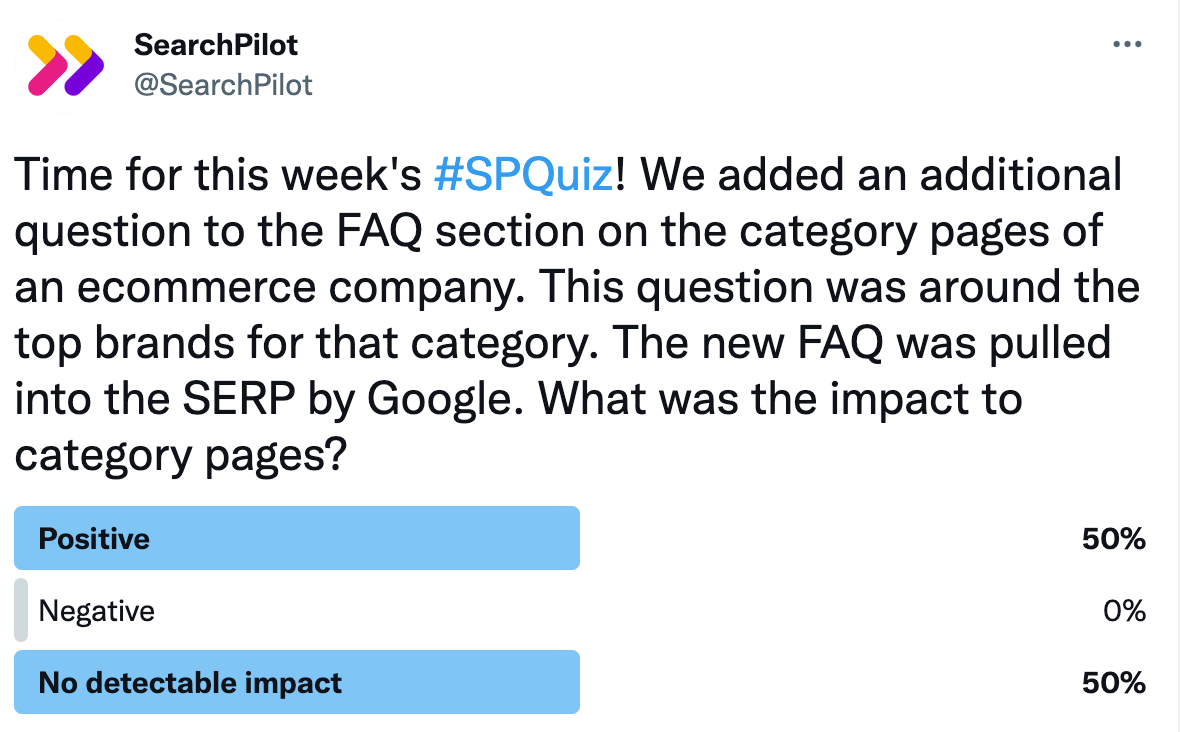

For this week’s #SPQuiz, we asked our Twitter followers what they thought would happen to organic traffic when we added a question to the FAQ section of category pages that links out to the top brands.

Here is what our followers thought:

The vote was evenly split between a positive impact and no detectable impact; nobody thought this change would be negative. Those who voted for a positive impact were right!

Read the full case study below.

The Case Study

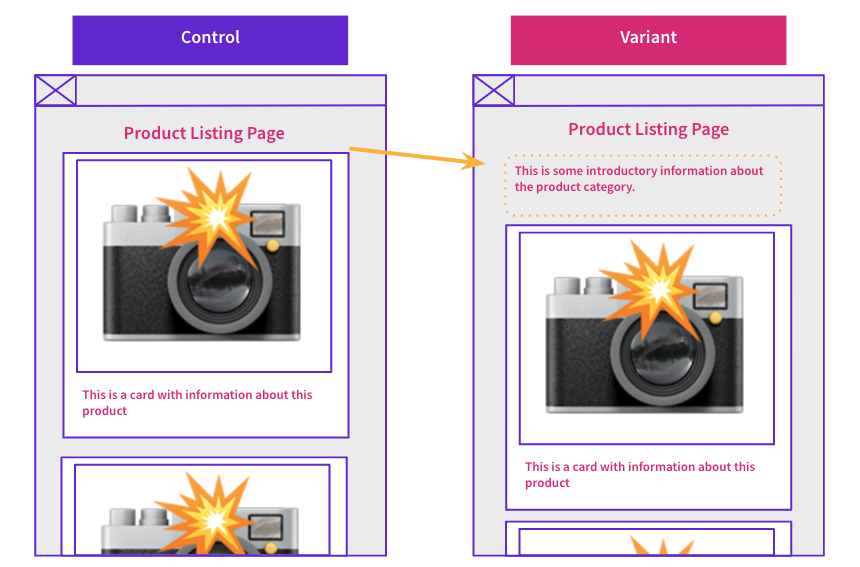

An ecommerce customer knew that their category pages ranked for informational keywords related to the top consumer brands in each product category. They wanted to see if they could improve rankings for those keywords by adding one question to their FAQ section that targeted them. They tested this hypothesis by adding a question whose answer listed the top 5 brands in the category.

In addition to changing the FAQ section on the pages, they added the new question to their existing FAQ markup. Although they already had FAQ markup, they were not being awarded the FAQ snippet in the search results, and they hoped this new question might help them win it.

The new question was added to the top of the FAQs to increase its chances of showing in search results. The customer believed this could boost organic click-through rates as well as improve rankings for brand-related search queries.
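To make the markup change concrete, here is a minimal sketch of prepending a question to schema.org `FAQPage` structured data so that it is listed first. The helper name `prepend_faq`, the example questions, and the placeholder brand names are all hypothetical; this is not the customer's actual markup or implementation.

```python
import json


def prepend_faq(faq_page: dict, question: str, answer: str) -> dict:
    """Return a copy of a schema.org FAQPage dict with a new Question listed first.

    Hypothetical helper for illustration only.
    """
    new_entry = {
        "@type": "Question",
        "name": question,
        "acceptedAnswer": {"@type": "Answer", "text": answer},
    }
    updated = dict(faq_page)  # shallow copy so the original dict is left untouched
    updated["mainEntity"] = [new_entry] + list(faq_page.get("mainEntity", []))
    return updated


# Assumed existing markup with a single question (made-up content).
existing = {
    "@context": "https://schema.org",
    "@type": "FAQPage",
    "mainEntity": [
        {
            "@type": "Question",
            "name": "How long does delivery take?",
            "acceptedAnswer": {
                "@type": "Answer",
                "text": "Usually 3 to 5 working days.",
            },
        }
    ],
}

updated = prepend_faq(
    existing,
    "What are the top brands in this category?",  # placeholder question
    "Our top 5 brands are Brand A, Brand B, Brand C, Brand D and Brand E.",
)

# Emit the JSON-LD block that would be embedded in the page's HTML.
json_ld = json.dumps(updated, indent=2)
print(f'<script type="application/ld+json">\n{json_ld}\n</script>')
```

The same question and answer text should also be visible in the on-page FAQ section, since the markup is meant to describe content the user can see.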

Here is an example of the FAQ content that was added:

Control

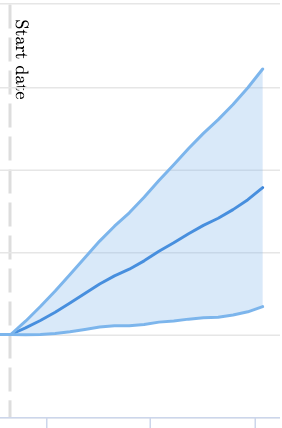

The chart below shows the impact of this test on organic sessions for category pages:

This change had a statistically significant positive impact on organic traffic: a 9% uplift! The uplift could have come either from rich snippets in the search results improving click-through rates or from a rankings improvement driven by the additional brand-related content. For future tests, isolating each change might provide more clarity on what increased organic traffic.

Google has become stricter with FAQ snippets in search results: it shows them less often and restricts the number of questions displayed to two. One of the more interesting insights from this experiment was that changing the FAQs led to the customer being awarded the snippet in search results.

To receive more insights from our testing, sign up for our case study mailing list, and please feel free to get in touch if you want to learn more about this test or our split-testing platform.

How our SEO split tests work

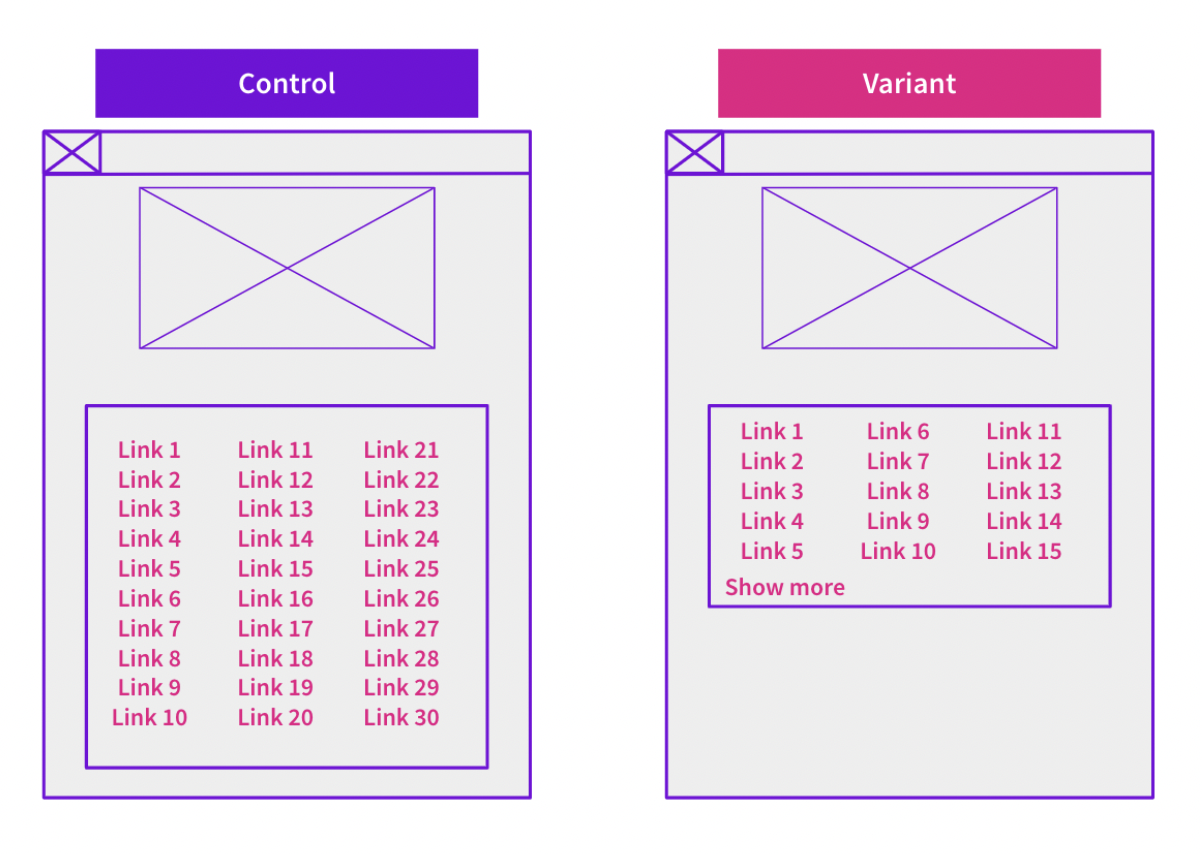

The most important thing to know is that our case studies are based on controlled experiments with control and variant pages:

- By detecting changes in performance of the variant pages compared to the control, we know that the measured effect was not caused by seasonality, sitewide changes, Google algorithm updates, competitor changes, or any other external impact.

- The statistical analysis compares the actual outcome to a forecast and comes with a confidence interval, so we know how certain we are that the effect is real.

- We measure the impact on organic traffic in order to capture changes to rankings and/or changes to click-through rate (more here).
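The idea in the points above, comparing actual variant traffic to a forecast and attaching a confidence interval, can be sketched roughly as follows. This is a deliberately simplified stand-in, not the actual SearchPilot model: it assumes the forecast is just control traffic scaled by the pre-test variant-to-control ratio, it uses a basic bootstrap over days for the interval, and all session counts are made up for illustration.

```python
import random


def estimate_uplift(pre_control, pre_variant, post_control, post_variant,
                    n_boot=2000, seed=0):
    """Estimate relative uplift of variant pages versus a control-based forecast.

    Simplified illustration: forecast = control sessions * pre-test ratio.
    Returns (point_estimate, ci_low, ci_high) as fractions (0.05 means 5%).
    """
    # How variant traffic related to control traffic before the change.
    ratio = sum(pre_variant) / sum(pre_control)
    # Forecast of what variant pages would have received without the change.
    forecast = [ratio * c for c in post_control]
    point = sum(post_variant) / sum(forecast) - 1

    # Bootstrap: resample test-period days with replacement and recompute.
    rng = random.Random(seed)
    n_days = len(post_variant)
    boots = []
    for _ in range(n_boot):
        days = [rng.randrange(n_days) for _ in range(n_days)]
        actual = sum(post_variant[i] for i in days)
        expected = sum(forecast[i] for i in days)
        boots.append(actual / expected - 1)
    boots.sort()
    ci_low = boots[int(0.025 * n_boot)]
    ci_high = boots[int(0.975 * n_boot) - 1]
    return point, ci_low, ci_high


# Made-up daily organic sessions, for illustration only.
pre_control = [1000, 1020, 980, 1010, 990, 1005, 995]
pre_variant = [500, 510, 490, 505, 495, 502, 498]
post_control = [1000, 1010, 990, 1020, 980, 1000, 1005]
post_variant = [545, 548, 542, 557, 530, 548, 545]

point, ci_low, ci_high = estimate_uplift(
    pre_control, pre_variant, post_control, post_variant
)
print(f"Estimated uplift: {point:.1%} (95% interval {ci_low:.1%} to {ci_high:.1%})")
```

Because the forecast is built from the control pages over the same dates, anything that affects both groups equally (seasonality, algorithm updates) cancels out of the comparison. If the whole interval sits above zero, the result would be read as a positive impact; if it straddles zero, as no detectable impact.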

Read more about how SEO A/B testing works or get a demo of the SearchPilot platform.