Start here: how our SEO split tests work

If you aren't familiar with the fundamentals of how we run the controlled SEO experiments that form the basis of all our case studies, you might find it useful to read the explanation at the end of this article before digesting the details of the case study below. If you'd like to get a new case study by email every two weeks, sign up for our case study mailing list.

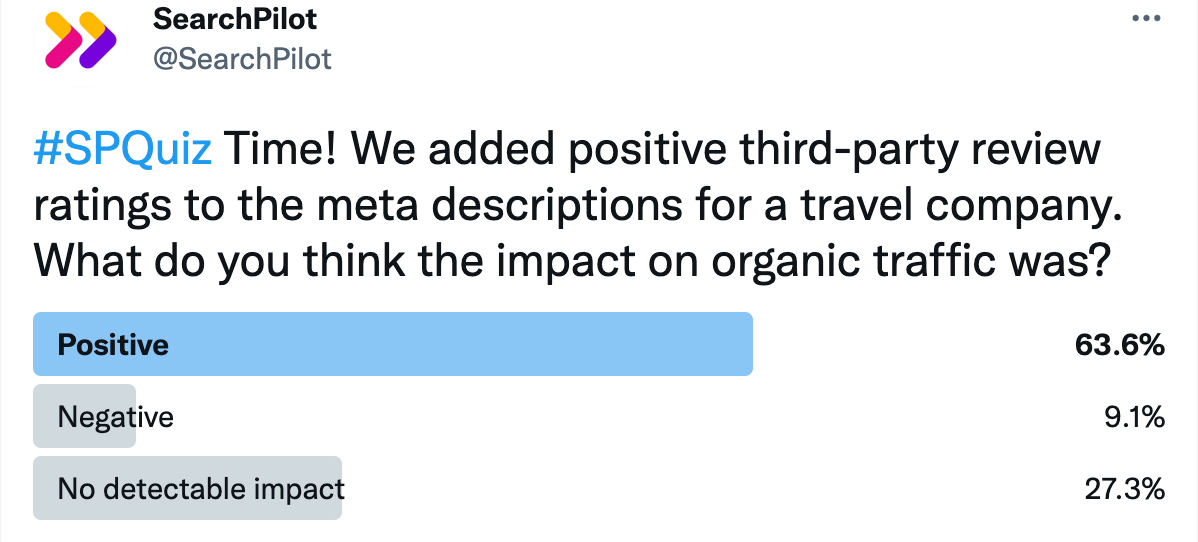

In this week’s #SPQUIZ, we asked our followers whether they thought that adding third-party review ratings to meta descriptions would have a positive impact on organic traffic.

Here is what they thought:

Over half of our followers thought that this change would have a positive impact on organic traffic, and they were partially correct: the test was positive, just not at the 95% confidence level. Read on to see how we interpreted this.

The Case Study

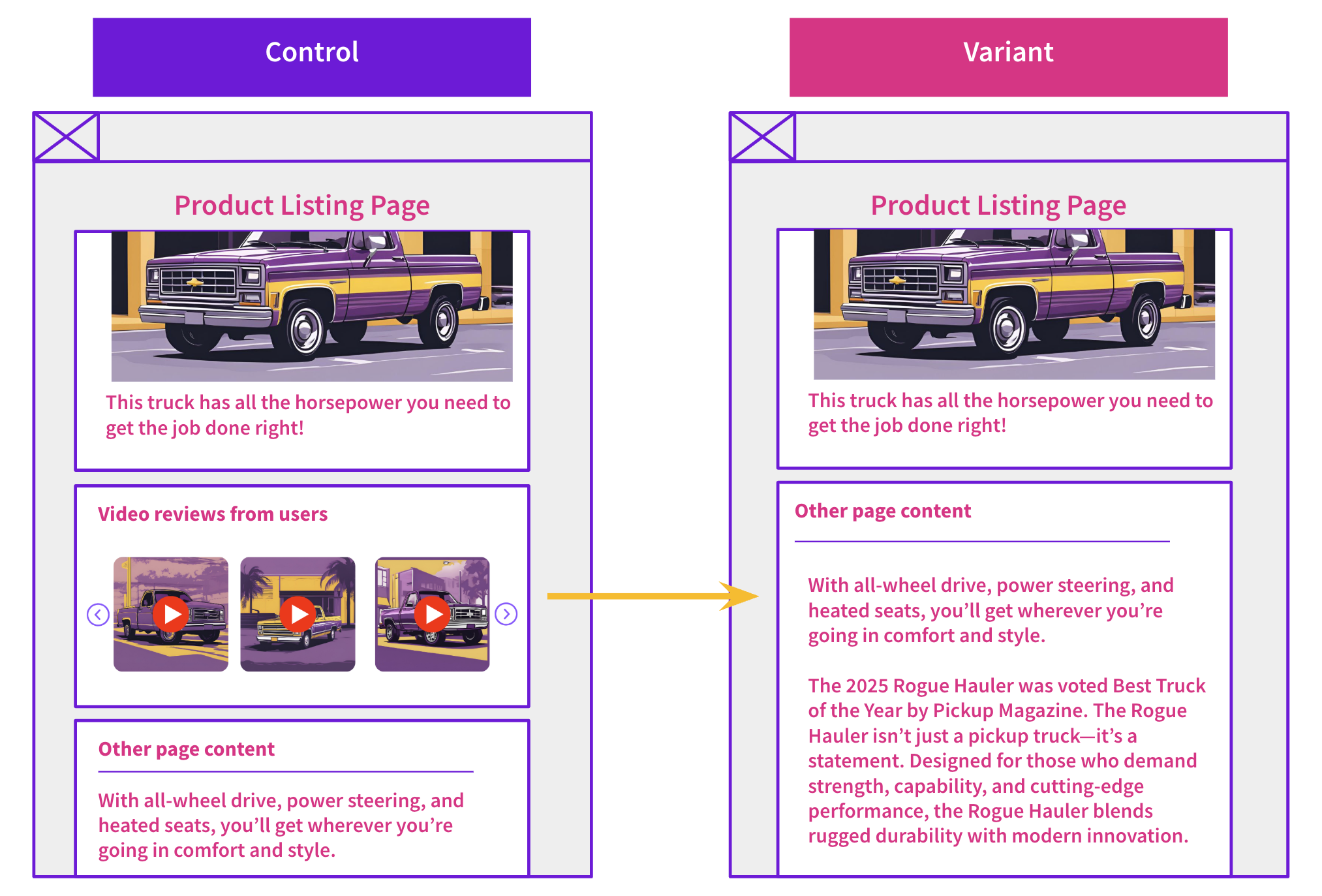

Third-party review platforms let customers freely post reviews of your products and services. They also provide code snippets that you can install on your site to display those ratings to visitors.

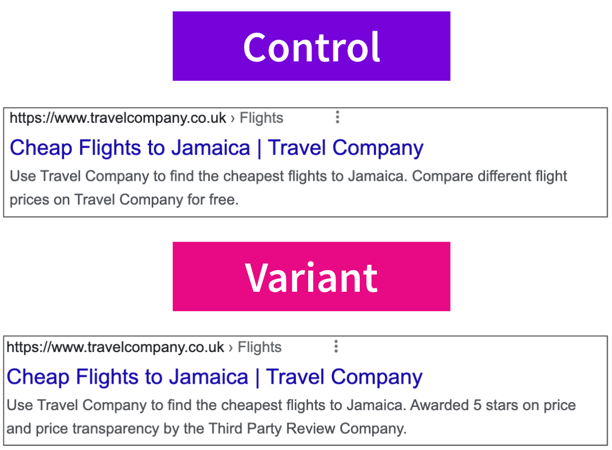

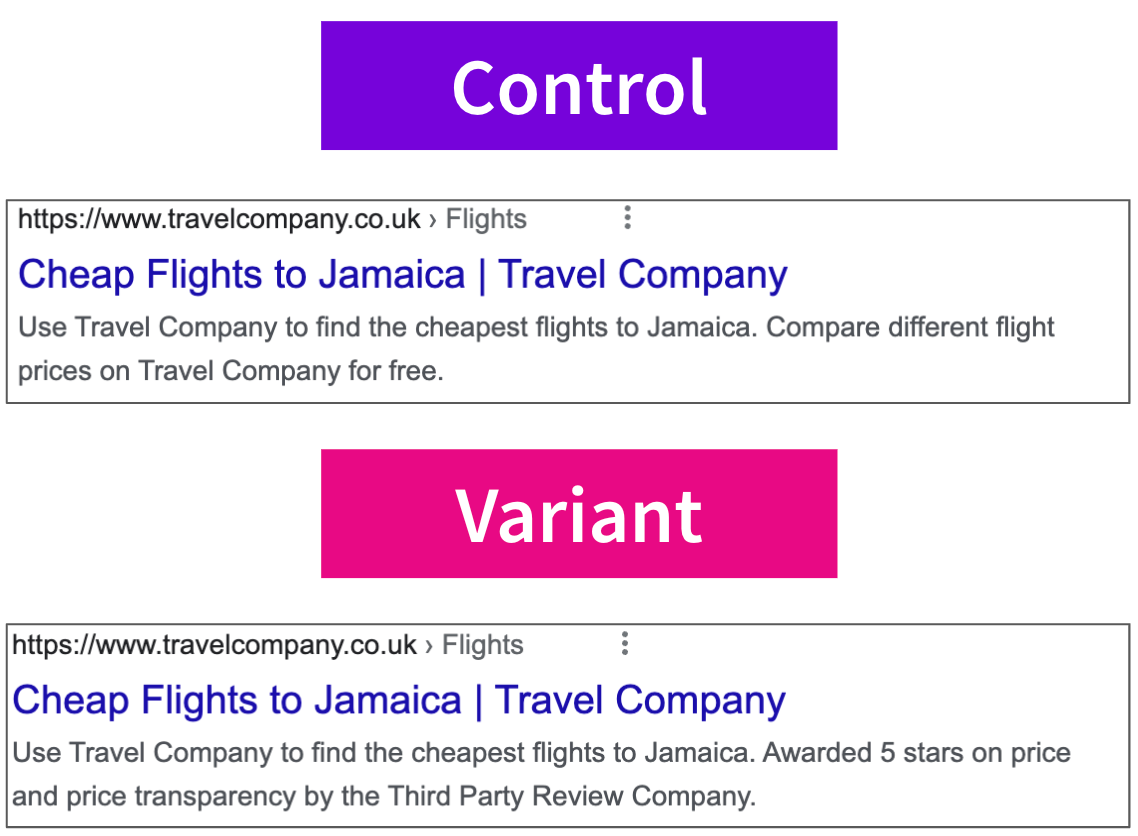

This has led to SEO tests that inject third-party reviews onto pages, in the hope of either boosting E-A-T (expertise, authoritativeness, trustworthiness) signals to improve rankings or increasing click-through rates. One of our customers decided to test the effect on click-through rates by adding positive ratings from third-party review sites to the meta descriptions of their flights pages, which previously didn't include any rating information.

Our hypothesis was that, because the meta description would display the rating in the search engine results page, more users would be encouraged to click through to the page. The rating would build trust in the brand and entice users, leading to an increase in click-through rate and organic traffic.

Our customer tested this by injecting positive price and price transparency ratings into their meta descriptions in the German market.

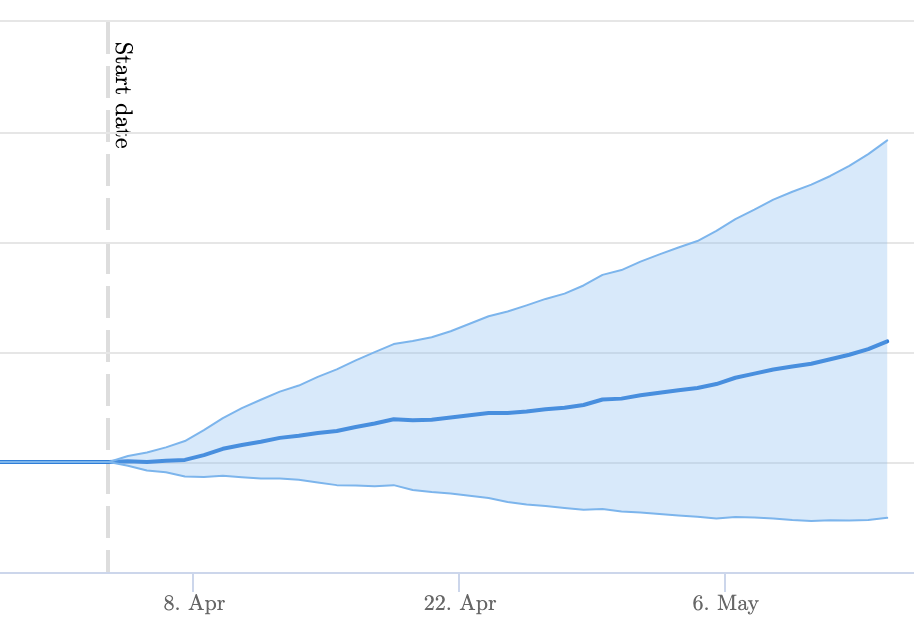

Google respected the changes to the meta descriptions. The impact on organic traffic was inconclusive at the 95% confidence level, but positive at a lower confidence level. Although the result is not statistically significant, many real uplifts are too small to detect with statistical significance, which is why in instances like this we may choose to default to deploy.

Default to deploy is our internal approach to interpreting tests that rest on a solid hypothesis but fail to reach statistical significance. When a change improves our customer's site and its visibility, and needs few resources to implement, deploying it lets us take advantage of many small gains. These small gains compound, moving our customers' sites into a position that search engines will value more highly in the future.
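To make the reasoning concrete, the decision can be sketched as a small function. This is a minimal, hypothetical sketch and not SearchPilot's actual analysis: it assumes an uplift estimate and its standard error are already available, uses a simple normal approximation for the intervals, and picks 90% as an illustrative "lower" confidence level. The names `interval` and `decide` are made up for this example, and the numbers in the usage line are invented, not this test's results.

```python
from statistics import NormalDist


def interval(estimate, std_error, confidence):
    """Two-sided normal-approximation interval for an estimated relative uplift."""
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    return estimate - z * std_error, estimate + z * std_error


def decide(estimate, std_error, low_effort, strong_hypothesis, lower_confidence=0.90):
    """Hypothetical 'default to deploy' rule; the 90% threshold is an assumption."""
    lo95, hi95 = interval(estimate, std_error, 0.95)
    if lo95 > 0:
        return "deploy"  # positive at the 95% confidence level
    if hi95 < 0:
        return "do not deploy"  # negative at the 95% confidence level
    lo_lower, _ = interval(estimate, std_error, lower_confidence)
    # Positive only at a lower confidence level: deploy if the change is cheap
    # and the hypothesis behind it is solid.
    if lo_lower > 0 and low_effort and strong_hypothesis:
        return "default to deploy"
    return "inconclusive"


# Invented numbers: a 4% estimated uplift with a 2.2% standard error is
# inconclusive at 95% but positive at 90%.
print(decide(0.04, 0.022, low_effort=True, strong_hypothesis=True))  # default to deploy
```

The extra conditions (`low_effort`, `strong_hypothesis`) mirror the two criteria in the text: a positive-but-not-significant result alone is not treated as enough to deploy.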

These results also make a case for testing many other changes related to third-party reviews. Do visitors engage differently depending on the review site? Does having a third-party review snippet on the page help improve rankings? Would these meta description changes have performed better in other markets?

To receive more insights from our testing, sign up for our case study mailing list, and please feel free to get in touch if you want to learn more about this test or our split-testing platform.

How our SEO split tests work

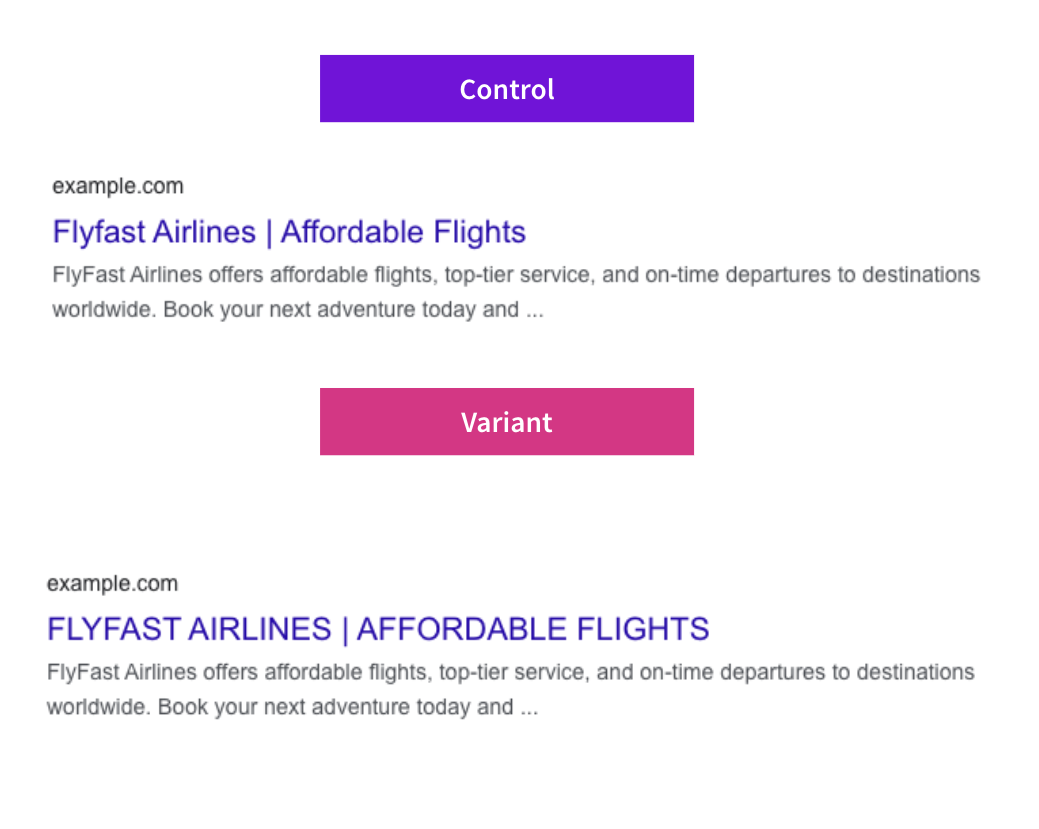

The most important thing to know is that our case studies are based on controlled experiments with control and variant pages:

- Because we measure changes in the performance of the variant pages relative to the control pages, we know that the measured effect was not caused by seasonality, sitewide changes, Google algorithm updates, competitor changes, or any other external impact.

- The statistical analysis compares the actual outcome to a forecast, and comes with a confidence interval so we know how certain we are that the effect is real.

- We measure the impact on organic traffic in order to capture changes to rankings and/or changes to click-through rate.
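The idea of comparing the variant's actual traffic to a forecast built from the control pages can be illustrated with a deliberately simplified sketch. This is an assumption-laden stand-in, not SearchPilot's forecasting model: it forecasts variant traffic by scaling post-launch control traffic by the pre-launch variant/control ratio, then builds a normal-approximation confidence interval from day-to-day variation, treating days as independent (real traffic is autocorrelated, so this understates uncertainty). The function name `forecast_uplift` and the sample data are hypothetical.

```python
from statistics import NormalDist, mean, stdev


def forecast_uplift(control_pre, variant_pre, control_post, variant_post, confidence=0.95):
    """Hypothetical simplified analysis: relative uplift of variant pages vs a control-based forecast."""
    # Pre-launch relationship between the two page groups.
    ratio = sum(variant_pre) / sum(control_pre)
    # Forecast: what the variant pages would have done without the change.
    # Seasonality or sitewide shifts that move the control pages move the forecast too.
    forecast = [c * ratio for c in control_post]
    # Daily relative difference between actual and forecast variant traffic.
    daily = [(v - f) / f for v, f in zip(variant_post, forecast)]
    estimate = mean(daily)
    std_error = stdev(daily) / len(daily) ** 0.5  # assumes independent days
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    return estimate, (estimate - z * std_error, estimate + z * std_error)


# Made-up data: both groups rise seasonally (control 100 -> 120 per day),
# while the variant pages rise further, to about 132 per day.
control_pre, variant_pre = [100] * 10, [100] * 10
control_post = [120] * 10
variant_post = [132, 130, 134, 131, 133, 132, 130, 134, 131, 133]
estimate, (low, high) = forecast_uplift(control_pre, variant_pre, control_post, variant_post)
print(round(estimate, 3), round(low, 3), round(high, 3))  # 0.1 0.092 0.108
```

The 20% seasonal rise shared by both groups does not show up as uplift; only the extra movement of the variant pages relative to the forecast does.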

Read more about how SEO testing works or get a demo of the SearchPilot platform.