Start here: how our SEO split tests work

If you aren't familiar with how we run the controlled SEO experiments that form the basis of all our case studies, you might find it useful to read the explanation at the end of this article before digesting the details of the case study below. If you'd like to get a new case study by email every two weeks, just enter your email address here.

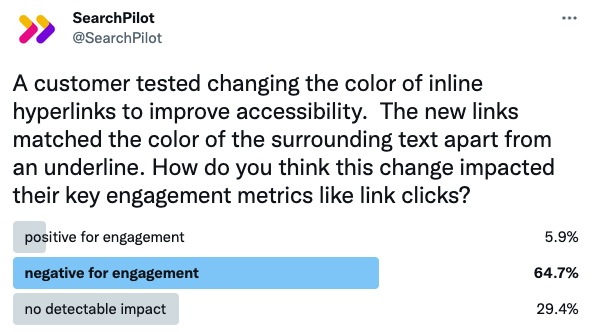

For the first #SPQuiz of the new year, we asked our Twitter followers what they thought happened to engagement when we changed inline hyperlinks on article pages to be a similar color to that of the surrounding text. Here’s what they thought:

The majority of those who voted believed this type of change would have a negative impact on key engagement metrics, and they were right! Continue to the full case study below for the details on SEO and CRO impact.

The Case Study

One of our customers tested changing the color of inline hyperlinks on article pages from a shade of blue to black, a similar color to the surrounding text. They hypothesized that increasing the color contrast of inline hyperlinks against the background would improve readability and possibly enhance the overall accessibility of the content. For SEO specifically, they expected the impact to be negligible and were more concerned about how the change could affect engagement metrics on the page. So, we decided to run this as a full funnel test, measuring the impact on these pages’ organic traffic and conversion rates.

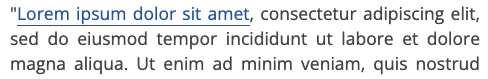

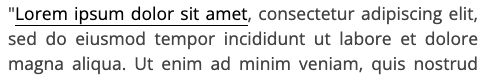

The color contrast of the article pages’ inline hyperlinks was already rated as accessible by Chrome DevTools, whose contrast checks are guided by the W3C Web Content Accessibility Guidelines (WCAG). However, the goal of the test was to increase the color contrast of the links against the background even further, aiming to also improve readability. On control article pages, the inline hyperlinks were a shade of blue, while on variant pages they were changed to black. Both versions were displayed against a white background.

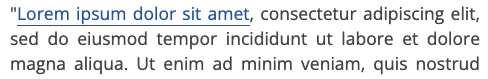

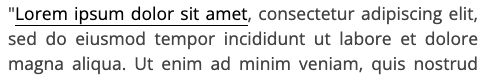

Note that the text surrounding the hyperlinks was dark grey: not quite the black that the hyperlinks were changed to on the variant pages, but very similar. In summary, this change increased the color contrast of the links against the background, but reduced the contrast between the links and the surrounding text. Below are examples of what the hyperlinks looked like on the control and variant pages.

[Example screenshots: Control | Variant]
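The contrast trade-off described above can be sketched numerically with the WCAG contrast-ratio formula. This is a minimal illustration, not the customer's actual measurement: the article does not give the exact colors, so the hex values for the blue links and the dark grey body text below are assumptions chosen only for demonstration. Keep in mind that this ratio compares lightness only and ignores hue, so it is a rough proxy for how easily a reader can tell a link apart from the text around it.

```python
def _linearize(channel_8bit: int) -> float:
    """Convert an 8-bit sRGB channel to linear light (WCAG 2.x definition)."""
    c = channel_8bit / 255
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(hex_color: str) -> float:
    """Relative luminance of a '#RRGGBB' color, from 0 (black) to 1 (white)."""
    h = hex_color.lstrip("#")
    r, g, b = (int(h[i:i + 2], 16) for i in (0, 2, 4))
    return 0.2126 * _linearize(r) + 0.7152 * _linearize(g) + 0.0722 * _linearize(b)


def contrast_ratio(color_a: str, color_b: str) -> float:
    """WCAG contrast ratio between two colors (order does not matter)."""
    la, lb = relative_luminance(color_a), relative_luminance(color_b)
    lighter, darker = max(la, lb), min(la, lb)
    return (lighter + 0.05) / (darker + 0.05)


WHITE = "#FFFFFF"      # page background (stated in the article)
BLACK = "#000000"      # variant link color (stated in the article)
BLUE = "#1A73E8"       # ASSUMED control link color; the real shade is not given
DARK_GREY = "#333333"  # ASSUMED body text color; the real shade is not given

comparisons = [
    ("control link vs background", BLUE, WHITE),
    ("variant link vs background", BLACK, WHITE),
    ("control link vs body text", BLUE, DARK_GREY),
    ("variant link vs body text", BLACK, DARK_GREY),
]

for label, foreground, other in comparisons:
    print(f"{label}: {contrast_ratio(foreground, other):.2f}:1")
```

With these assumed colors, the variant link gains contrast against the white background while losing contrast against the body text, which is the pattern the test set out to evaluate. Different shades of blue or grey would give different numbers.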

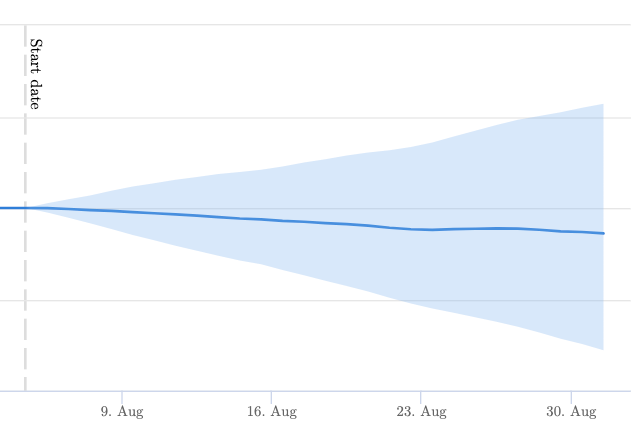

After running this test, here’s how SEO performance played out.

Unsurprisingly, this change had no detectable impact on these pages’ organic traffic.

However, since we ran this experiment as a full funnel test, we also analyzed key engagement metrics for these pages. It turns out that changing the color of inline hyperlinks in this way negatively affected the majority of the key metrics measured, including engagement with the links and average time on page.

Given that the variant hyperlink text was a similar color to the surrounding text, it was probably harder for users to distinguish links within the article. So while the color contrast between links and the background increased on variant pages (and possibly improved readability), the change could also have detracted from the overall user experience, as reflected in the impact on key conversion rate metrics.

With Google's page experience update emphasizing better overall user experience on sites, it’s interesting to note that in this test SEO performance was not directly affected, even though most of the key engagement metrics declined.

To find out more about full funnel testing, feel free to reach out to us for a demo of the SearchPilot platform.

How our SEO split tests work

The most important thing to know is that our case studies are based on controlled experiments with control and variant pages:

- By detecting changes in performance of the variant pages compared to the control, we know that the measured effect was not caused by seasonality, sitewide changes, Google algorithm updates, competitor changes, or any other external impact.

- The statistical analysis compares the actual outcome to a forecast, and comes with a confidence interval so we know how certain we are that the effect is real.

- We measure the impact on organic traffic in order to capture changes to rankings and/or changes to clickthrough rate (more here).
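To make the "actual versus forecast" idea in the list above more concrete, here is a deliberately simplified sketch. It is not SearchPilot's model, which this article does not describe. It assumes the forecast for the variant pages is just the control traffic scaled by the pre-test ratio between the two groups, and it uses a normal approximation for the confidence interval. The function name, parameters, and all traffic figures are hypothetical.

```python
from statistics import NormalDist, mean, stdev


def estimate_uplift(control_pre, variant_pre, control_post, variant_post,
                    confidence=0.95):
    """Estimate relative uplift of variant pages versus a naive forecast.

    Returns (mean_uplift, (lower_bound, upper_bound)) as fractions,
    e.g. 0.05 means +5%.
    """
    # Learn how variant traffic related to control traffic before the change.
    ratio = sum(variant_pre) / sum(control_pre)

    # Naive forecast: what variant traffic would have been with no change.
    forecast = [c * ratio for c in control_post]

    # Daily relative difference between actual and forecast traffic.
    diffs = [(actual - f) / f for actual, f in zip(variant_post, forecast)]

    uplift = mean(diffs)
    std_error = stdev(diffs) / len(diffs) ** 0.5
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    return uplift, (uplift - z * std_error, uplift + z * std_error)


# HYPOTHETICAL daily organic sessions, invented purely for illustration.
control_pre = [1000, 1040, 980, 1010, 995, 1020, 1005]
variant_pre = [990, 1035, 985, 1000, 1000, 1015, 1010]
control_post = [1010, 1030, 990, 1000, 1025, 1015, 995]
variant_post = [1005, 1040, 980, 1010, 1015, 1020, 990]

uplift, (low, high) = estimate_uplift(
    control_pre, variant_pre, control_post, variant_post
)
print(f"Estimated uplift: {uplift:+.2%} (95% CI {low:+.2%} to {high:+.2%})")
if low <= 0 <= high:
    print("Interval includes zero: no detectable impact.")
```

Because external factors such as seasonality or algorithm updates affect control and variant pages alike, they largely cancel out in the comparison. When the interval spans zero, as in the SEO result of this case study, the test reports no detectable impact rather than a positive or negative one.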

Read more about how SEO testing works or get a demo of the SearchPilot platform.