Start here: how our SEO split tests work

If you aren't familiar with the fundamentals of how we run the controlled SEO experiments that form the basis of all our case studies, you might find it useful to read the explanation at the end of this article before digesting the details of the case study below.

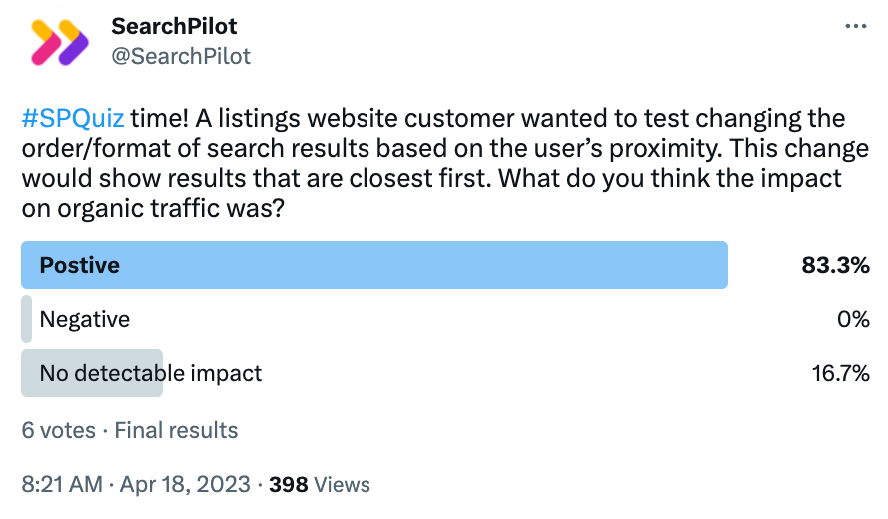

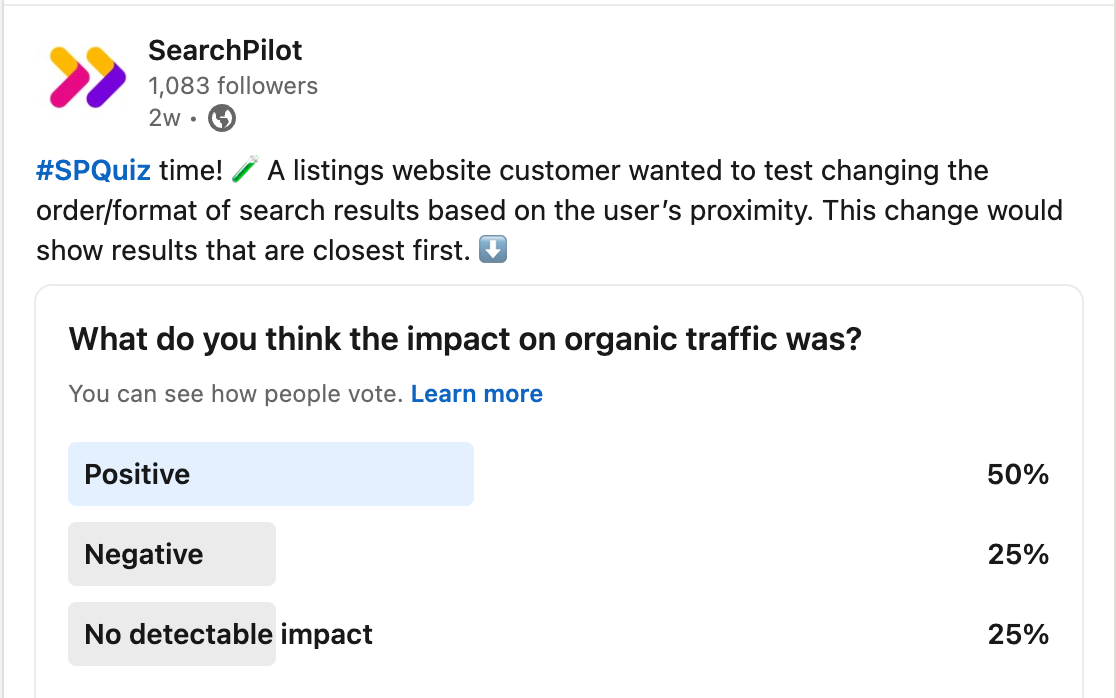

In this week’s #SPQUIZ case study, we asked our followers whether they thought we could improve organic traffic by removing ad listings for locations other than the one the page targets. How did this change impact SEO performance?

Here is what our followers thought:

Twitter:

LinkedIn:

The Case Study

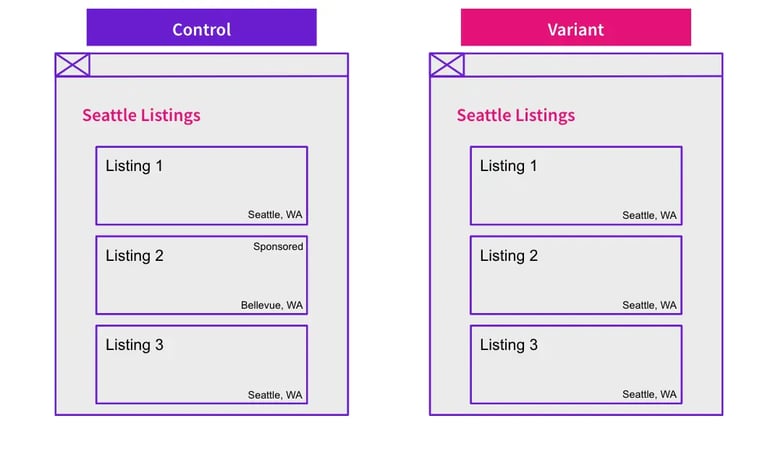

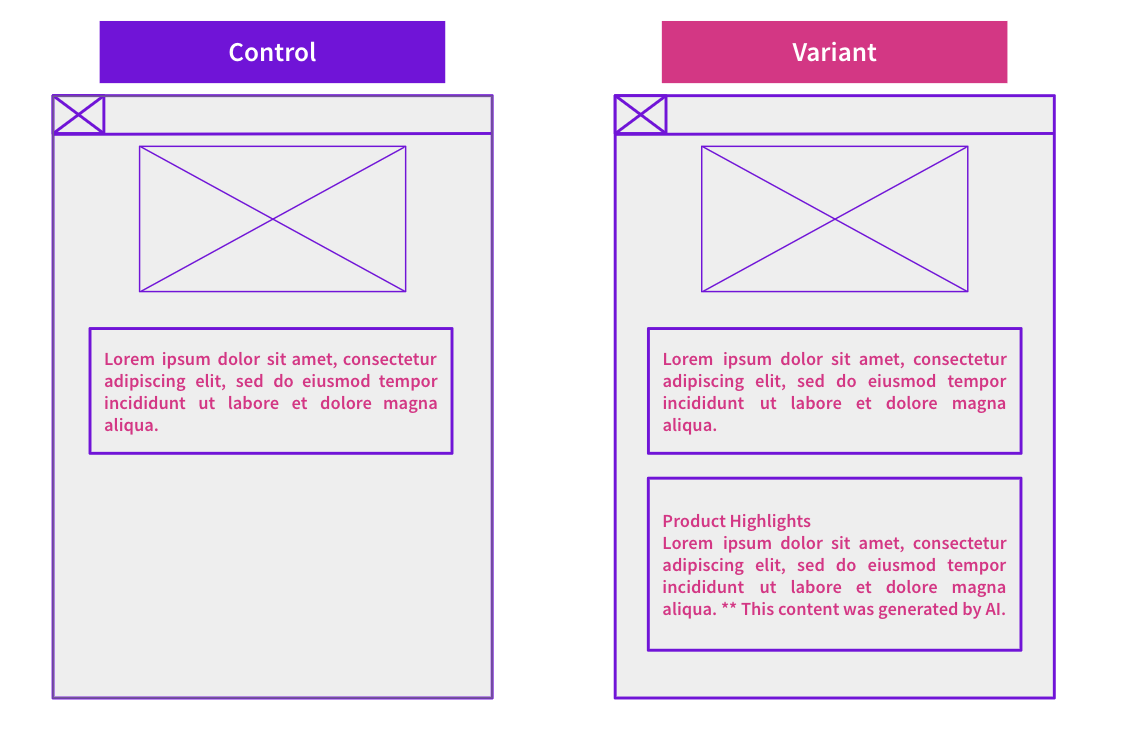

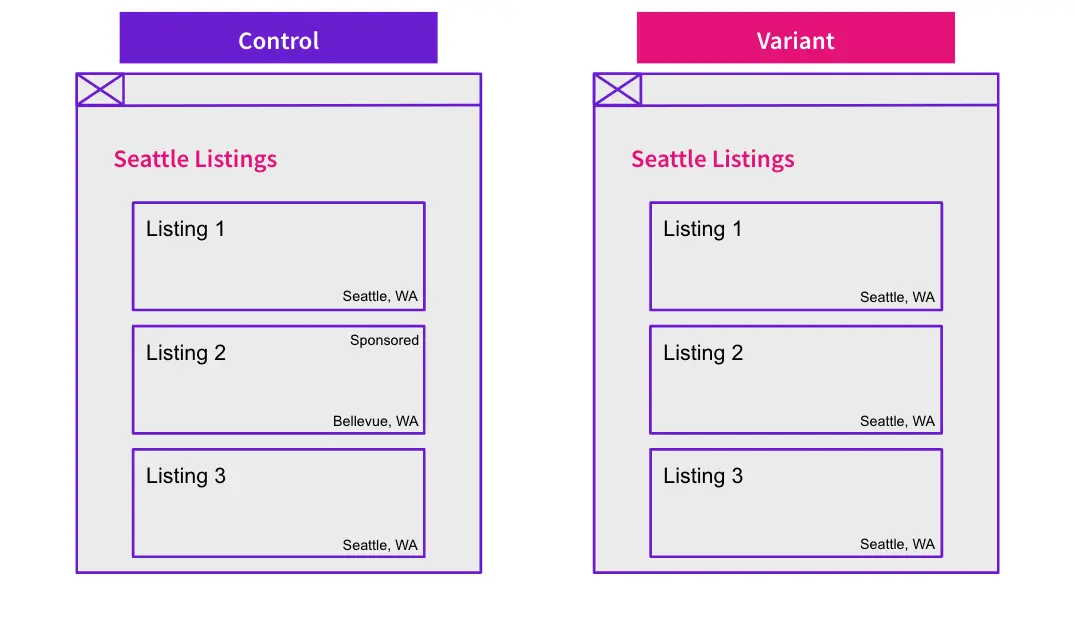

Matching page content to search intent is a core principle of SEO, which is why we ran a test to determine how well our customer was performing in this area. In this case study, we look at a test run by one of our customers with a listings site, which tested whether removing ad listings from outside the page's location would better match search intent. For example, a page such as ‘listings in Seattle’ had its ads for listings in Bellevue and other neighbouring cities removed.

We tested this by using request modification to add a request header to variant pages, which instructed the host server to return the new listings pages, without the out-of-location ads, in place of the existing version.
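The header-driven mechanism can be sketched as follows. This is a minimal illustration, not the customer's or SearchPilot's actual implementation: the header name `X-Variant`, its value `"1"`, the `Listing` shape and the `render_listings` function are all hypothetical. The origin server looks for the header that request modification adds to variant pages and, if it is present, drops ad listings whose city differs from the page's city; control pages are served unchanged.

```python
from dataclasses import dataclass

# Hypothetical header name and value; the real ones used in the test are not documented.
VARIANT_HEADER = "X-Variant"
VARIANT_VALUE = "1"


@dataclass
class Listing:
    title: str
    city: str
    is_ad: bool


def render_listings(page_city: str, listings: list[Listing], headers: dict[str, str]) -> list[Listing]:
    """Return the listings to show on a location page such as 'listings in Seattle'.

    Control pages (no header) get every listing unchanged. Variant pages, flagged by
    the header added through request modification, drop ads for other cities.
    """
    # HTTP header names are case-insensitive, so compare them in lower case.
    normalized = {name.lower(): value for name, value in headers.items()}
    if normalized.get(VARIANT_HEADER.lower()) != VARIANT_VALUE:
        return listings  # control: existing version of the page
    return [
        item
        for item in listings
        # Keep organic listings and any ad in the page's own city.
        if not (item.is_ad and item.city.casefold() != page_city.casefold())
    ]


if __name__ == "__main__":
    inventory = [
        Listing("Capitol Hill apartment", "Seattle", is_ad=False),
        Listing("Sponsored: Bellevue condo", "Bellevue", is_ad=True),
        Listing("Sponsored: Ballard loft", "Seattle", is_ad=True),
    ]
    # Control keeps all three listings; variant drops the Bellevue ad.
    print([l.title for l in render_listings("Seattle", inventory, {})])
    print([l.title for l in render_listings("Seattle", inventory, {VARIANT_HEADER: VARIANT_VALUE})])
```

Keeping the control path as an early return means pages outside the variant group are byte-for-byte identical to the existing site, which is what a fair control comparison needs.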

The hypothesis was that these ad listings did not match users' search intent, which led to higher bounce rates. We expected one main lever through which this change could increase organic traffic: improved rankings. Users might find that the content on these listings pages better aligned with what they had originally searched for, leading to lower bounce rates and therefore better user signals.

This is how the pages differed between control and variant:

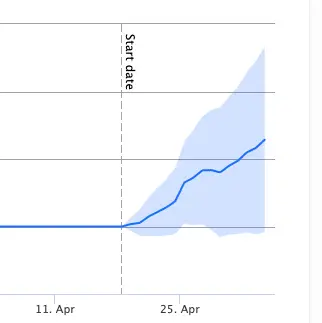

Here’s what the test result shows:

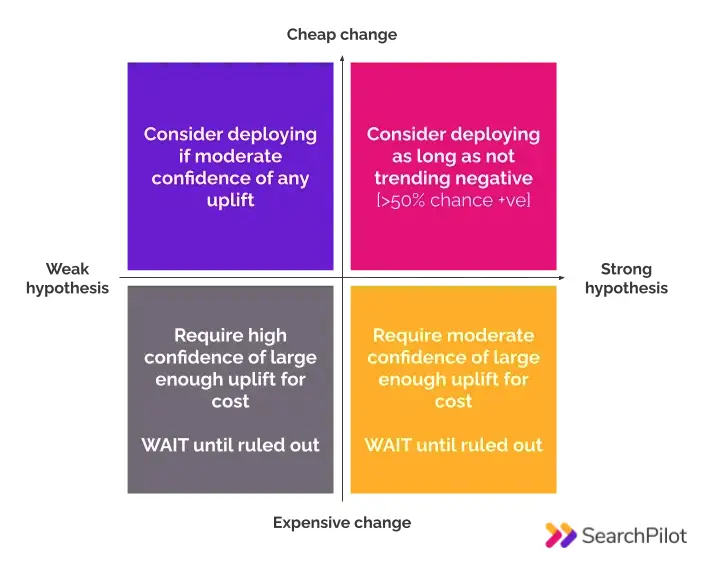

This test ended with an inconclusive result: no detectable impact on organic traffic after running for 16 days. You may be looking at the graph and thinking that it looks pretty positive, but even at the lower 90% confidence level the result was still inconclusive. At this point, it’s worth remembering that we are doing business, not science, so we needed to weigh the strength of our hypothesis against the cost of implementing the change to decide whether to deploy it.
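Why a positive-looking graph can still be "inconclusive" can be sketched in a few lines. Assuming, for illustration only, that the estimated uplift is approximately normally distributed with a known standard error, a result counts as positive or negative only when the whole confidence interval sits on one side of zero. Dropping from 95% to 90% confidence narrows the interval, but it can still straddle zero. The uplift and standard error below are made-up numbers, not this test's data, and this is not SearchPilot's actual statistical model.

```python
from statistics import NormalDist


def confidence_interval(uplift: float, std_error: float, level: float) -> tuple[float, float]:
    """Two-sided confidence interval for an estimated uplift, assuming normality."""
    z = NormalDist().inv_cdf(0.5 + level / 2)  # about 1.645 for 90%, 1.960 for 95%
    return uplift - z * std_error, uplift + z * std_error


def classify(uplift: float, std_error: float, level: float) -> str:
    """Call a result only when the interval excludes zero."""
    low, high = confidence_interval(uplift, std_error, level)
    if low > 0:
        return "positive"
    if high < 0:
        return "negative"
    return "inconclusive"  # the interval still includes zero


if __name__ == "__main__":
    # Hypothetical numbers: a +6% point estimate with a 4% standard error.
    for level in (0.95, 0.90):
        low, high = confidence_interval(0.06, 0.04, level)
        print(f"{level:.0%}: [{low:+.1%}, {high:+.1%}] -> {classify(0.06, 0.04, level)}")
```

With these illustrative inputs both intervals have a slightly negative lower bound, so the estimate looks positive while the test remains inconclusive at either confidence level.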

While we felt that the hypothesis was decent, the revenue lost by removing the ad placements outweighed the potential SEO benefit of the change. This put the test right between the grey and yellow quadrants, so the customer decided not to deploy the change permanently.

To receive more insights from our testing, sign up for our case study mailing list, and please feel free to get in touch if you want to learn more about this test or our split-testing platform.

How our SEO split tests work

The most important thing to know is that our case studies are based on controlled experiments with control and variant pages:

- By detecting changes in performance of the variant pages compared to the control, we know that the measured effect was not caused by seasonality, sitewide changes, Google algorithm updates, competitor changes, or any other external impact.

- The statistical analysis compares the actual outcome to a forecast and comes with a confidence interval, so we know how certain we are that the effect is real.

- We measure the impact on organic traffic in order to capture changes to rankings and/or changes to clickthrough rate.
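To make the forecast idea concrete, here is a deliberately simplified sketch, not SearchPilot's actual model: it forecasts variant-page traffic during the test by scaling control-page traffic by the variant/control ratio observed before the test, then reports how far actual variant traffic lands above or below that forecast. Because the forecast moves with the control group, sitewide shifts such as seasonality or an algorithm update affect both sides and largely cancel out. The function name `forecast_uplift` and all numbers are hypothetical, and a real analysis would also attach a confidence interval to the estimate.

```python
def forecast_uplift(
    control_pre: list[float],
    variant_pre: list[float],
    control_test: list[float],
    variant_test: list[float],
) -> float:
    """Estimate the relative uplift of variant pages against a control-based forecast.

    Simplified ratio model (an assumption for illustration): during the test,
    expected variant traffic = control traffic * (pre-test variant/control ratio).
    """
    ratio = sum(variant_pre) / sum(control_pre)
    forecast = [c * ratio for c in control_test]
    return sum(variant_test) / sum(forecast) - 1


if __name__ == "__main__":
    # Hypothetical daily organic sessions. Both groups rise in the test period
    # (e.g. seasonality), but the variant rises slightly more than the control.
    control_pre = [1000, 1020, 980, 1000]
    variant_pre = [500, 510, 490, 500]
    control_test = [1200, 1180, 1220, 1200]
    variant_test = [630, 620, 640, 630]
    uplift = forecast_uplift(control_pre, variant_pre, control_test, variant_test)
    print(f"uplift vs forecast: {uplift:+.1%}")  # prints "uplift vs forecast: +5.0%"
```

Here both groups gain about 20% from the sitewide rise, but only the extra 5% on the variant pages is attributed to the change.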

Read more about how SEO testing works or get a demo of the SearchPilot platform.