Start here: how our SEO split tests work

If you aren't familiar with the fundamentals of how we run controlled SEO experiments that form the basis of all our case studies, then you might find it useful to start by reading the explanation at the end of this article before digesting the details of the case study below.

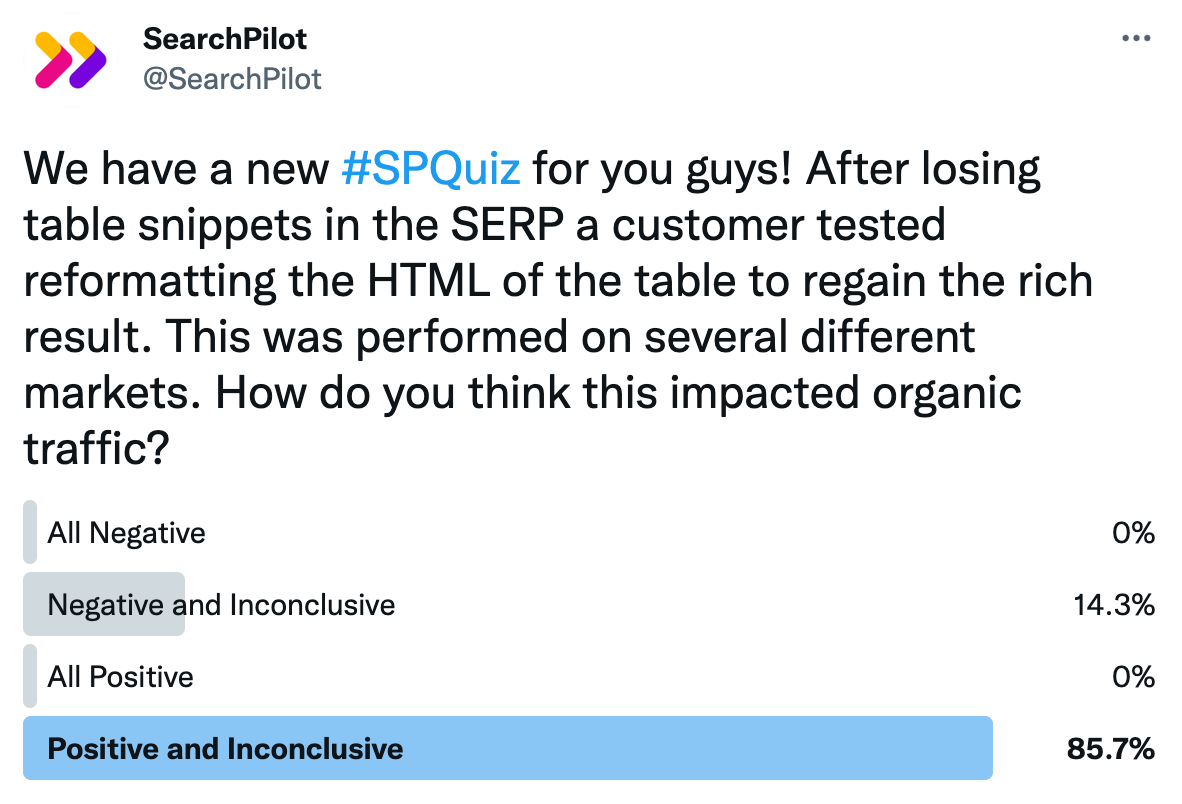

For this week’s #SPQuiz, we asked our Twitter followers what they thought happened when a customer tested reformatting their HTML table to regain the table rich snippets they had previously held in the SERP. This test was run across multiple domains. Here’s what they thought:

There was a strong consensus this week, with over 80% of our followers thinking there would be a mix of positive and inconclusive results. Nobody thought the results would be the same across every domain.

The majority was correct! This test was positive on two of the markets we tested. On the other two there was no detectable impact. However, the customer successfully won back the snippet on all four domains. Read the case study below to learn more.

The Case Study

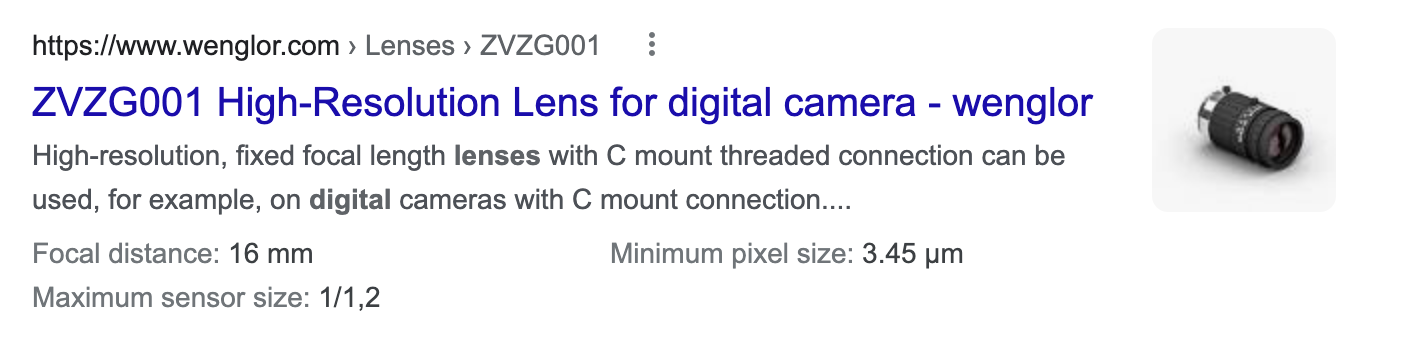

Rich snippets often help to differentiate listings within the Google search results page and drive clicks to specific pages over competitor listings. Information presented in the form of rich snippets can also provide an additional summary of the content found on the page. One example of a rich snippet is when the contents of an HTML table are pulled into the search engine results page (SERP) listing, like this example:

A customer had an HTML table on their pages containing important product information, which Google had previously been pulling into the SERP as a rich snippet. After a while, Google stopped awarding them the snippet, even though they had not made any changes to their HTML. Their competitors were still getting the rich snippet for queries they both ranked for. The customer operates multiple domains across different markets, and they lost the snippet on all of them.

For our customers with multiple domains, we will often run experiments on lower traffic domains first as a proof of concept. This strategy has two main advantages. First, it lets us run riskier tests on less valuable markets; positive results there give us reason to take the same risk on higher traffic markets.

Second, we can run iterations of experiments without blocking the big money-making domains from testing. That is why our customer first tested different changes to the HTML table on a lower traffic domain, trying to regain the rich snippet there.

The customer’s primary goal was to discover how they could edit their HTML to regain the table rich snippet without slowing their testing cadence. Once they found a change that worked, the plan was to test it on other markets. Unless those tests were negative, they intended to deploy any change that won back the rich snippet across all markets.

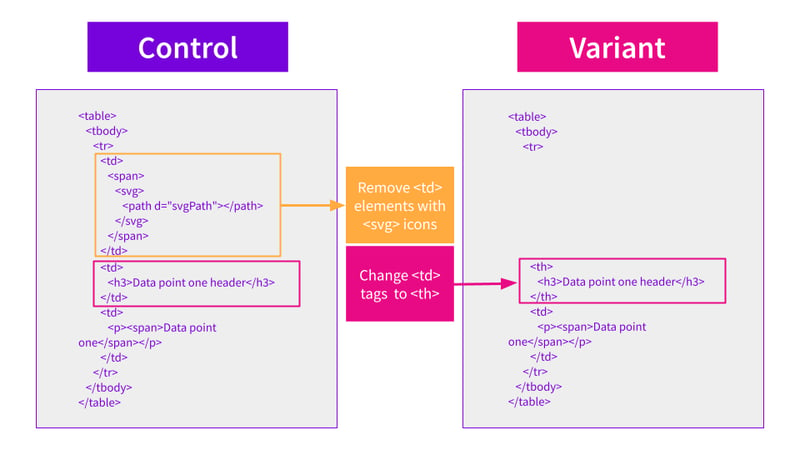

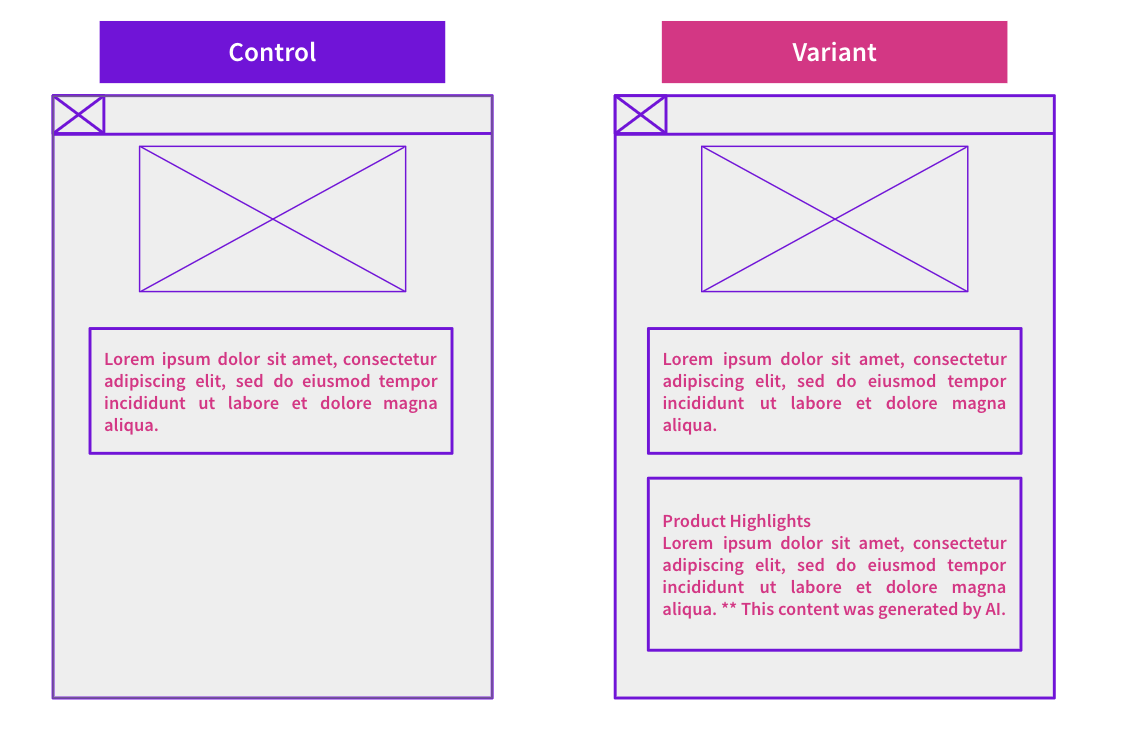

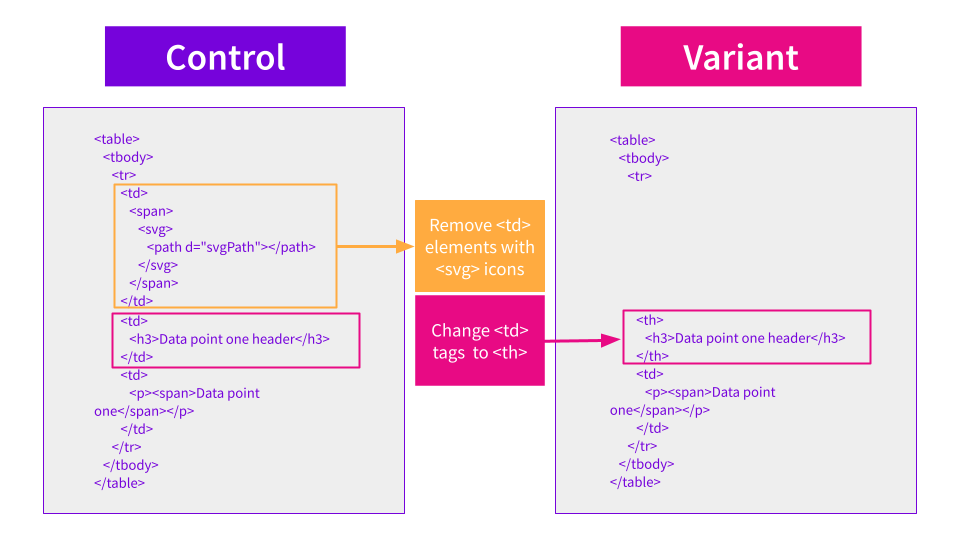

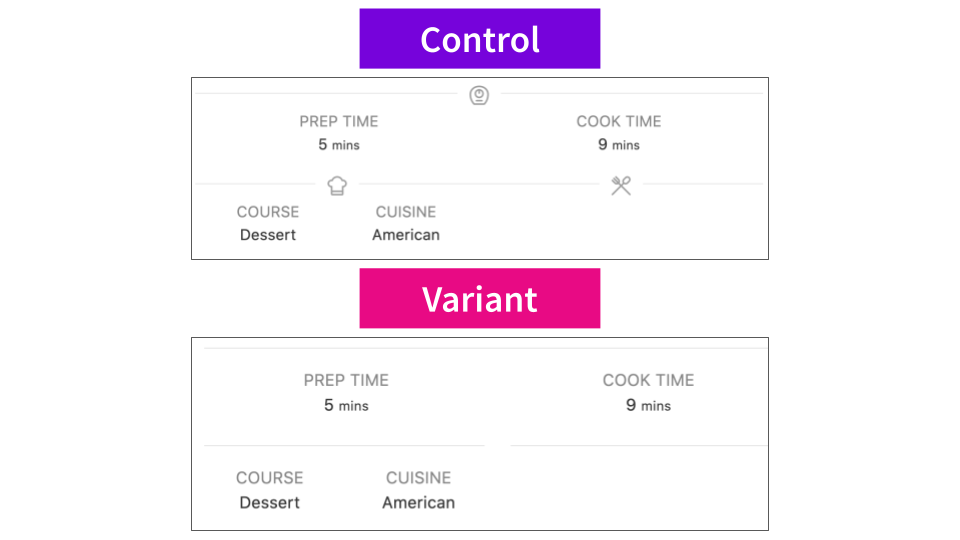

The first iteration they tried was editing the table component markup. Not only was this test inconclusive for organic traffic, but it also failed to win back the snippet. They followed this experiment with a second iteration, in which they reformatted the table to use <th> tags instead of <td> tags for the headers and removed the SVG icons that had been contained within the <td> tags. The data cells were left as <td> tags.

See the changes below and how they appeared in the HTML and on the page:

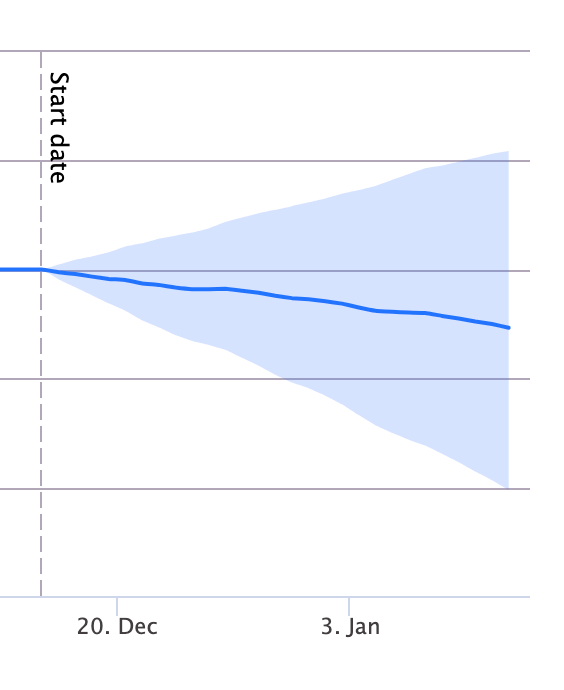
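To make the second iteration's markup change concrete, here is a minimal Python sketch that applies the same kind of transformation to a simplified table: header cells become <th>, SVG icons are stripped, and data cells stay as <td>. The sample markup, the function name `reformat_table`, and the assumption that the first cell of each row is its header (a key/value product table) are all hypothetical; the customer's real markup is only shown in the screenshot above.

```python
import xml.etree.ElementTree as ET


def _local_name(tag: str) -> str:
    # Drop any "{namespace}" prefix, e.g. "{http://www.w3.org/2000/svg}svg" -> "svg"
    return tag.rsplit("}", 1)[-1]


def _strip_svg(cell: ET.Element) -> None:
    # Remove <svg> icons from a cell while keeping any text that followed them
    # (in ElementTree that text lives in the removed element's .tail)
    for child in list(cell):
        if _local_name(child.tag) != "svg":
            continue
        tail = child.tail or ""
        siblings = list(cell)
        idx = siblings.index(child)
        if idx == 0:
            cell.text = (cell.text or "") + tail
        else:
            prev = siblings[idx - 1]
            prev.tail = (prev.tail or "") + tail
        cell.remove(child)


def reformat_table(table_markup: str) -> str:
    """Hypothetical sketch: make each row's label cell a <th> and drop SVG icons.

    Assumes well-formed (XHTML-style) markup and a key/value layout where the
    first cell of every row is the header; data cells stay as <td>.
    """
    table = ET.fromstring(table_markup)
    for row in table.iter("tr"):
        cells = list(row)
        if not cells:
            continue
        for cell in cells:
            _strip_svg(cell)
        if cells[0].tag == "td":
            cells[0].tag = "th"  # header cell: <td> -> <th>
    return ET.tostring(table, encoding="unicode")


if __name__ == "__main__":
    before = (
        "<table><tbody>"
        '<tr><td><svg width="16" height="16"/>Brand</td><td>Acme</td></tr>'
        '<tr><td><svg width="16" height="16"/>Weight</td><td>2 kg</td></tr>'
        "</tbody></table>"
    )
    print(reformat_table(before))
```

ElementTree only accepts well-formed XML, so a version run against real production pages would more likely use a forgiving HTML parser; the standard library keeps this sketch self-contained.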

This iteration succeeded in regaining the snippet in the SERP but was inconclusive for organic traffic. That does not necessarily mean there was no uplift. These iterations were run on a lower traffic domain, and our tools showed that at the 95% confidence level we wouldn’t have been able to detect anything less than a 9% uplift on this level of traffic. The change evidently had a smaller impact than that.
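The 9% figure is a minimum detectable effect: on a lower traffic domain, day-to-day noise is large relative to the traffic, so a small real uplift cannot be separated from chance. The Python sketch below shows the general shape of that calculation under simplifying assumptions made for illustration (independent daily log residuals with constant variance, a normal approximation, and no allowance for statistical power). It is not necessarily how SearchPilot's tools compute the figure, and the function name `min_detectable_uplift` and the sample residuals are hypothetical.

```python
from math import expm1, sqrt
from statistics import NormalDist, stdev


def min_detectable_uplift(daily_log_residuals, test_days, confidence=0.95):
    """Hypothetical sketch: smallest uplift whose confidence interval excludes zero.

    Assumes daily log-ratio residuals (variant traffic vs. its forecast) are
    independent with constant variance, and ignores statistical power.
    """
    if len(daily_log_residuals) < 2:
        raise ValueError("need at least two residuals to estimate noise")
    sigma = stdev(daily_log_residuals)                   # daily noise level
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)  # about 1.96 for 95%
    half_width = z * sigma / sqrt(test_days)             # CI half-width, log scale
    return expm1(half_width)                             # as a fraction, 0.09 == 9%


if __name__ == "__main__":
    # Hypothetical residuals: +/-5% daily swings around the forecast
    residuals = [0.05, -0.05] * 14
    print(f"{min_detectable_uplift(residuals, test_days=28):.1%}")
```

Noisier daily data (typical of lower traffic) raises the threshold, while a longer test lowers it roughly with the square root of the number of days.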

Our customer then launched the same change as a test on three other markets, including their largest market. See the results below for how this test played out across different markets:

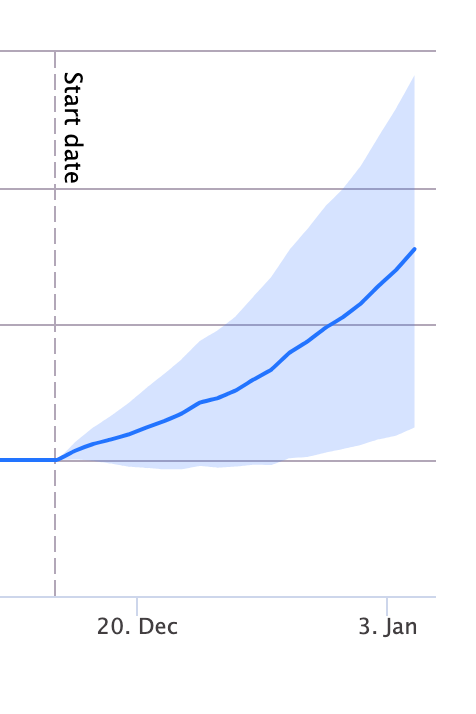

United Kingdom

This test yielded an estimated +3% uplift to the UK market’s organic traffic.

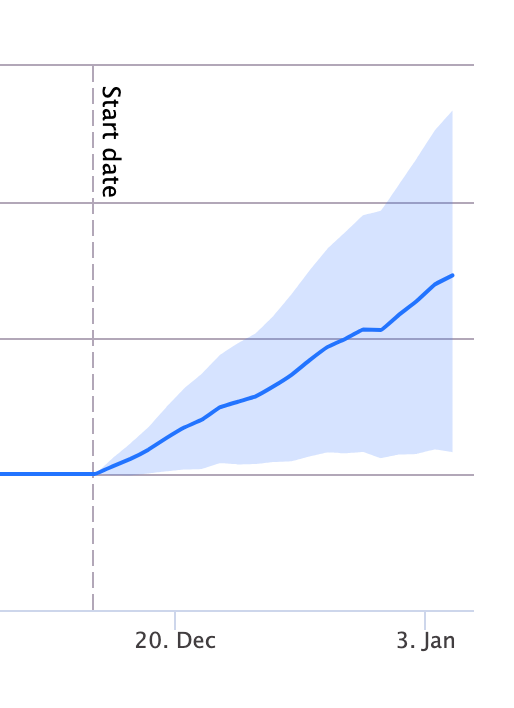

Spain

This test resulted in an estimated +7% uplift to the Spain market’s organic traffic.

Australia

There was no detectable impact to organic traffic in Australia.

On two markets, United Kingdom and Spain, this test resulted in a statistically significant and positive impact to organic traffic, yielding an estimated uplift of +3% and +7% respectively.

The other market, Australia, showed no detectable impact to organic traffic, although they did regain the rich result there too.

Winning additional organic traffic confirmed their hypothesis that this rich snippet was important for their organic traffic, and it also allowed them to make a strong business case for getting this change implemented.

SEO testing not only helps our customers discover ways to improve their organic traffic; it can also prove the impact of SEO changes, which helps prioritise them in the development queue. This series of tests demonstrates how SEO testing can provide value for your company beyond just winning more organic traffic.

Following this series of tests, they deployed the change not only to the four markets where we ran the experiment but across all of their domains, which means the true impact of the change was likely higher.

This series is another example of how test results can vary across markets, but it also shows how companies can use their lower traffic domains to achieve wins in more valuable markets. With SearchPilot, they were able to iterate quickly and efficiently on their first test, running several similar tests to find the approach that worked and ultimately driving more organic traffic to their larger markets.

How our SEO split tests work

The most important thing to know is that our case studies are based on controlled experiments with control and variant pages:

- By detecting changes in performance of the variant pages compared to the control, we know that the measured effect was not caused by seasonality, sitewide changes, Google algorithm updates, competitor changes, or any other external impact.

- The statistical analysis compares the actual outcome to a forecast, and comes with a confidence interval so we know how certain we are the effect is real.

- We measure the impact on organic traffic in order to capture changes to rankings and/or changes to clickthrough rate.
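As a rough illustration of the idea in the list above (a forecast built from control pages, compared with what the variant pages actually did, plus a confidence interval), here is a deliberately simple Python sketch. The ratio-based forecast and the percentile bootstrap are swapped-in techniques chosen for brevity; they are not SearchPilot's model, which this article does not describe, and the function name `estimate_uplift` and its inputs (aligned daily organic sessions for each group) are hypothetical.

```python
import random


def estimate_uplift(control_pre, variant_pre, control_post, variant_post,
                    n_boot=2000, seed=0):
    """Hypothetical sketch: compare variant traffic with a control-based forecast.

    Inputs are aligned daily organic sessions. Returns (uplift, ci_low, ci_high)
    as fractions, e.g. 0.03 == +3%.
    """
    if len(control_post) != len(variant_post) or not variant_post:
        raise ValueError("post-period series must be non-empty and aligned")
    # Forecast: variant traffic tracks control traffic at the pre-period ratio
    ratio = sum(variant_pre) / sum(control_pre)
    forecast = [c * ratio for c in control_post]
    uplift = sum(variant_post) / sum(forecast) - 1

    # Percentile bootstrap over post-period days for a rough 95% interval.
    # It ignores uncertainty in the pre-period ratio, so it is optimistic.
    rng = random.Random(seed)
    n = len(variant_post)
    samples = []
    for _ in range(n_boot):
        days = [rng.randrange(n) for _ in range(n)]
        actual = sum(variant_post[d] for d in days)
        expected = sum(forecast[d] for d in days)
        samples.append(actual / expected - 1)
    samples.sort()
    ci_low = samples[int(0.025 * n_boot)]
    ci_high = samples[int(0.975 * n_boot) - 1]
    return uplift, ci_low, ci_high
```

Because the forecast comes from control pages observed over the same days, anything that moves both groups together (seasonality, an algorithm update) cancels out of the comparison; in this sketch a result would count as positive only when `ci_low` is above zero.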

Read more about how SEO testing works or get a demo of the SearchPilot platform.