Start here: how our SEO split tests work

If you aren't familiar with how we run the controlled SEO experiments that form the basis of all our case studies, you might find it useful to read the explanation at the end of this article before digesting the details of the case study below.

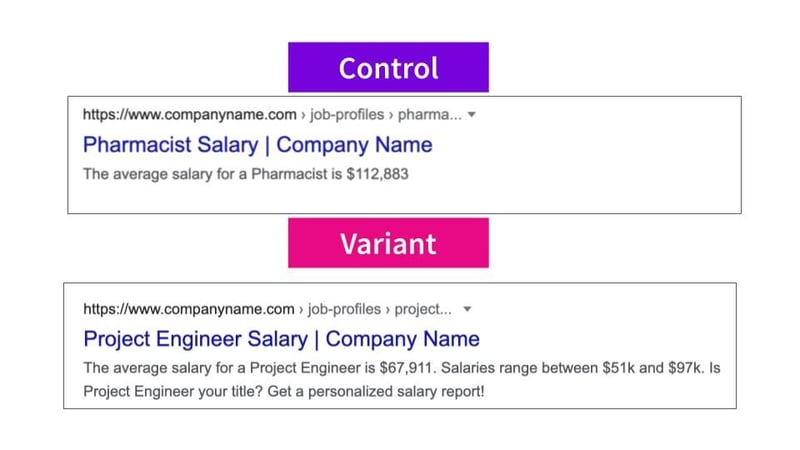

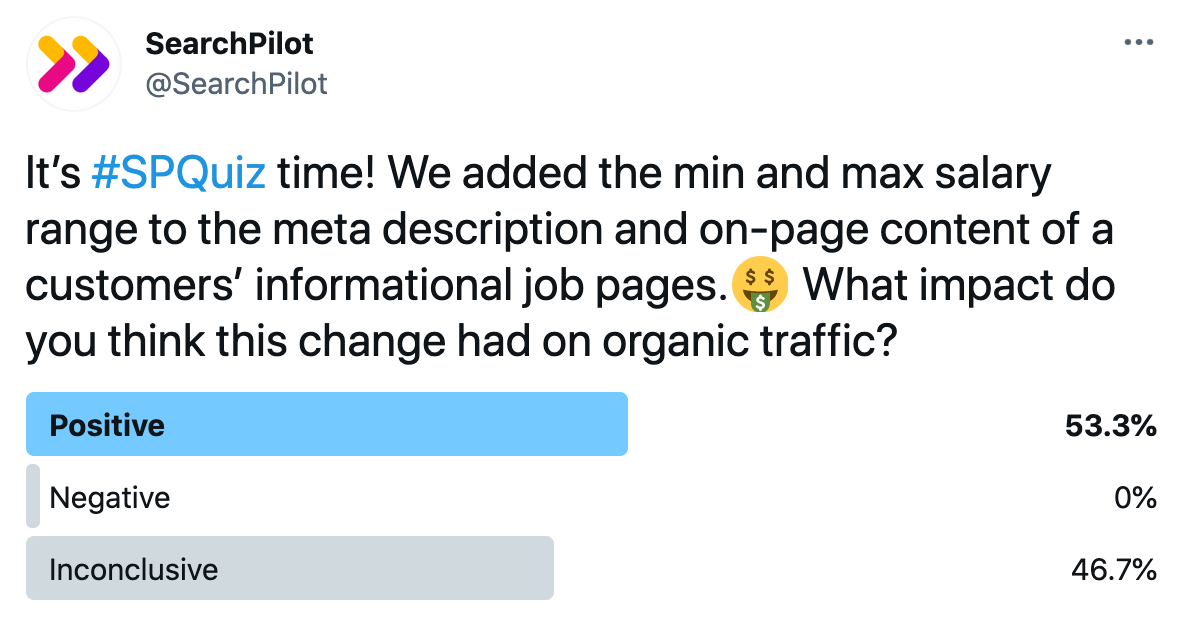

In the latest edition of #SPQuiz, we asked our followers what they thought the SEO impact would be of adding the min-max salary range to the meta descriptions of a customer's informational job pages.

The votes were nearly split between positive and inconclusive, with a slight majority voting positive. None of our followers believed this change would have a negative impact, which means that all of you will be surprised to find out that this change was actually negative! Read on for the full case study:

The Case Study

Google’s intermittent refusal to respect custom meta descriptions has added a new complexity for SEOs trying to write them. Not only do we have to write well-optimised meta descriptions for organic search users, we also have to either steer Google towards them, for example by marking competing on-page text with the data-nosnippet attribute, or come up with a description that Google opts to show in the Search Engine Results Page (SERP).

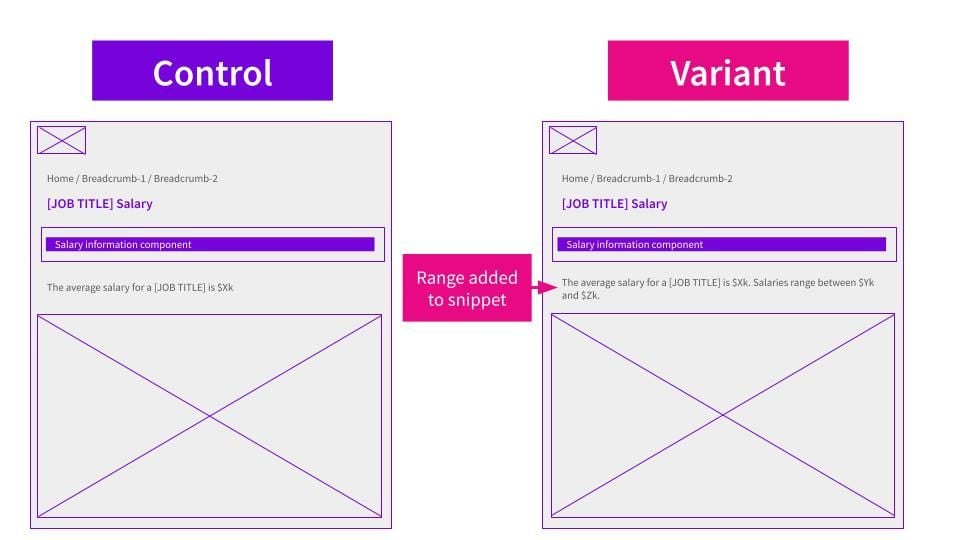

In every instance we had seen, Google was not displaying the existing meta descriptions on one of our customers’ informational job pages in the SERP. Instead, Google was scraping a line of text that our customer had on-page above the fold, reading “The average salary for a [JOB TITLE] is $Xk”, and showing that as the snippet.

We wanted to test adding the min-max salary range to both the meta descriptions and the on-page text that Google was scraping, to see whether we could get Google to show our custom meta descriptions again and whether including the range improved our organic click-through rates.

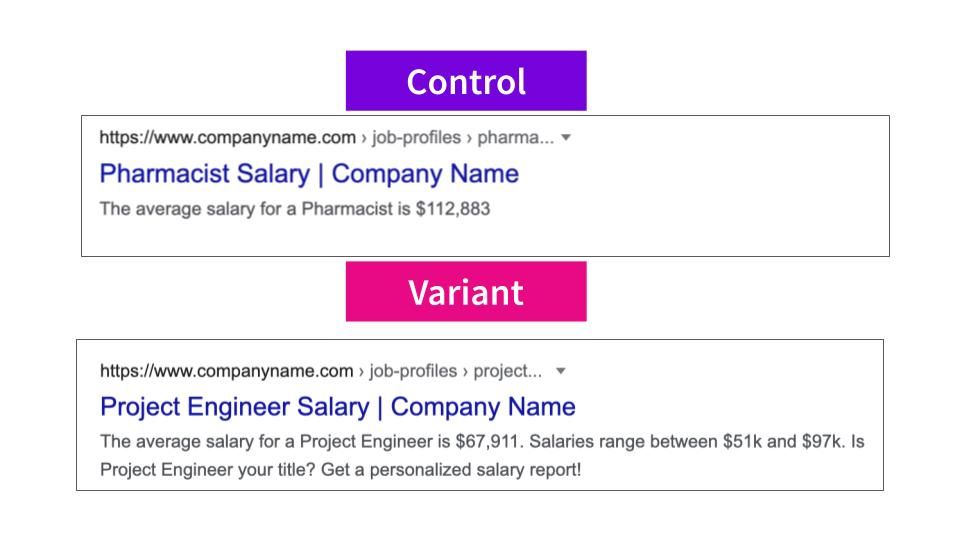

For this experiment, we changed the meta description to “The average salary for a [JOB TITLE] is $Xk. Salaries range between $Yk and $Zk. Is [JOB TITLE] your title? Get a personalized salary report!”.
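As a rough illustration of the template above, the variant description could be generated per page from three salary figures. The function names, the example job title, and the figures are hypothetical and are not taken from the customer's implementation; rounding to whole thousands is also an assumption.

```python
def format_k(amount: int) -> str:
    """Format a dollar amount in whole thousands, e.g. 72000 -> '$72k'."""
    return f"${round(amount / 1000)}k"


def build_meta_description(job_title: str, avg: int, low: int, high: int) -> str:
    """Fill in the variant meta description template quoted in the case study."""
    return (
        f"The average salary for a {job_title} is {format_k(avg)}. "
        f"Salaries range between {format_k(low)} and {format_k(high)}. "
        f"Is {job_title} your title? Get a personalized salary report!"
    )


if __name__ == "__main__":
    # Hypothetical job title and salary figures, for illustration only.
    print(build_meta_description("Data Analyst", 72000, 48000, 95000))
```

Keeping the first two sentences identical to the on-page line makes it easier to check that the meta description and the above-the-fold copy stay in sync.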

We also added “Salaries range between $Yk and $Zk.” as a second sentence to the snippet above the fold. The idea was that updating the line Google was already scraping would make it more likely that the range would appear in the SERP.

Examples of both the on-page change and the change on the SERP are below:

Once the test had launched, we did some discovery to find out whether the new meta description was showing in the SERP. We found it was inconsistent: sometimes Google opted to show the new meta description, and other times it continued to show only the average salary text.

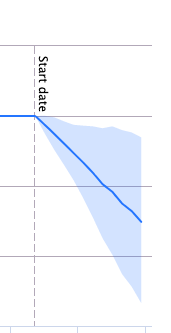

This was the impact on organic traffic:

This test was significantly negative, leading to an estimated 7% decline in organic traffic. The result was surprising, considering that it seemed to be a relatively low-impact change and that the new meta description was not showing in the SERP universally.

We analysed the SERP to theorise why the change may have resulted in such a negative impact. In many examples, competitors did not cite a salary range in the meta description (or at least it did not appear in the SERP), and the bottom end of our range was often lower than the figures competitors showed. It’s possible that users were less inclined to click through to the result when they saw the lower end of a range, especially when comparing it with competitors. Users may be averse to seeing low numbers, even when they are part of a range.

We believe this is more likely to have contributed to the negative result than the additional sentence, “Is [JOB TITLE] your title? Get a personalized salary report!”, that we also added to the meta description.

Because Google did not always respect the meta descriptions, and we could not assess at scale when it did, the results were more challenging to unpick.

However, it would seem that the drop in organic traffic on the pages where the variant meta description was respected was large enough to make the result negative overall. It’s also possible that the extra sentence in our line of copy above the fold contributed to the negative test result, although we believe it was more likely due to the change on the SERP.

This test goes to show just how price sensitive users can be on the SERP, and also serves as a reminder that meta description changes can still negatively impact organic traffic. In this case, even though our updated meta descriptions only appeared intermittently, the impact on organic traffic was still substantial, so they are definitely still an important optimisation to test!

How our SEO split tests work

The most important thing to know is that our case studies are based on controlled experiments with control and variant pages:

- By detecting changes in performance of the variant pages compared to the control, we know that the measured effect was not caused by seasonality, sitewide changes, Google algorithm updates, competitor changes, or any other external factor.

- The statistical analysis compares the actual outcome to a forecast, and comes with a confidence interval so we know how certain we are that the effect is real.

- We measure the impact on organic traffic in order to capture changes to rankings and/or changes to click-through rate.
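To make the idea of comparing the actual outcome to a forecast more concrete, here is a deliberately simplified sketch. It is not the model behind these case studies, which this article does not describe. It assumes that variant traffic would have kept its pre-test ratio to control traffic, and it uses a basic bootstrap for a 95% interval. All names and parameters are hypothetical.

```python
import random
from statistics import mean


def estimate_uplift(pre_control, pre_variant, test_control, test_variant,
                    n_boot=2000, seed=0):
    """Estimate the relative uplift of variant pages against a control-based forecast.

    Returns (point_estimate, lower, upper), where lower and upper bound a
    95% bootstrap interval on the mean daily relative difference.
    """
    # Assumption: without the change, variant traffic would have kept
    # the same ratio to control traffic that it had before the test.
    ratio = sum(pre_variant) / sum(pre_control)
    forecast = [ratio * c for c in test_control]

    # Daily relative difference between actual and forecast variant traffic.
    diffs = [(a - f) / f for a, f in zip(test_variant, forecast)]
    point = mean(diffs)

    # Resample the daily differences with replacement to get an interval.
    rng = random.Random(seed)
    boots = sorted(
        mean(rng.choices(diffs, k=len(diffs))) for _ in range(n_boot)
    )
    lower = boots[int(0.025 * n_boot)]
    upper = boots[int(0.975 * n_boot) - 1]
    return point, lower, upper
```

If the whole interval sits below zero, as it would for a consistently negative test, the sketch would call the result negative; if the interval spans zero, it would call it inconclusive.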

Read more about how SEO testing works or get a demo of the SearchPilot platform.