It’s really common for companies that are pushing towards a more data-aware culture to start asking themselves questions like:

Is there a right answer for when to test and when not to?

Often, we see tension between teams. One becomes keen to test everything, using a lack of test-based evidence or of statistical significance as a weapon or a shield for saying no to things. Another leans more towards “we know this particular initiative is great, we don’t need to test it”.

What’s the right way of thinking about this question? It’s not unique to SEO testing; it comes up wherever testing is possible, from product to conversion rate optimisation. In my opinion, though, the frameworks for thinking it through in your organisation’s context are similar across all these areas. I published a public version of an internal memo I wrote for SearchPilot, entitled ‘we are doing business, not science’, which has some overlap with this question.

In that memo, I was mainly addressing the oft-heard testing philosophy that you should only deploy statistically significant winners of controlled experiments, and should roll back inconclusive tests that don’t confidently show a benefit. That is closely related to the question at hand.

I hope there’s something useful in that post, but the overarching way I recommend thinking about this question is to remember why we are testing: namely, to do better as a business. With that in mind, here is how I’d apply the “business, not science” philosophy to the original question:

We should test to the extent that testing enables us to move faster in the direction we want to go.

In practice, how do you decide when to test something?

What does all this mean in practice? I think it means:

-

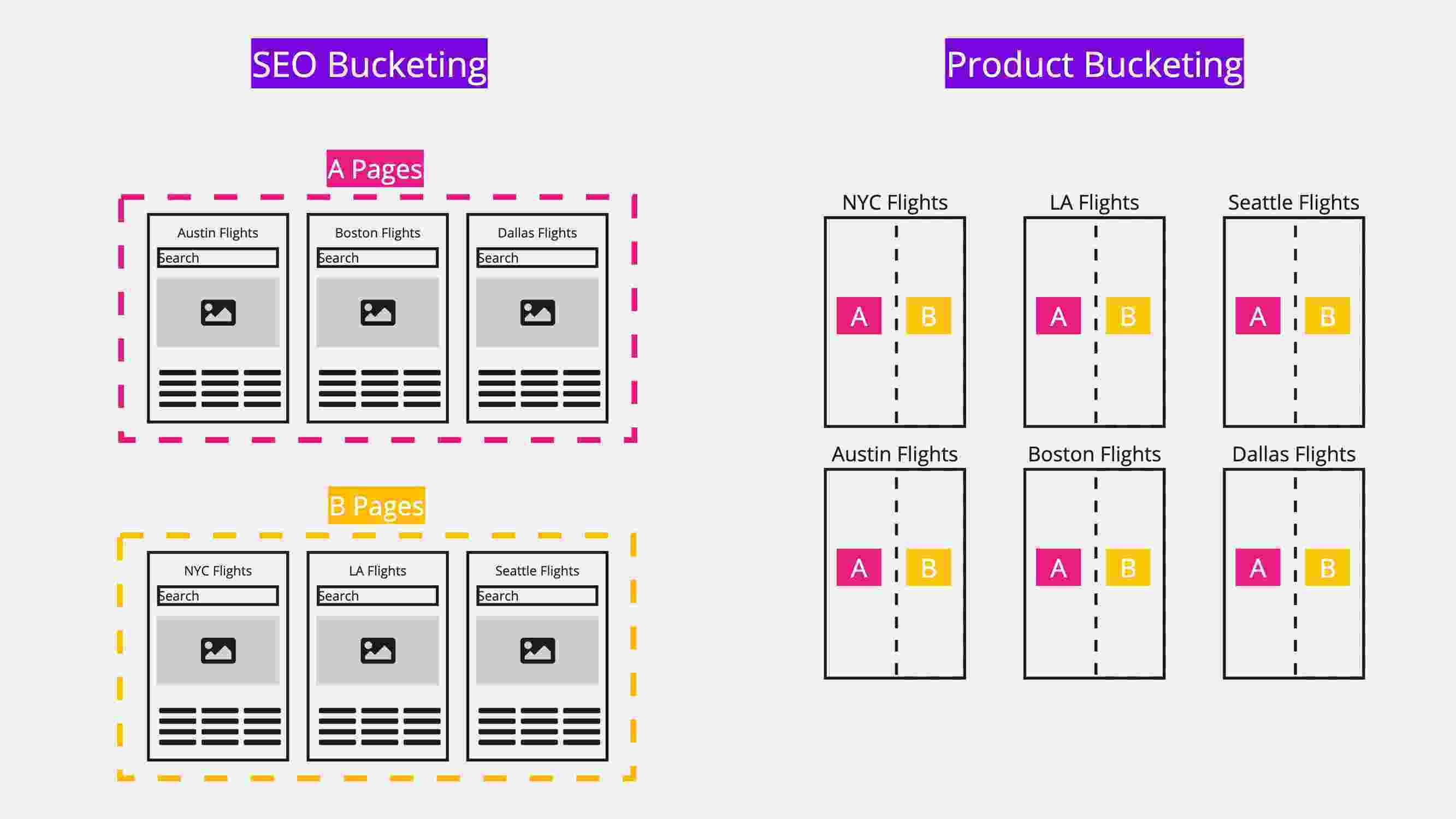

Test things that are likely to have large and unknowable outcomes (note that these could be very good or very bad) - this works because it means that we can safely roll out a greater number of winners while never rolling out the losers [title tag testing is like this, in SEO]

-

Test things that have a maintenance cost even if they have no likely downside - because we only want to roll these out if the benefit outweighs the cost and testing is the best way of understanding the size of that benefit in an uncertain world [structured markup is like this, in SEO]

-

Test things that people are arguing over - if you can go faster by just testing to find out the answer rather than having endless meetings debating different people’s opinion, then speed-to-confidence is a valuable outcome

What can you “just do” without testing?

You can safely “just do” something if it meets three key criteria:

- It is unlikely to have a downside

- It has little maintenance cost

- There is widespread acceptance of its value

Things like speed improvements may fall into this category. The main reason to test these anyway is if you want to be able to say, after the fact, “this work made us this much money”. That can be valuable in making the case for doing more similar work (it doesn’t guarantee that the subsequent work will pay off, but it hints that it might).

IMO, whether to test the rollout of these kinds of changes is more a cultural decision than a business one. It depends on how decisions are made and reviewed, how budget is allocated, and how much evidence you are likely to need after the fact to show that the change met its intended criteria.
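As a rough illustration only, the two sets of criteria above could be written down as a simple checklist. This is a minimal Python sketch, not a SearchPilot tool. The `Change` fields and the `should_test` function are hypothetical, and each field stands in for a judgement call your team would still have to make.

```python
from dataclasses import dataclass


@dataclass
class Change:
    """Hypothetical description of a proposed change.

    Each field is a team judgement call, not a measured quantity.
    """
    large_uncertain_outcome: bool  # could be very good or very bad
    has_maintenance_cost: bool     # ongoing cost even with no likely downside
    disputed: bool                 # people are arguing over whether to do it
    likely_downside: bool          # plausible that it hurts performance
    widely_accepted: bool          # broad agreement that it is valuable


def should_test(change: Change) -> str:
    """Hypothetical helper mapping the criteria in this post to a suggestion."""
    # Any one of the three "test" triggers suggests testing will help us move faster.
    if change.large_uncertain_outcome or change.has_maintenance_cost or change.disputed:
        return "test"
    # Maintenance cost is already ruled out above, so the remaining
    # "just do it" criteria are: no likely downside and widespread acceptance.
    if not change.likely_downside and change.widely_accepted:
        return "just do it"
    # Everything else falls back to the team's own judgement.
    return "judgement call"


if __name__ == "__main__":
    # A speed improvement, judged low-risk, low-maintenance and uncontroversial.
    speed = Change(False, False, False, False, True)
    # A title tag change, where the outcome could swing either way.
    title_tags = Change(True, False, False, True, False)
    print(should_test(speed))       # just do it
    print(should_test(title_tags))  # test
```

The ordering is a deliberate simplification: if any "test" trigger applies, it takes priority over the "just do it" criteria, mirroring the idea that we test whenever testing lets us move faster in the direction we want to go.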

You may not need to test anything with large and certain upside

If you find any of these, let me know!

I’m very interested to hear others’ perspectives and opinions on all of this. Come and discuss it with me on Twitter, where I’m @willcritchlow.