Start here: how our SEO split tests work

If you aren't familiar with how we run the controlled SEO experiments behind all our case studies, you may find it useful to read the explanation at the end of this article before digging into the details of the case study below.

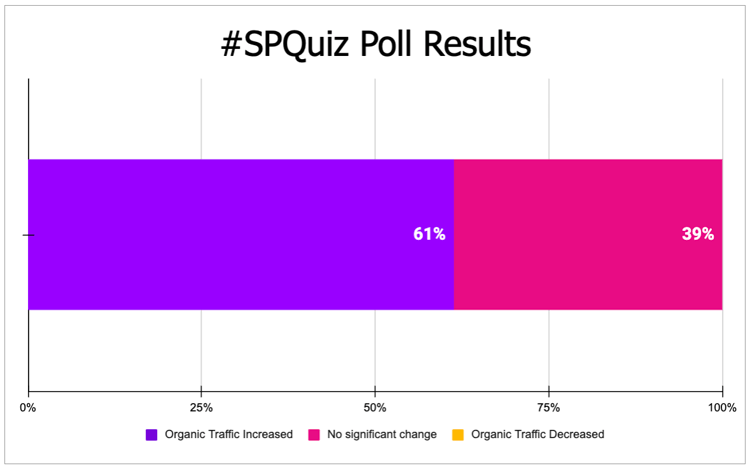

In this week’s #SPQuiz, we asked our followers on LinkedIn and X/Twitter what they thought happened to organic traffic when an ecommerce customer added numerical product ratings next to the star ratings on PDPs.

Poll Results

Most of our followers believed that adding the numerical rating on PDPs would increase traffic, while almost 40% thought it would have no effect whatsoever.

The Case Study

Product Detail Pages (PDPs) are essential for ecommerce SEO. They often act as the final destination for users before they convert, and are a common landing page from organic search results. Small design or content adjustments on PDPs can influence both user engagement and search performance.

We aimed to see whether adding a numerical product rating next to the star rating on PDPs would impact organic performance. The goal was to clarify information for users, highlight the presence of product reviews, and potentially boost click-through rates and engagement signals that search engines may consider for relevance. Additionally, the numerical rating was directly linked to the reviews section of the page, offering users a smoother navigation experience and a quicker way to verify their purchase decisions.

What Was Changed

We added the numerical product rating next to the stars on PDPs; for example, "4.7" was displayed alongside the stars. The numerical rating also linked to the reviews section of the page.

Results

This test was inconclusive at the 95% credible interval, so we cannot say with confidence whether the change had a meaningful effect on organic traffic. In other words, we detected neither measurable harm nor a measurable improvement in SEO performance.
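To make "inconclusive" concrete, here is a minimal sketch of how a result might be labeled from the bounds of its uplift interval. The function name and the example bounds are hypothetical illustrations, not figures from this test or part of our platform: a result only counts as positive or negative when the whole interval sits on one side of zero.

```python
def classify_result(lower: float, upper: float) -> str:
    """Label a test from the bounds of its uplift interval.

    `lower` and `upper` are the interval bounds on relative uplift
    (e.g. -0.03 means -3%). Hypothetical helper for illustration only.
    """
    if lower > upper:
        raise ValueError("lower bound must not exceed upper bound")
    if lower > 0:
        return "positive"      # whole interval above zero
    if upper < 0:
        return "negative"      # whole interval below zero
    return "inconclusive"      # interval contains zero


# Made-up bounds, chosen only to show the three outcomes.
for bounds in [(0.02, 0.09), (-0.08, -0.01), (-0.03, 0.05)]:
    print(bounds, classify_result(*bounds))
```

An interval that spans zero, like the last example, is compatible with a small gain, a small loss, or no effect at all, which is why an inconclusive test cannot be read as proof either way.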

One possible reason for the result is the subtlety of the test. Adding a numerical value next to the star rating, along with a shortcut to the reviews section, primarily improves the user experience. However, it doesn't alter the page content in a way that search engines would consider more valuable for ranking, unlike more significant on-page changes, such as adding structured data, richer content, or updates to keyword relevance.

This test highlights an important lesson for PDP optimization: not every user-facing improvement will directly influence organic rankings. However, the neutral result is still valuable because it suggests that small changes like this are unlikely to harm organic performance. These adjustments can still lead to meaningful increases in engagement or conversion further down the funnel, which is why full-funnel testing matters for understanding the broader impact.

Continuous SEO testing like this helps prevent wasted effort, reduces risk, and ensures that optimizations deliver measurable value, because without search visibility testing, you're flying blind. The "win" is knowing the impact, regardless of whether it's positive, inconclusive, or even negative.

To receive more insights from our testing, sign up for our case study mailing list, and please feel free to get in touch if you want to learn more about this test or our split testing platform more generally.

How our SEO split tests work

The most important thing to know is that our case studies are based on controlled experiments with control and variant pages:

- By detecting changes in performance of the variant pages compared to the control, we know that the measured effect was not caused by seasonality, sitewide changes, Google algorithm updates, competitor changes, or any other external impact.

- The statistical analysis compares the actual outcome to a forecast, and comes with a confidence interval so we know how certain we are the effect is real.

- We measure the impact on organic traffic in order to capture changes to rankings and/or changes to clickthrough rate (more here).
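The idea in the points above can be sketched in a few lines of code. This is a deliberately simplified illustration, not our actual statistical model: it assumes variant traffic would have kept the same ratio to control traffic that it had before the test, and it uses a simple bootstrap percentile interval in place of a full forecasting model. All function names and traffic numbers are hypothetical.

```python
import random
from statistics import mean


def forecast_variant(control_pre, variant_pre, control_post):
    """Forecast variant traffic as if no change had been made.

    Assumption: variant pages keep the same traffic ratio to control
    pages that they had in the pre-test period.
    """
    ratio = sum(variant_pre) / sum(control_pre)
    return [ratio * c for c in control_post]


def uplift_interval(actual, forecast, n_boot=2000, level=0.95, seed=0):
    """Mean relative uplift plus a bootstrap percentile interval."""
    daily = [(a - f) / f for a, f in zip(actual, forecast)]
    rng = random.Random(seed)  # seeded so the sketch is reproducible
    # Resample the daily uplifts with replacement and collect the means.
    means = sorted(
        mean(rng.choices(daily, k=len(daily))) for _ in range(n_boot)
    )
    lo_idx = int((1 - level) / 2 * n_boot)
    hi_idx = int((1 + level) / 2 * n_boot) - 1
    return mean(daily), means[lo_idx], means[hi_idx]


# Made-up daily organic sessions, for illustration only.
control_pre = [100, 110, 90, 100]
variant_pre = [200, 220, 180, 200]
control_post = [100, 120, 80, 105]
variant_post = [204, 236, 165, 208]

forecast = forecast_variant(control_pre, variant_pre, control_post)
estimate, lower, upper = uplift_interval(variant_post, forecast)
print(f"uplift {estimate:+.1%}, interval [{lower:+.1%}, {upper:+.1%}]")
```

Because the forecast is built from the control pages' traffic over the same period, anything that affects both groups equally (seasonality, an algorithm update) moves the forecast too and cancels out of the uplift estimate.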

Read more about how SEO testing works or get a demo of the SearchPilot platform.