Start here: how our SEO split tests work

If you aren't familiar with the fundamentals of how we run the controlled SEO experiments that form the basis of all our case studies, you might find it useful to start by reading the explanation at the end of this article before digesting the details of the case studies below.

It’s been another year of comprehensive and insightful testing! Across some of the world's biggest websites, we tested almost every conceivable change to see its impact on search visibility and, in some cases, CRO as well.

In partnership with our customers, we challenged conventional SEO wisdom, turned some assumptions on their head, and delivered data-driven optimization. In each case, we didn’t just guess; we tested. And sometimes the results surprised us as much as you, our avid readers. Below are five of the most interesting tests we’ve run this year.

5 of the most interesting tests we ran this year

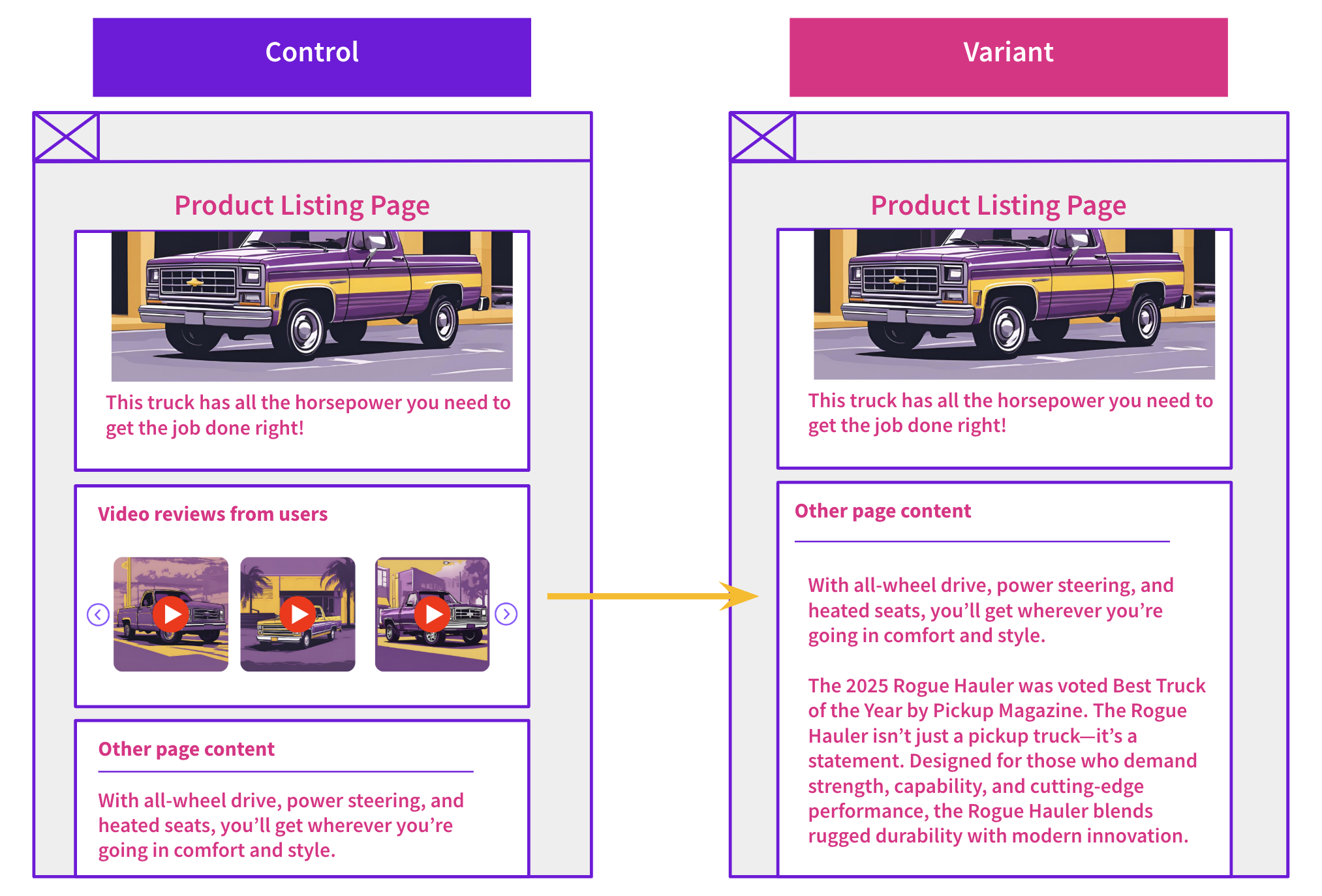

Testing the Value of Expert Video Reviews

On an ecommerce site, a set of product listing pages (PLPs) contained an embedded carousel of “expert video reviews” (YouTube embeds) at the bottom. The test removed that carousel from the variant pages. Surprisingly, removing the videos produced a statistically significant +4.1% uplift in organic traffic on the brand-based PLPs. We then repeated the test on a different template (class-based PLPs), where the result was inconclusive.

The positive result on the brand-based PLPs was a surprise; most poll voters expected that removing the videos would hurt SEO.

These tests suggest that heavy multimedia usage (especially embedded video carousels), while valuable for conversions or UX, can be a drag on performance (page speed, layout shift), which, in turn, can harm SEO. Content that is valuable to users still needs to be delivered efficiently; simply having it on the page is not enough.

While we didn’t test the CRO impact of this change, there is a good chance this content is valuable to users and important to the CRO team. The best way to improve the site overall may therefore be to speed up video loading rather than delete the videos.

For a more in-depth look, check out the full case study

Testing Rearranging the Title Tag

On a healthcare site, our test moved the page’s location (city/clinic name) to the very start of the title tag, rather than after the service name, to make key local information more prominent for users browsing SERPs. The result was a statistically significant +8.5% uplift (95% confidence), potentially driven by a ~0.7% increase in click-through rate (CTR).

This shows how title tag structure and information priority still matter in 2025; a seemingly minor change can have a significant impact. For local or service-oriented SEO, presenting the most relevant information (location) first can improve relevance and CTR, potentially outweighing small ranking fluctuations.

If your pages target local intent, this is a low-effort, high-upside test to run. This also reinforces classic SEO best practice: put your strongest, most relevant keywords early in the title.

Interested in more details? Check out the full case study

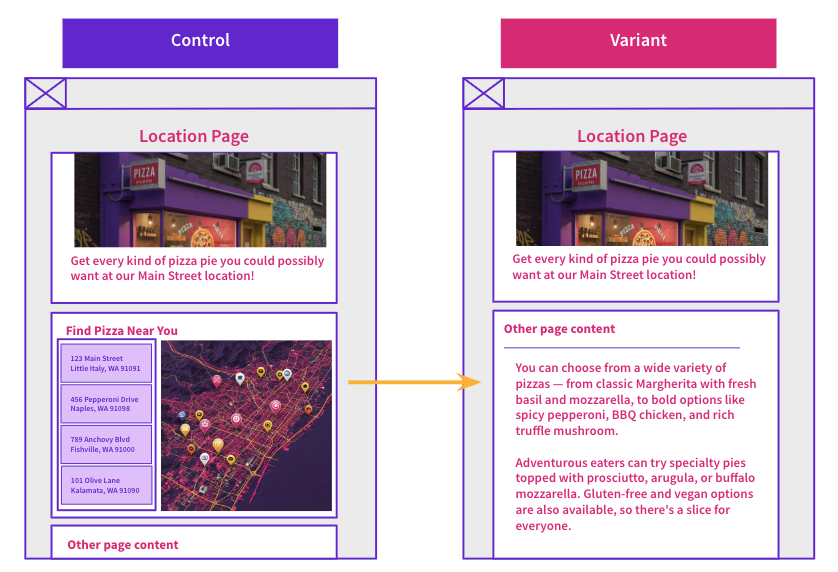

Testing the Value of a Map Component

On a site with many business-location pages, the pages included an embedded map showing not only that location but also nearby locations. The test removed the map entirely to see whether Google better recognized the pages as single-location pages (rather than multi-location “listing” pages). The outcome was a statistically significant negative result with a 7% drop in organic traffic.

This was a particularly interesting result for our customer because the hypothesis was that removing the map would clarify this page template’s intent to Google. A negative result suggests a considerable disparity between what Google thought the page was about and what the company felt it was about. This informed a whole testing series, making multiple types of changes to reposition the purpose of these pages in Google’s eyes.

Check out the full case study for a closer look!

Testing All-Caps Title Tags

On listing pages in the travel industry, the title tags were rewritten in full uppercase to see whether the visual difference in SERPs would draw more attention and clicks. When we polled you, many of you were skeptical, expecting a negative or inconclusive result, and in one respect you were right.

On desktop, there was no statistically significant change. On mobile, however, the test saw a +14% increase in organic traffic. Again, a relatively small change had a noteworthy impact, adding another example of the attention the mobile experience deserves.

This test brought to our attention a relatively overlooked aspect of SEO: how users interact with SERPs differently on mobile versus desktop. Too often, we focus only on the on-page mobile experience.

Interested in more? Check out the full case study

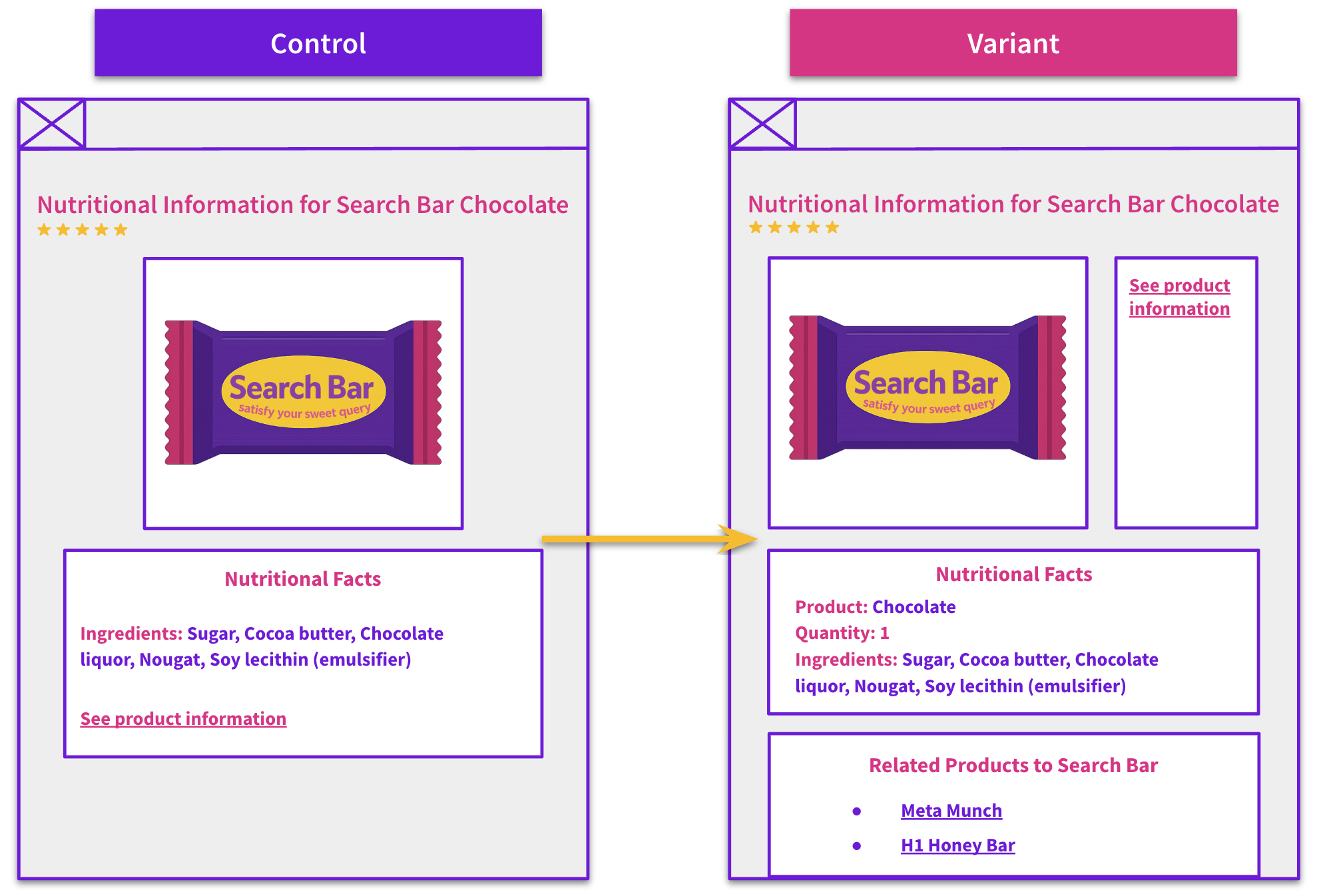

Testing Enriched Page Content

In this test, the team enriched an ecommerce brand's nutrition information pages, which had historically had thin content. Adding structured detail such as specification tables and related products, along with greater depth, allowed us to test whether it was worth keeping these pages separate from the PDPs they referenced.

This test was one of our biggest winners of the year, with nearly a 20% uplift in organic traffic. Clear and helpful content is, of course, one of the central tenets of search engine optimization, which may be why 100% of our poll respondents predicted this outcome.

This reinforces a core SEO truth: depth, relevance, and content richness still matter, especially for informational pages. For sites with thin page content, investing in high-quality content is certainly worth testing, if for no other reason than to prove the value of the effort required to generate it.

For an inside look, check out the full case study

Looking Forward to 2026

The few experiments discussed here are just the tip of the iceberg. In 2025 alone, we ran hundreds upon hundreds of tests for our customers, gleaning insights from each one. And with more and more metrics and data streams being incorporated into the platform, those insights grow more robust with each passing day.

As we look ahead to the coming year and the hypotheses we will explore with our customers, we will hold true to our values and continue to prove the value of SEO to the world’s biggest websites in the only way we know how: by testing it.

To receive more insights from our testing, sign up for our case study mailing list, and please feel free to get in touch if you want to learn more about these tests or our split testing platform more generally.

How our SEO split tests work

The most important thing to know is that our case studies are based on controlled experiments with control and variant pages:

- By detecting changes in performance of the variant pages compared to the control, we know that the measured effect was not caused by seasonality, sitewide changes, Google algorithm updates, competitor changes, or any other external impact.

- The statistical analysis compares the actual outcome to a forecast, and comes with a confidence interval so we know how certain we are the effect is real.

- We measure the impact on organic traffic in order to capture changes to rankings and/or changes to clickthrough rate (more here).
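
To make the idea of comparing an actual outcome to a forecast more concrete, here is a minimal sketch in Python. It is a simplified illustration and not the model our platform uses: it assumes the forecast is simply the control traffic scaled by the pre-test ratio of variant to control traffic, and it uses a basic bootstrap over days to get an approximate 95% interval. The function name `estimate_uplift` and all of the numbers are hypothetical.

```python
import random


def estimate_uplift(control_pre, variant_pre, control_post, variant_post,
                    n_boot=2000, seed=0):
    """Return (point_estimate, lower, upper) for the relative uplift.

    Assumption: with no change, variant traffic would keep the same
    ratio to control traffic that it had before the test started.
    """
    if len(control_post) != len(variant_post):
        raise ValueError("post-period series must have the same length")

    # Pre-test relationship between the two groups of pages.
    ratio = sum(variant_pre) / sum(control_pre)

    # Forecast: what the variant pages would have received without the change.
    forecast = [ratio * c for c in control_post]

    # Point estimate: actual variant traffic relative to the forecast.
    point = sum(variant_post) / sum(forecast) - 1

    # Bootstrap over days to approximate a 95% interval for the uplift.
    rng = random.Random(seed)
    n = len(variant_post)
    samples = []
    for _ in range(n_boot):
        idx = [rng.randrange(n) for _ in range(n)]
        actual = sum(variant_post[i] for i in idx)
        expected = sum(forecast[i] for i in idx)
        samples.append(actual / expected - 1)
    samples.sort()
    lower = samples[int(0.025 * n_boot)]
    upper = samples[int(0.975 * n_boot) - 1]
    return point, lower, upper


if __name__ == "__main__":
    # Hypothetical daily organic sessions, for illustration only.
    control_pre = [1000, 1100, 900, 1050]
    variant_pre = [500, 550, 450, 525]
    control_post = [1000, 1200, 950, 1100, 1050, 980]
    variant_post = [525, 630, 499, 578, 551, 515]

    point, lower, upper = estimate_uplift(
        control_pre, variant_pre, control_post, variant_post
    )
    print(f"uplift: {point:+.1%} (95% interval {lower:+.1%} to {upper:+.1%})")
```

If the interval sits entirely above zero, the sketch would call the result a positive uplift; if it spans zero, the result would be inconclusive. Because both groups are exposed to the same seasonality and algorithm updates, those effects cancel out in the ratio, which is the point of having a control group.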

Read more about how SEO testing works or get a demo of the SearchPilot platform.