Start here: how our SEO split tests work

If you aren't familiar with how we run the controlled SEO experiments behind all our case studies, you might find it useful to read the explanation at the end of this article before digging into the case study below. If you'd like to get a new case study by email every two weeks, just enter your email address here.

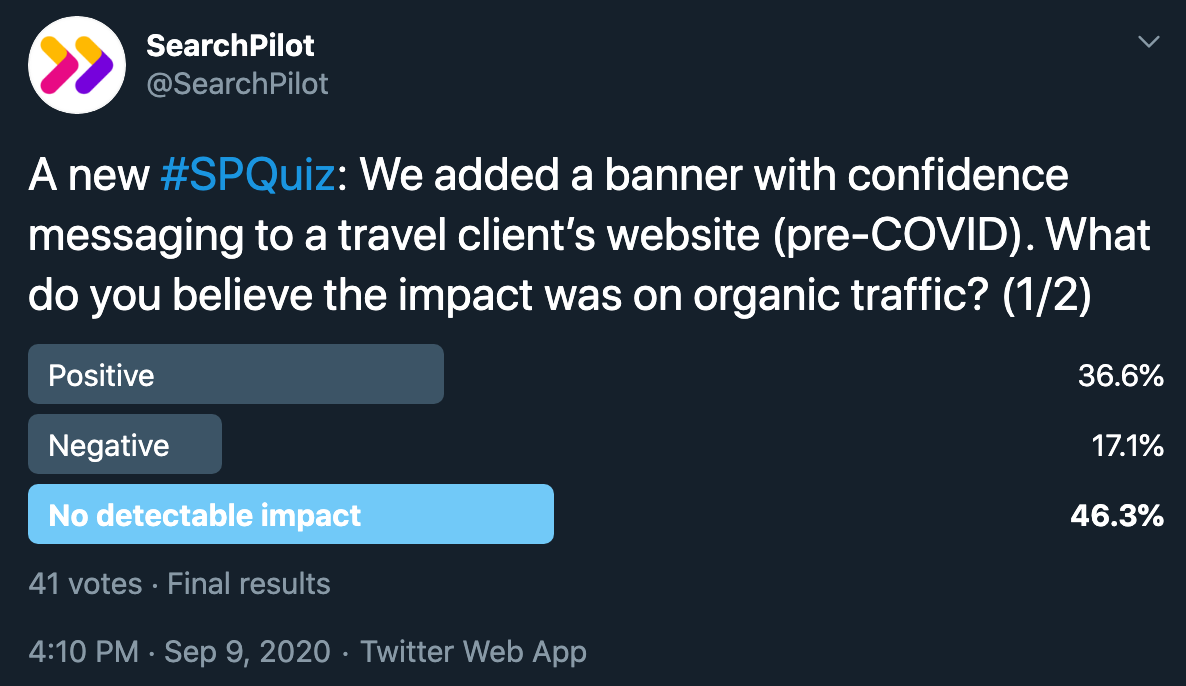

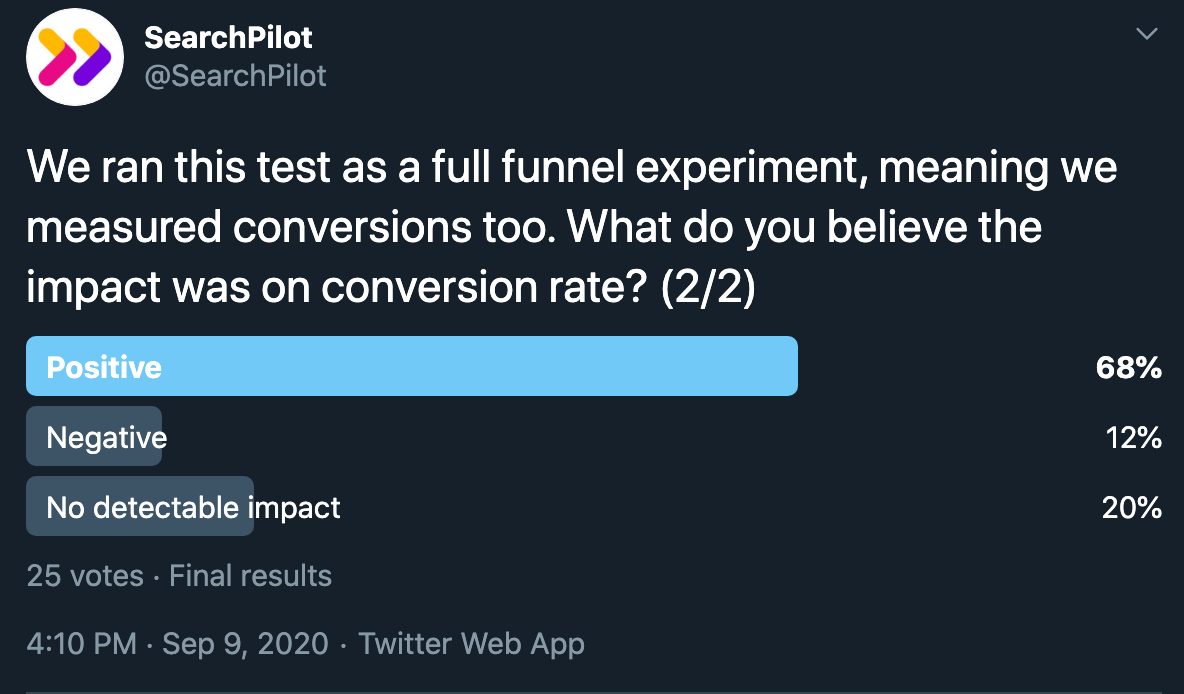

This week we asked our Twitter followers what they thought happened when we added a confidence banner to a travel client’s website (this test was run pre-COVID). The test was a full funnel experiment, so we measured the impact on user metrics (conversion rate and bounce rate) and on organic traffic.

The consensus from our followers was that this experiment had no detectable impact on organic traffic and was positive for conversion rate. This was partly correct - this test was positive for both organic traffic and conversion rate. Read below for the full case study:

The Case Study

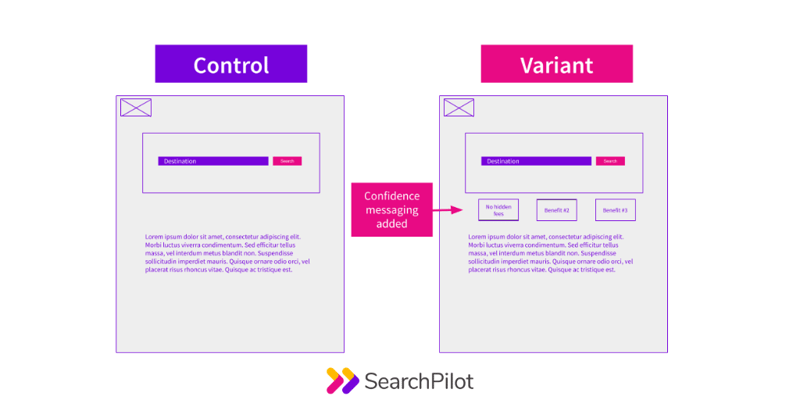

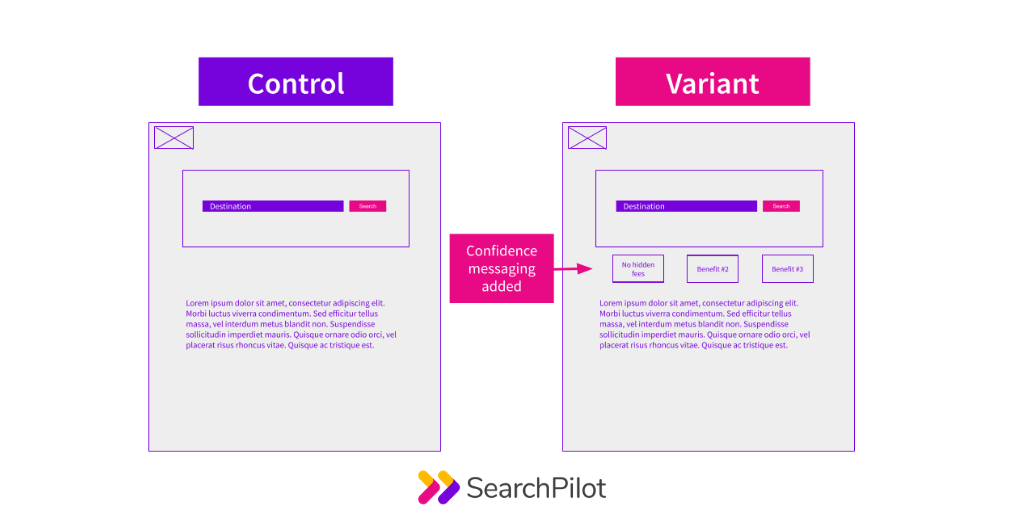

Can trust signals impact SEO performance? That’s what our client set out to answer with this test. Our client tested adding confidence messaging below their search bar and measured the impact on user metrics (conversion rate and bounce rate) at the same time as measuring the impact on organic traffic. They tested this on three different domains: Spain, Russia and France.

In our previous case study, testing page layout changes, we shared a test where moving the search widget on a travel client’s page had a negative impact on organic traffic. It is one of many case studies that we believe support the increasing importance of user signals for rankings.

In addition to user signals, many in the industry argue that the August 2018 core algorithm update placed further importance on E-A-T (Expertise, Authoritativeness and Trustworthiness), especially for Your Money or Your Life (YMYL) websites. We can break down the E-A-T abbreviation as follows:

- Expertise - Content creators and editors need to be proficient and knowledgeable in a specific area, and also need to convey valuable information in a way that is easy to understand and matches users’ search intent

- Authoritativeness - Being linked from other relevant, authoritative sites strengthens the authority of your site, as do consistent shares across social media

- Trustworthiness - A lack of trust in a website can lead to lower rankings. Attributes such as reviews, transparency in website policies, and proper link citations can help build a site’s trustworthiness

Google shares in its webmaster blog that search quality raters are trained to understand these guidelines, and use them to judge web content accordingly. The webmaster post also links to useful resources if you’re seeking to improve your content.

As our clients all fall within the YMYL category, E-A-T presumably holds a significant level of importance to their organic performance. A travel client of ours noticed that their competitors had all begun to implement confidence banners above the fold on their key landing pages.

Our client did not have any similar confidence messaging, despite having attractive unique selling points (USPs) like not charging fees, offering competitive pricing, and providing a range of custom filter options to tailor searches to each user’s needs.

These USPs were not prominently advertised anywhere on their website, so following the example of their competitors, we tested adding a banner with some key messaging below their hero image.

Note that this test was conducted in January 2020, before the COVID-19 pandemic impacted the travel industry, but we have since updated the banners to include relevant features, such as flexible booking options.

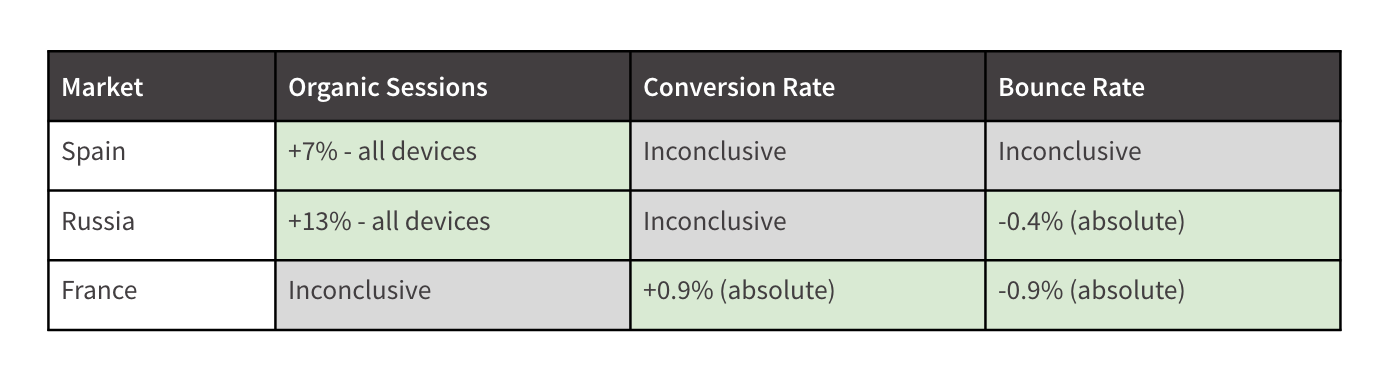

This test was run as a full funnel experiment, meaning we also measured conversions and bounce rate. These are the results for all of our metrics across the three domains:

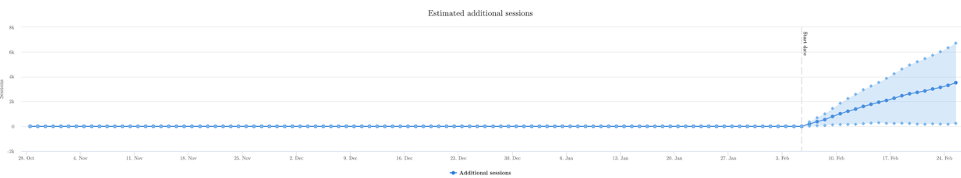

This was the result graph for organic traffic in Spain:

In addition to improving organic traffic by between 7% and 13%, this test led to an increase in conversion rate of just under one percentage point in France, and a decline in bounce rate of between 0.4 and 0.9 percentage points in both Russia and France.

Looks like the banner was a success! This test provides further evidence to support the increasing importance of user signals, and suggests that updating your content to improve E-A-T signals can lead to good results.

The great thing about this test was that it was a low-effort change with a high-yield effect. Being able to measure the impact of the change made it easy for our client to prioritize it in their dev queue and push the content out quickly. It’s also worth noting that we have the capability to push out changes via our meta CMS functionality.

This test is also a great example of why it’s important to run tests as full funnel experiments. If this test had been run using only a traditional A/B testing process, measuring just the impact on users, the team would have declared these experiments inconclusive in Spain and Russia, and missed out on the extra benefits to organic traffic.
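To make the full funnel point concrete, here is a minimal sketch of the arithmetic, assuming organic conversions are simply organic sessions multiplied by conversion rate. The function name and the 3% baseline conversion rate are hypothetical and chosen only for illustration; the 7% traffic uplift is the lower end of the range reported above.

```python
def full_funnel_change(traffic_uplift, cr_before, cr_change_pp=0.0):
    """Relative change in organic conversions.

    traffic_uplift: relative change in organic sessions (0.07 means +7%).
    cr_before:      baseline conversion rate as a fraction (hypothetical here).
    cr_change_pp:   change in conversion rate, in percentage points.
    """
    cr_after = cr_before + cr_change_pp / 100
    # conversions = sessions * conversion rate, so the relative changes multiply
    return (1 + traffic_uplift) * (cr_after / cr_before) - 1


# Hypothetical baseline: a 3% conversion rate that the test leaves unchanged.
flat_cr_change = full_funnel_change(traffic_uplift=0.07, cr_before=0.03)
print(f"Change in organic conversions: {flat_cr_change:+.1%}")
```

Under these assumptions, a test that looks inconclusive on conversion rate alone still delivers more conversions, because the extra organic sessions convert at the unchanged rate.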

To receive more insights from our testing, keep an eye on your inbox, and please feel free to get in touch if you want to learn more about this test or about our split testing platform more generally.

Thanks!

SearchPilot

How our SEO split tests work

The most important thing to know is that our case studies are based on controlled experiments with control and variant pages:

- By detecting changes in performance of the variant pages compared to the control, we know that the measured effect was not caused by seasonality, sitewide changes, Google algorithm updates, competitor changes, or any other external impact.

- The statistical analysis compares the actual outcome to a forecast, and comes with a confidence interval so we know how certain we can be that the effect is real.

- We measure the impact on organic traffic in order to capture changes to rankings and/or changes to clickthrough rate (more here).
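As a rough illustration of the points above, the sketch below forecasts variant traffic from control traffic and puts an interval around the measured uplift. It is a deliberately simplified stand-in, not the model SearchPilot uses: the ratio-based forecast, the bootstrap interval, the function names, and the daily session counts are all assumptions made up for the example.

```python
import random
import statistics


def forecast_variant(control_pre, variant_pre, control_test):
    """Forecast variant sessions from control sessions, scaled by the pre-test ratio."""
    ratio = sum(variant_pre) / sum(control_pre)
    return [ratio * c for c in control_test]


def uplift_with_interval(actual, forecast, n_resamples=2000, level=0.95, seed=0):
    """Mean relative uplift of actual over forecast, with a bootstrap interval."""
    daily = [a / f - 1.0 for a, f in zip(actual, forecast)]
    rng = random.Random(seed)  # fixed seed so the sketch is repeatable
    means = sorted(
        statistics.fmean(rng.choices(daily, k=len(daily)))
        for _ in range(n_resamples)
    )
    lower = means[int((1 - level) / 2 * n_resamples)]
    upper = means[int((1 + level) / 2 * n_resamples) - 1]
    return statistics.fmean(daily), lower, upper


# Made-up daily organic sessions, for illustration only.
control_pre = [1000, 1020, 980, 1010, 990, 1005, 995]
variant_pre = [500, 510, 490, 505, 495, 502, 498]
control_test = [1100, 1080, 1120, 1090, 1110, 1105, 1095]
variant_test = [605, 590, 620, 600, 612, 606, 603]

forecast = forecast_variant(control_pre, variant_pre, control_test)
uplift, lower, upper = uplift_with_interval(variant_test, forecast)
print(f"Uplift: {uplift:+.1%} (95% interval {lower:+.1%} to {upper:+.1%})")
```

Because the forecast is built from the control pages over the same dates, anything that hits both groups equally (seasonality, an algorithm update) moves the forecast as well and cancels out of the uplift. If the interval excludes zero, the effect is unlikely to be noise.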

Read more about how SEO testing works or get a demo of the SearchPilot platform.