Full funnel testing lets you measure the impact of a website change on both SEO and conversion rate metrics in a single test. This lets you determine the overall impact on the most important metric, total revenue, taking into account both the top of the funnel (how many people are coming to your website) and progress through the funnel (what they do once they arrive).

At SearchPilot we’re constantly working to improve our testing methodology, including how we run full funnel tests. With this in mind, we have recently rolled out a major overhaul of how our full funnel tests work, which has allowed us to make the following improvements:

- Speeding up testing to run more tests in a given time period

- Removing any potential sources of external bias

- Integrating our preferred Bayesian analysis for the conversion rate optimisation (CRO) portion of tests

- Displaying the combined results in a new dashboard

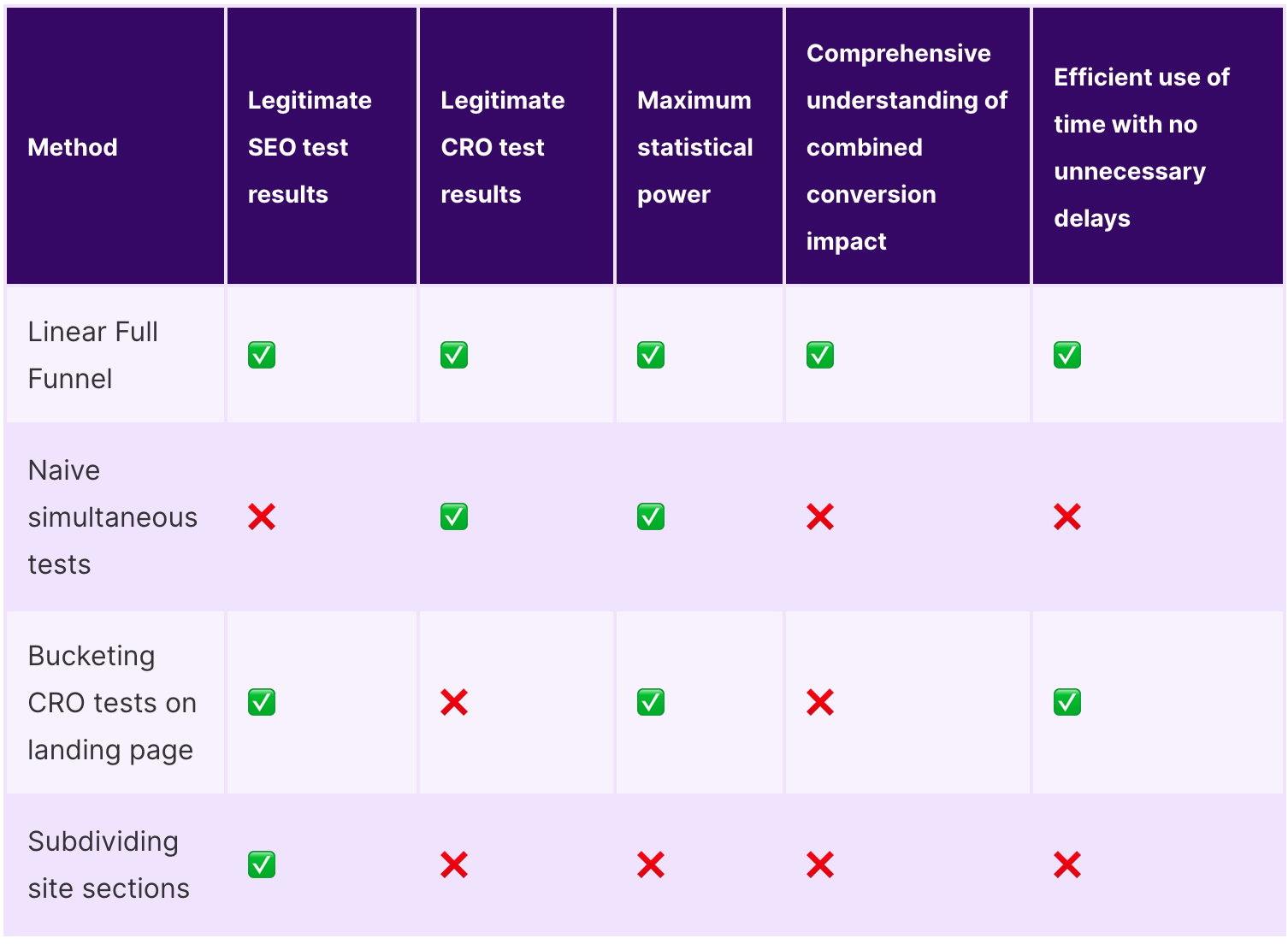

Before we get into the specifics of how our new full funnel methodology works, it’s worth understanding that this is a hard problem to solve. Any straightforward way of combining CRO and SEO testing has downsides, primarily because SEO and CRO tests bucket traffic in fundamentally different ways. As a reminder, SEO tests split a section of a website into buckets on a page-by-page basis, while CRO tests split users. More details can be found in this post.
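To make the difference in the unit of randomisation concrete, here is a minimal Python sketch. It assumes a simple hash-based 50/50 split; the function names and salts are hypothetical, and this is not how SearchPilot assigns buckets in practice (a real implementation would likely need more care to keep the groups comparable).

```python
import hashlib


def assign_bucket(key: str, salt: str) -> str:
    """Deterministically map a key to 'control' or 'variant' (a 50/50 hash split)."""
    digest = hashlib.sha256(f"{salt}:{key}".encode("utf-8")).hexdigest()
    return "variant" if int(digest, 16) % 2 == 1 else "control"


def seo_bucket(page_path: str) -> str:
    # SEO test: the unit of randomisation is the page. Every visitor,
    # including search engine crawlers, sees the same version of a given URL.
    return assign_bucket(page_path, salt="seo-test")


def cro_bucket(user_id: str) -> str:
    # CRO test: the unit of randomisation is the user. A given visitor sees
    # the same version on every page they view, whichever pages those are.
    return assign_bucket(user_id, salt="cro-test")
```

A single visitor browsing several pages can therefore fall into different SEO buckets from page to page while staying in one CRO bucket, which is the mismatch any combined approach has to deal with.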

What approaches have we considered?

Here are a few of the approaches one might consider, and why each of them falls short. If you’d rather skip ahead to the methodology we’ve developed, jump to “Our new approach” below.

Naive simultaneous tests

Because SEO tests and CRO tests bucket differently, running an SEO test and a CRO test of the same change at the same time on one group of pages would make the results of both tests unreliable. This is because user behaviour and SEO performance influence each other.

The main way this plays out is when the variant experience from the CRO test leads to better or worse user signals for Google, especially if that impact falls disproportionately on a particular set of pages. If the SEO hypothesis of the test was closely related to how user signals affect rankings, this would cause the difference between control and variant pages measured in the SEO test to be under-reported. In other words, the CRO test would pollute the SEO test result.

The other downside of this approach is that, even if one half of the test (SEO or CRO) has gathered enough data to reach a conclusion, you would still have to wait for the other half to gather enough data before you could end the test.

Bucketing CRO tests based on landing page

One way to counteract that ‘pollution’ could be to bucket users for the CRO test based on the URL they first land on. This would mean that all users landing on a variant page from the SEO test would be bucketed as variant users for the purpose of the CRO test, and receive a consistent user experience from that point on. This was the original way that SearchPilot ran full funnel tests many years ago.
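A minimal sketch of landing-page bucketing, assuming the SEO bucket of each page is already known. The function name, the in-memory `assignments` dict, and the rule that users landing outside the tested section count as control are all illustrative assumptions, not SearchPilot’s implementation.

```python
from typing import Dict


def assign_by_landing_page(
    user_id: str,
    landing_page: str,
    seo_buckets: Dict[str, str],
    assignments: Dict[str, str],
) -> str:
    """Give a user the SEO bucket of the first page they land on, then keep it."""
    if user_id not in assignments:
        # Assumption: users landing outside the tested section are treated as control.
        assignments[user_id] = seo_buckets.get(landing_page, "control")
    # Later page views reuse the stored bucket, so the experience stays consistent.
    return assignments[user_id]
```

Because the CRO bucket is inherited from the landing page, any difference in how those pages naturally convert carries straight through into the CRO comparison.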

This did help with the issues of naive simultaneous testing: any user effects could be attributed to the landing page a user saw, so any SEO impact of those user effects would be a valid part of the SEO result. However, it introduced other biases. Not all landing pages are equal in terms of conversion rate or other user metrics, so bucketing a CRO test by page made its results unreliable. For example, if the variant bucket happened to contain more pages that naturally convert well, variant users would appear to convert better even if the change itself had no effect.

One approach to overcome the underlying bias introduced by bucketing users based on landing pages would be to attempt to measure that bias in a period when the test isn’t running (known as the baseline period), and subtract that underlying bias from the observed result during the test. In theory, this would then give the true CRO impact of the test.
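In its simplest form, this baseline correction is a difference-in-differences calculation. The sketch below works on conversion rates and subtracts the gap seen in the baseline period from the gap seen during the test; the function name and the figures in the usage note are hypothetical.

```python
def baseline_adjusted_difference(
    test_control_cr: float,
    test_variant_cr: float,
    baseline_control_cr: float,
    baseline_variant_cr: float,
) -> float:
    """Estimate the CRO effect by removing the pre-existing gap between page buckets."""
    # Gap between variant and control pages while the test is running.
    observed_gap = test_variant_cr - test_control_cr
    # Gap between the same two groups of pages before the change was deployed.
    baseline_gap = baseline_variant_cr - baseline_control_cr
    # Assumes the baseline gap would have persisted unchanged during the test.
    return observed_gap - baseline_gap
```

For example, if variant pages converted at 2.5% against 2.0% for control pages in the baseline period, and at 2.7% against 2.0% during the test, the adjusted effect is 0.2 percentage points rather than the naive 0.7. The assumption in the final comment is the weak point: the estimate is only valid if the baseline gap really would have stayed constant.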

This was, until recently, SearchPilot’s recommended methodology. However, it introduced other, subtler forms of bias. For example, conditions during the baseline period would have to match those during the test period for the bias estimate to hold. This is very rarely the case, as individual pages may peak in popularity at different times, and pricing changes or other promotions can also shift conversion rates. The only way to run a truly unbiased CRO test is to bucket users randomly, as is CRO best practice.

Subdividing site sections

The other approach that we considered (but rejected) was to subdivide a site section and run simultaneous SEO and CRO tests, one in each subsection.

This approach has a few downsides.

It requires the site section being tested to have a very large number of pages and a lot of traffic. For SEO testing, our rule of thumb is that we need over 100 pages with a combined total of at least 1,000 organic sessions per day to run an effective test. Splitting a site section in half effectively doubles those minimums, which could rule out many site sections on many websites.
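The effect of splitting on those rule-of-thumb minimums is simple arithmetic. This sketch checks whether each part of a site section would still meet them; the constants come from the figures in the paragraph above, while treating both as inclusive minimums and the function name are assumptions.

```python
MIN_PAGES = 100
MIN_DAILY_ORGANIC_SESSIONS = 1_000


def meets_seo_minimums(pages: int, daily_organic_sessions: float, parts: int = 1) -> bool:
    """Check whether each of `parts` equal subsections still meets the rule of thumb."""
    pages_per_part = pages / parts
    sessions_per_part = daily_organic_sessions / parts
    return pages_per_part >= MIN_PAGES and sessions_per_part >= MIN_DAILY_ORGANIC_SESSIONS
```

A section with 150 pages and 1,500 organic sessions per day passes as a single test (`meets_seo_minimums(150, 1500)` is `True`) but fails once halved (`meets_seo_minimums(150, 1500, parts=2)` is `False`), because each half would have only 75 pages and 750 sessions per day.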

The user experience during the test would also be compromised, casting doubt on the CRO test’s results. If a user is bucketed as a variant user in the CRO test but navigates to a control page within the SEO test, they would see an inconsistent version of the page. That raises a problem if they go on to convert: should the conversion be attributed to the variant bucket in the CRO analysis?

The final downside of splitting site sections is that it would force the SEO and CRO tests to run for the same duration, which may not be optimal. In general, SEO tests take slightly longer to run than CRO tests. Ideally we should be able to end a test of either kind as soon as we have sufficient data to make an informed decision, rather than having to wait.

Our new approach

With all of the above considerations in mind, the best approach that we have settled on is what we are calling linear full funnel testing. This consists of running a CRO test followed by an SEO test on the same set of pages.

This approach gives us a “pure” CRO test with none of the compromises mentioned above, so its results are free of interference from the SEO test. We assess CRO tests using Bayesian analysis, which means we can end the CRO test as soon as we have enough data to be confident of the result. At that point, we transition to the SEO portion of the test.
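The post doesn’t spell out SearchPilot’s Bayesian model, so the sketch below uses a common textbook approach as a stand-in: a Beta-Binomial model with uniform Beta(1, 1) priors, estimating by Monte Carlo the probability that the variant’s conversion rate beats control’s. The 95% stopping threshold and the function names are hypothetical.

```python
import random


def prob_variant_beats_control(
    conv_c: int, n_c: int, conv_v: int, n_v: int, samples: int = 20_000, seed: int = 0
) -> float:
    """Monte Carlo estimate of P(variant CR > control CR) under Beta(1, 1) priors."""
    rng = random.Random(seed)
    wins = 0
    for _ in range(samples):
        # Draw each arm's conversion rate from its Beta posterior.
        p_c = rng.betavariate(1 + conv_c, 1 + n_c - conv_c)
        p_v = rng.betavariate(1 + conv_v, 1 + n_v - conv_v)
        if p_v > p_c:
            wins += 1
    return wins / samples


def should_stop(prob: float, threshold: float = 0.95) -> bool:
    """Stop once the evidence is strong in either direction (hypothetical rule)."""
    return prob >= threshold or prob <= 1 - threshold
```

In practice a stopping rule would usually also consider effect size and a minimum sample; this only shows the core calculation that makes early stopping possible.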

[Figure: Linear Full Funnel Testing]

The SEO test is run as normal, and we can end it when we have enough data to understand the impact of the change on organic traffic.

During this period, we also start to monitor the combined effect of the change on SEO and CRO using our full funnel analysis dashboard. This gives us insight into whether the change is beneficial for traffic, for user conversions, or for both, and lets us assess the net impact when the CRO and SEO results point in different directions.

The analysis weighs the conversion impact, measured across all traffic channels, against the traffic impact, which applies only to the organic search channel. Our analysis dashboard combines these factors to calculate the net impact on total conversions.
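The dashboard’s exact model isn’t described here, so the following is a hypothetical simplification of the idea: the CRO uplift is applied to conversion rates on every channel, while the SEO uplift changes only the number of organic sessions. The function name, parameters, and the assumption that extra organic sessions convert at the organic rate are all illustrative.

```python
def net_conversion_change(
    organic_sessions: float,
    other_sessions: float,
    organic_cr: float,
    other_cr: float,
    seo_uplift: float,
    cro_uplift: float,
) -> float:
    """Relative change in total conversions from combined SEO and CRO effects."""
    before = organic_sessions * organic_cr + other_sessions * other_cr
    # SEO effect: only organic session volume changes.
    organic_after = organic_sessions * (1 + seo_uplift) * organic_cr * (1 + cro_uplift)
    # CRO effect: conversion rate changes on every channel.
    other_after = other_sessions * other_cr * (1 + cro_uplift)
    return (organic_after + other_after) / before - 1
```

For example, with 10,000 organic and 10,000 other sessions both converting at 2%, a 5% organic traffic loss combined with a 3% conversion rate gain gives `net_conversion_change(10_000, 10_000, 0.02, 0.02, -0.05, 0.03)` of about +0.425%, so the change is a small net win despite hurting SEO.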

This sequence of events lines up neatly with our SEO testing methodology. We generally recommend a 1-2 week “cooldown” window between SEO tests so that changes can be indexed by Google before the next test is launched. This is normally about as long as a CRO test needs, so running the CRO test in that window does not slow down testing at all, and it adds a whole level of insight into user behaviour that we otherwise would not have.

Interested to see it in action?

If this post has piqued your curiosity and you’d like a live walkthrough of how this works on our platform, drop us a line.