I’m often asked to contribute to articles predicting the future of SEO. Over the years I’ve predicted all kinds of things (including the importance of “mobile only” thinking and my view that natural language processing will be far more important than voice search), but I’ve recently come to realise that I can summarise all the trends by saying that the future of SEO looks more like CRO (Conversion Rate Optimisation).

This is true in two ways:

- Big picture: a lot more of our effort will be expended on satisfying user intent compared to being in the weeds thinking about machine-readable signals

- Details: the actual mechanics of the job will look more like the work involved in CRO

SEO has always been a balancing act between time spent trying to be the best result for your target search queries and time spent showing that you are (or look like you should be!) the best result. The more sophisticated the search algorithms become, the more these two things should align.

Maybe one day, the coupling will be so tight between what users are looking for, and the search engines’ ability to discern the best results, that we will only need to worry about user experience, and test results showing user preferences will suffice to convince us that a change will be good for organic visibility too. We’re not there yet, and I’m not sure we’ll ever get there because testing on your current users may not always extrapolate to the opportunities available in the wider market.

What does it mean to say SEO will look more like CRO?

There is a naive understanding of CRO as being just about testing. Anecdotes such as Google supposedly testing 41 shades of blue can lead to a false understanding of CRO efforts being about testing huge numbers of essentially random variations. In fact, the most effective CRO efforts involve substantial work in generating strong hypotheses based on data and research before testing the resulting outputs to determine the winners.

Although I do believe in the power of SEO A/B testing (obviously!), what I mean by saying that the future of SEO looks more like CRO is not just that it will involve a lot more testing than SEO has traditionally. I specifically mean that initiatives will tend to look more like successful CRO initiatives:

- Research and data analysis

- Hypothesis generation

- Test your strongest hypotheses

- Roll out winners

- Gather insights from test results to steer future efforts

What’s driving the change?

The search engines have always wanted their results to reflect user preferences, and part of what’s going on here is just that they are getting better at it, narrowing the gap, and reducing the opportunities for manipulation.

There’s a more subtle reason too, though, which is fundamentally caused by the increasing shift from rules-based algorithms to machine-learning-based approaches. When the underlying algorithm is rules-based, (“these signals, weighted like this, tell us which site is most relevant and authoritative for this query”), it’s possible to optimise for the rules themselves.

Of course, the rules were never published, but we could do work to expose them. There were two main complementary approaches:

Firstly, we could think about the problem from first principles, stay abreast of the latest in information retrieval theory, and build up an understanding of what “must” be going on. (Bill Slawski’s writing is the most valuable source of insights of this kind that I know about).

Secondly, using these insights, we could conduct tests on the black box and figure out where we were right and where we were wrong, and the relative importance of the different signals. Knowing that we were seeking underlying “rules”, it made sense for these tests to be carried out in the “lab” (i.e. on test websites / volunteer users) and extrapolated to general insights, or to be formed of correlation studies seeking real-world confirmation of relationships between the theoretically-important variables and actual outcomes.

The move from rules-based (“these signals, weighted like this, tell us which site is most relevant and authoritative for this query”) to objective-based (“dear computer, create the rank ordering most likely to satisfy searcher intent”) has a few relevant impacts:

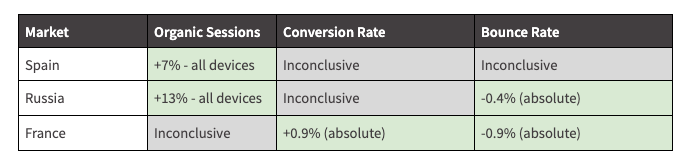

- It is much more likely that the signals and / or their weighting will vary across different kinds of queries and industries, limiting the generality of insights gathered through laboratory testing. You can see this in our testing case studies, where results gathered in one sector often do not apply in others.

- We have no reason to expect all the “learned” weightings will relate to human-understandable concepts and so we should not necessarily expect to have intuition about how to improve some of the factors even if we could peek inside the algorithm

- Even the engineers building the system are unlikely to know how it will respond to tweaks to features, so we should not expect to be able to derive rules of thumb from correlation studies on the black box

What’s going to remain different between SEO and CRO?

The biggest differences that I anticipate sticking around are both related to the existence of a crawling / indexing / ranking process sitting in between publishing an update to your website and it being visible to searchers. I’m going to use the shorthand of “googlebot” to refer to every stage of this end-to-end process. It means:

- The way we do testing is (and will remain) different. As long as the preferences of (your existing) users and googlebot aren’t exactly the same, we will want to run tests to see what googlebot thinks. The fundamental nature of this feeling like a need to impress a constituency of one means that it’s not possible to run CRO-like A/B tests that split the audience - which is why SEO A/B testing is different, and fundamentally why SearchPilot exists at all

- There are likely to remain some fundamental “robot-like” attributes of googlebot for some time to come, and so there will remain SEO work that we do only for googlebot (think: sitemap.xml, hreflang, robots.txt etc)

The future is here but not evenly distributed

To summarise, in the past we were limited to correlation studies and lab experiments based on hypotheses constructed from academic research and first principles. These were inherently limited because correlation studies lacked insights into causality and lab experiments were disconnected from the real world.

At the time, this wasn’t too much of a problem, because there were underlying rules that we could discover, learn, or intuit, and these rules corresponded to concepts that we could understand and seek to apply to our own sites.

With that connection breaking down, some companies are turning to a toolkit that looks more like CRO:

- Research and data analysis

- Hypothesis generation

- Test your strongest hypotheses

- Roll out winners (and some inconclusive results! See my memo business not science)

- Gather insights from test results to steer future efforts

Our experience working with some companies that operate like this is what makes me believe that this is the future of SEO - at least for large websites. The difference between this kind of operating model and the ways we have all done SEO in the past include not only improvements in outcomes from detecting surprising uplifts and avoiding surprising losses, but also the organisational benefits of being able to show evidence that your efforts are bearing fruit and sustain focus and investment.

If you want to explore the future of SEO for your organisation, we’d love to chat! You can ask me any questions you like on twitter or get a demo of SearchPilot here.