Most companies still rely on a lot of guesswork when it comes to SEO. They default to best practices, assumptions, or vague statements from Google itself. Historically, this was the standard way of doing SEO. As a digital marketer, you would come up with a list of desired website changes and then prioritize them by their assumed potential impact. While this approach is common, it’s not the most effective one.

We recommend running SEO split-tests instead, at a high testing cadence. Research has shown that the distribution of website test results is fat-tailed, meaning you are more likely to get outlier results than a normal distribution would suggest. Roughly, the implication is that you’re better off testing more changes more rapidly, because that makes you more likely to find the big winners in the fat tails. Since those winners are more likely to be outsized, it is OK to test more rapidly, even at some cost to statistical certainty. If results were normally distributed, it might make more sense to test more slowly and focus more on statistical power.
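To make “fat-tailed” concrete, here is a small simulation sketch. It assumes a Student’s t distribution with 3 degrees of freedom, rescaled to unit variance, as a stand-in for a fat-tailed distribution of test results. That choice is an illustrative assumption: it is not the model from the research mentioned above, and it is not real SEO data. The sketch counts how often a draw lands more than three standard deviations from zero under each distribution.

```python
import math
import random


def unit_variance_student_t(rng: random.Random, df: int) -> float:
    """Draw from a Student's t distribution rescaled to variance 1 (needs df > 2)."""
    z = rng.gauss(0.0, 1.0)
    # A chi-squared variable with df degrees of freedom is Gamma(shape=df/2, scale=2).
    chi2 = rng.gammavariate(df / 2.0, 2.0)
    t = z / math.sqrt(chi2 / df)
    # Var(t_df) = df / (df - 2), so divide by its square root to get unit variance.
    return t / math.sqrt(df / (df - 2))


def tail_share(samples: list[float], threshold: float) -> float:
    """Fraction of samples whose absolute value exceeds the threshold."""
    return sum(1 for x in samples if abs(x) > threshold) / len(samples)


def compare_tails(n: int = 100_000, df: int = 3, threshold: float = 3.0,
                  seed: int = 42) -> tuple[float, float]:
    """Return (normal tail share, fat-tailed tail share) beyond `threshold` std devs."""
    rng = random.Random(seed)
    normal = [rng.gauss(0.0, 1.0) for _ in range(n)]
    fat = [unit_variance_student_t(rng, df) for _ in range(n)]
    return tail_share(normal, threshold), tail_share(fat, threshold)


if __name__ == "__main__":
    normal_share, fat_share = compare_tails()
    print(f"normal: {normal_share:.4f}  fat-tailed (t, df=3): {fat_share:.4f}")
```

Under these assumptions, the theoretical shares beyond three standard deviations are about 0.3% for the normal distribution and about 1.4% for the rescaled t distribution. That is roughly five times as many outliers from the same variance, which is the sense in which outliers are “more likely than a normal distribution would suggest.”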

And if you already have an edge over your competitors, you want to widen it by running as many tests as possible and iterating quickly.

In this post, we’ll show you how to start SEO split-testing instead of making untested website changes.

How does SEO split-testing work?

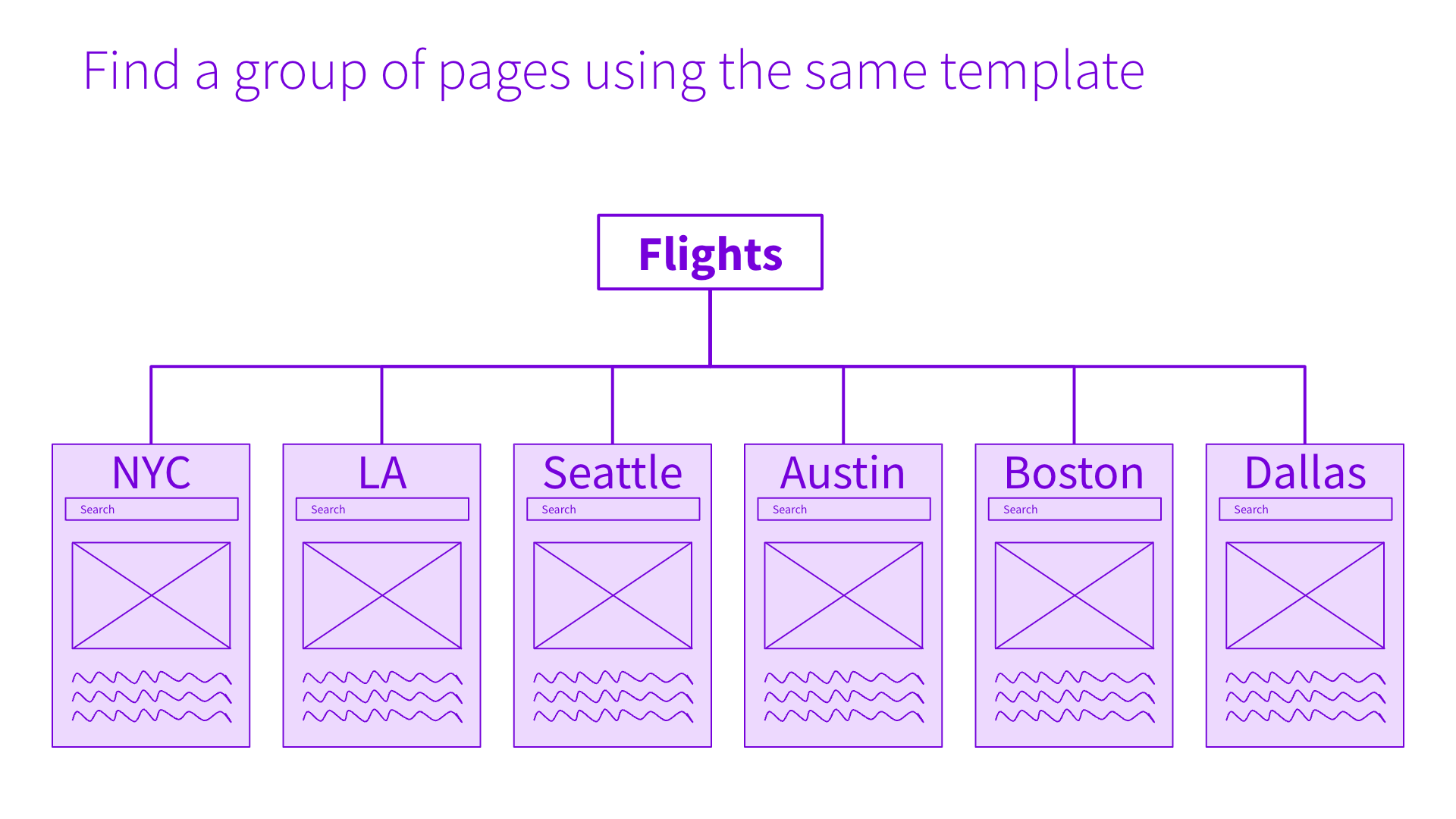

SEO split-testing is when you divide pages of similar intent on your website into two groups. You then make a change to one group and monitor its impact on a key SEO metric, such as organic traffic (we focus on traffic rather than rankings), and on how that metric connects to business metrics like conversions and revenue. The goal is to identify changes that positively impact SEO metrics. A test that finds one is what we call a “winning test”.
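One common way to divide pages into two comparable groups is a matched-pair (stratified) split. You sort pages by baseline traffic, pair neighbors, and randomly send one page from each pair to the variant group. The sketch below assumes you already have a list of same-template pages with their baseline organic sessions. The `Page` type, the URLs, and the traffic numbers are hypothetical, and this is not necessarily how SearchPilot assigns pages.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Page:
    url: str
    daily_organic_sessions: float  # baseline traffic measured before the test starts


def stratified_split(pages: list[Page], seed: int = 0) -> tuple[list[Page], list[Page]]:
    """Split pages into (control, variant) groups with similar baseline traffic.

    Pages are sorted by traffic and taken in consecutive pairs. A seeded
    shuffle of each pair decides which page goes into the variant group.
    """
    rng = random.Random(seed)
    ordered = sorted(pages, key=lambda p: p.daily_organic_sessions, reverse=True)
    control: list[Page] = []
    variant: list[Page] = []
    for i in range(0, len(ordered) - 1, 2):
        pair = [ordered[i], ordered[i + 1]]
        rng.shuffle(pair)
        control.append(pair[0])
        variant.append(pair[1])
    if len(ordered) % 2 == 1:
        # With an odd number of pages, the lowest-traffic page stays in control.
        control.append(ordered[-1])
    return control, variant


if __name__ == "__main__":
    # Hypothetical template pages with made-up baseline traffic.
    pages = [Page(f"/flights/city-{i}", 100.0 * (i + 1)) for i in range(20)]
    control, variant = stratified_split(pages, seed=7)
    print(sum(p.daily_organic_sessions for p in control),
          sum(p.daily_organic_sessions for p in variant))
```

Pairing by traffic keeps the two groups’ baselines close. As a result, a difference after the change is less likely to come from one group simply containing the bigger pages.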

To start running an SEO split-test, work through these questions:

- Which pages do you want to focus on?

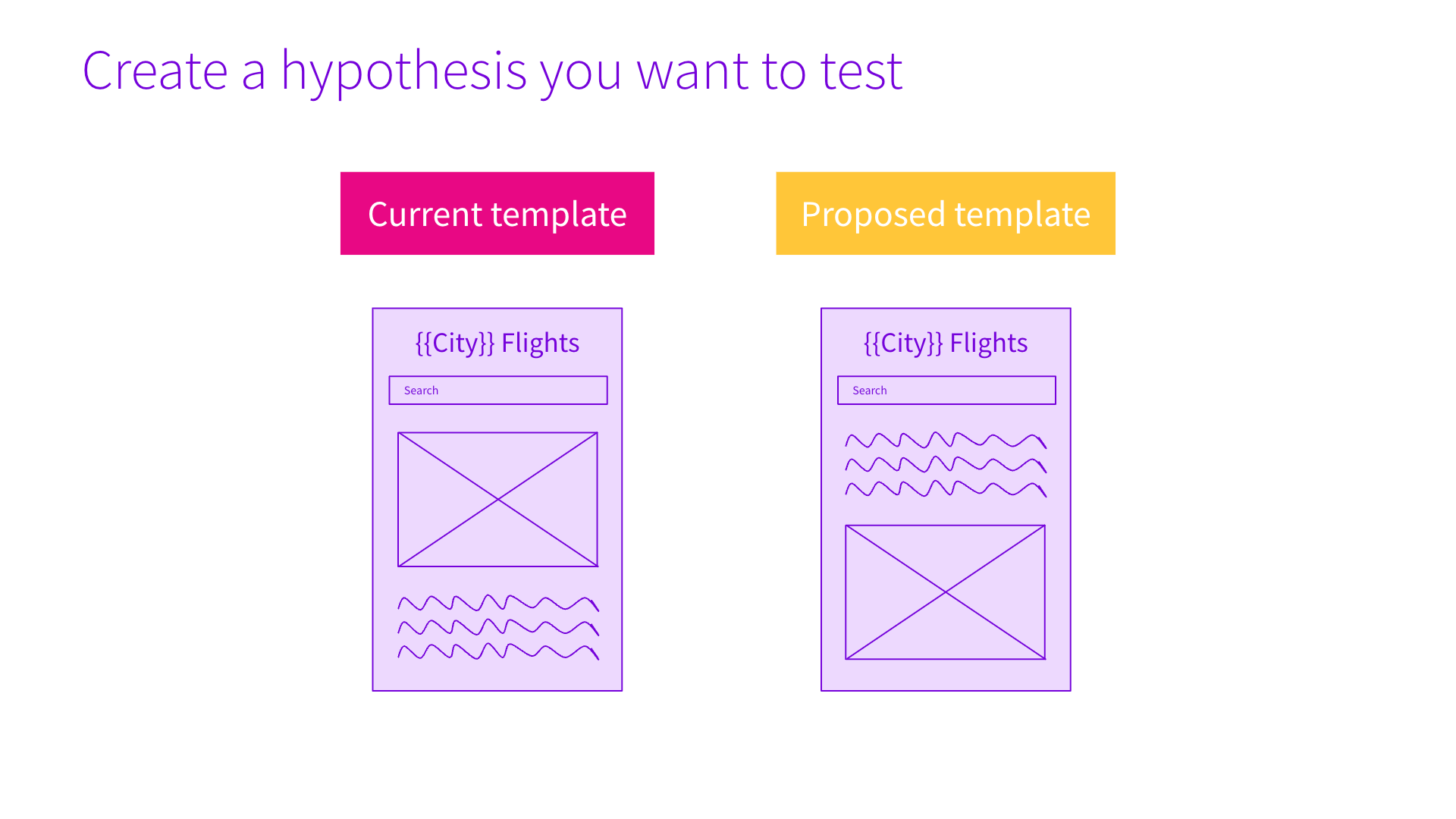

- What’s the hypothesis?

- Which pages should be in the variant group?

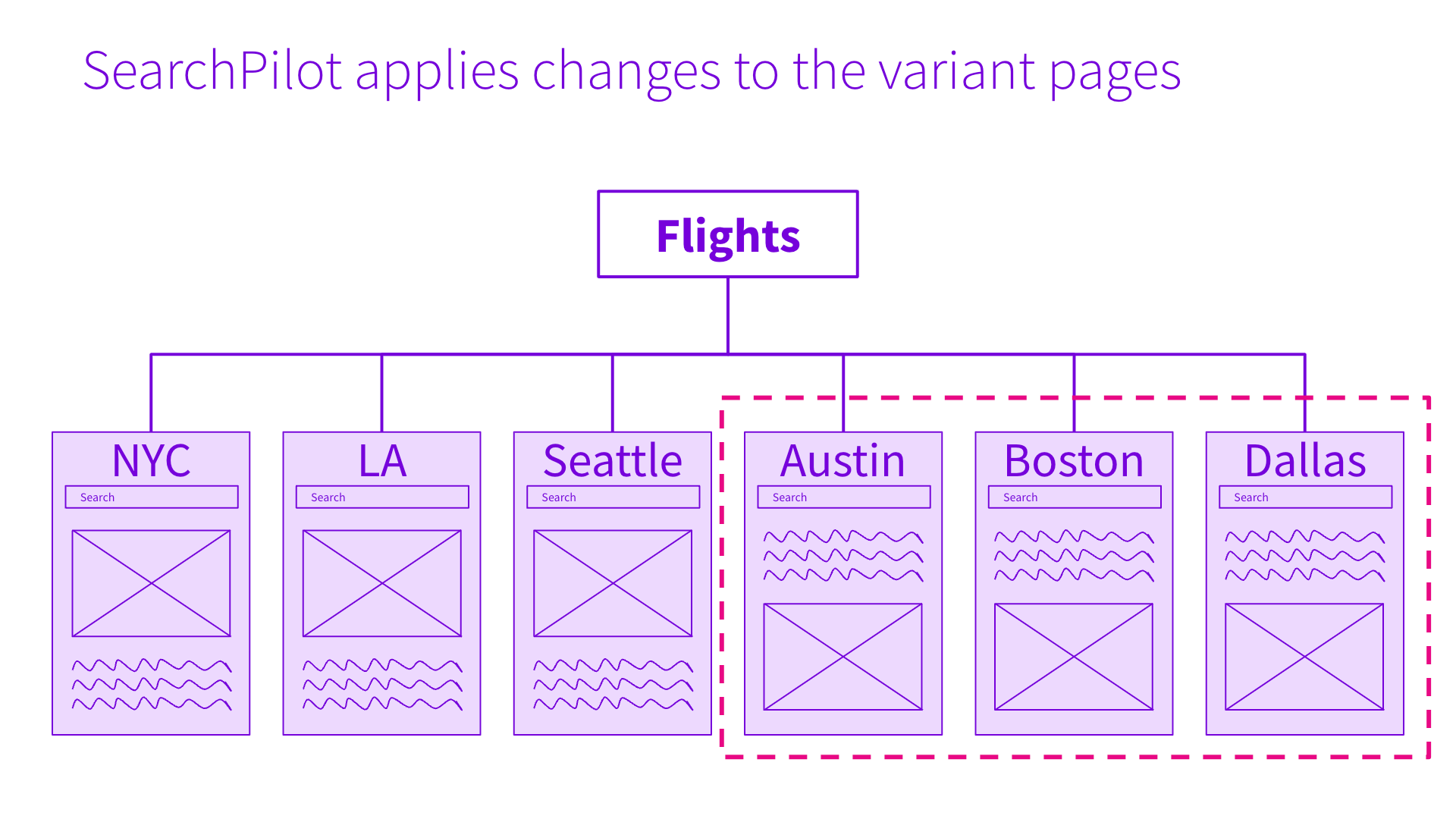

- How are changes applied?
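Once the variant group is fixed, the change has to be applied to those pages only, and consistently for the whole test. Below is a minimal sketch. It assumes you can intercept the HTML before it is served (for example, in server-side rendering or at an edge layer), so that the change is present in the HTML crawlers receive rather than depending on client-side JavaScript. The paths, the title rewrite, and `apply_title_test` are hypothetical.

```python
import re

# Hypothetical variant group: the pages chosen to receive the change.
VARIANT_PATHS = frozenset({"/flights/austin", "/flights/dallas", "/flights/boston"})

TITLE_RE = re.compile(r"<title>(.*?)</title>", re.IGNORECASE | re.DOTALL)


def apply_title_test(path: str, html: str,
                     variant_paths: frozenset[str] = VARIANT_PATHS) -> str:
    """Return the HTML to serve for `path`.

    Control pages are returned unchanged. Variant pages get the test change,
    here a hypothetical title rewrite that prepends "Cheap Flights: ".
    """
    if path not in variant_paths:
        return html
    # A function replacement avoids backslash escapes in the title being interpreted.
    return TITLE_RE.sub(lambda m: f"<title>Cheap Flights: {m.group(1)}</title>",
                        html, count=1)


if __name__ == "__main__":
    page = "<html><head><title>Flights to Austin</title></head></html>"
    print(apply_title_test("/flights/austin", page))
    print(apply_title_test("/flights/nyc", page))
```

Because membership is a fixed set of paths, a given page always gets the same version for the duration of the test.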

How is an SEO split-test different from a CRO test?

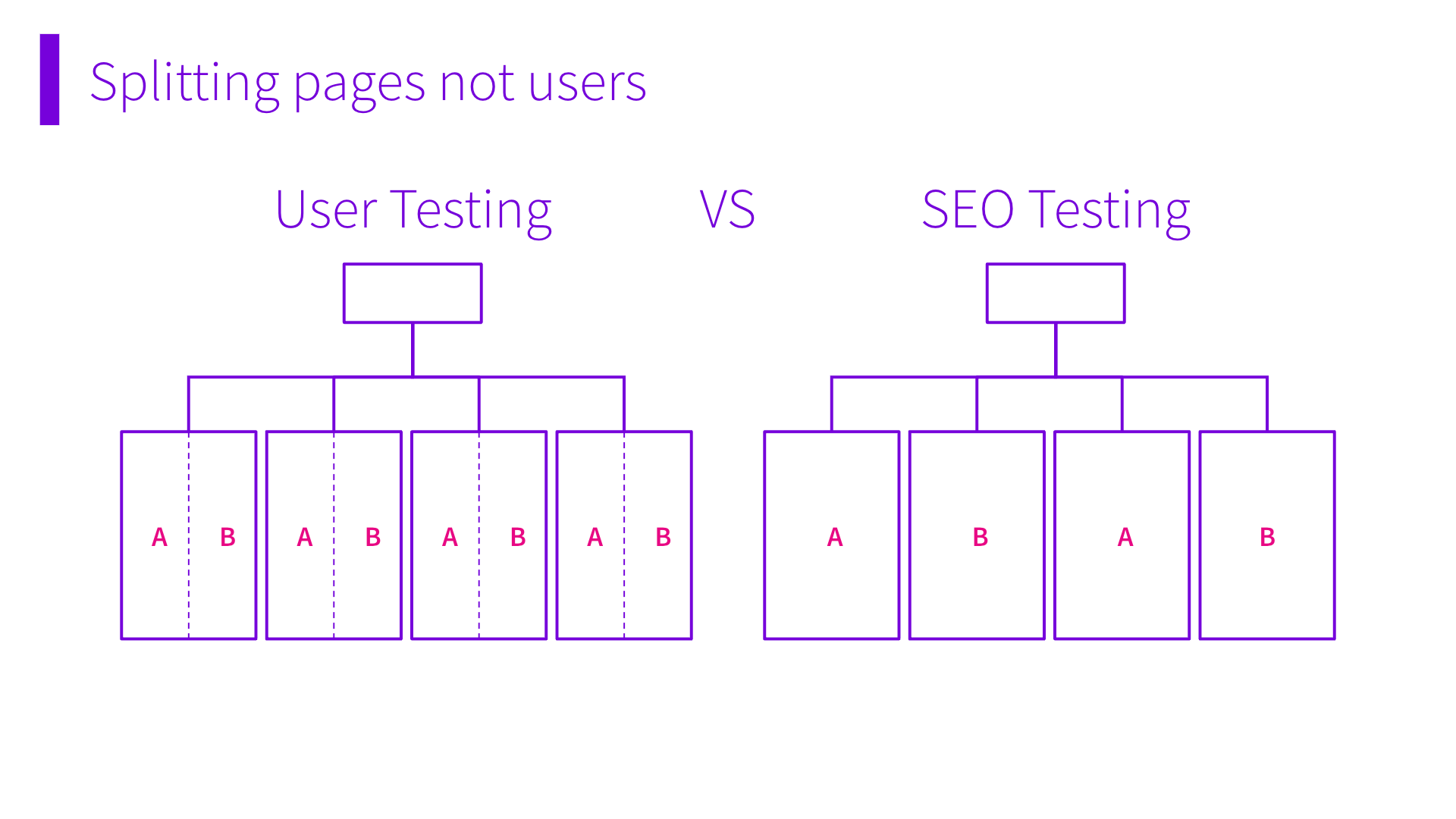

At first glance, SEO split-testing can sound a lot like a conversion rate optimization (CRO) test. The key difference is that in a CRO test, you’re splitting users into two camps: 50% of users see headline A, and 50% see headline B.

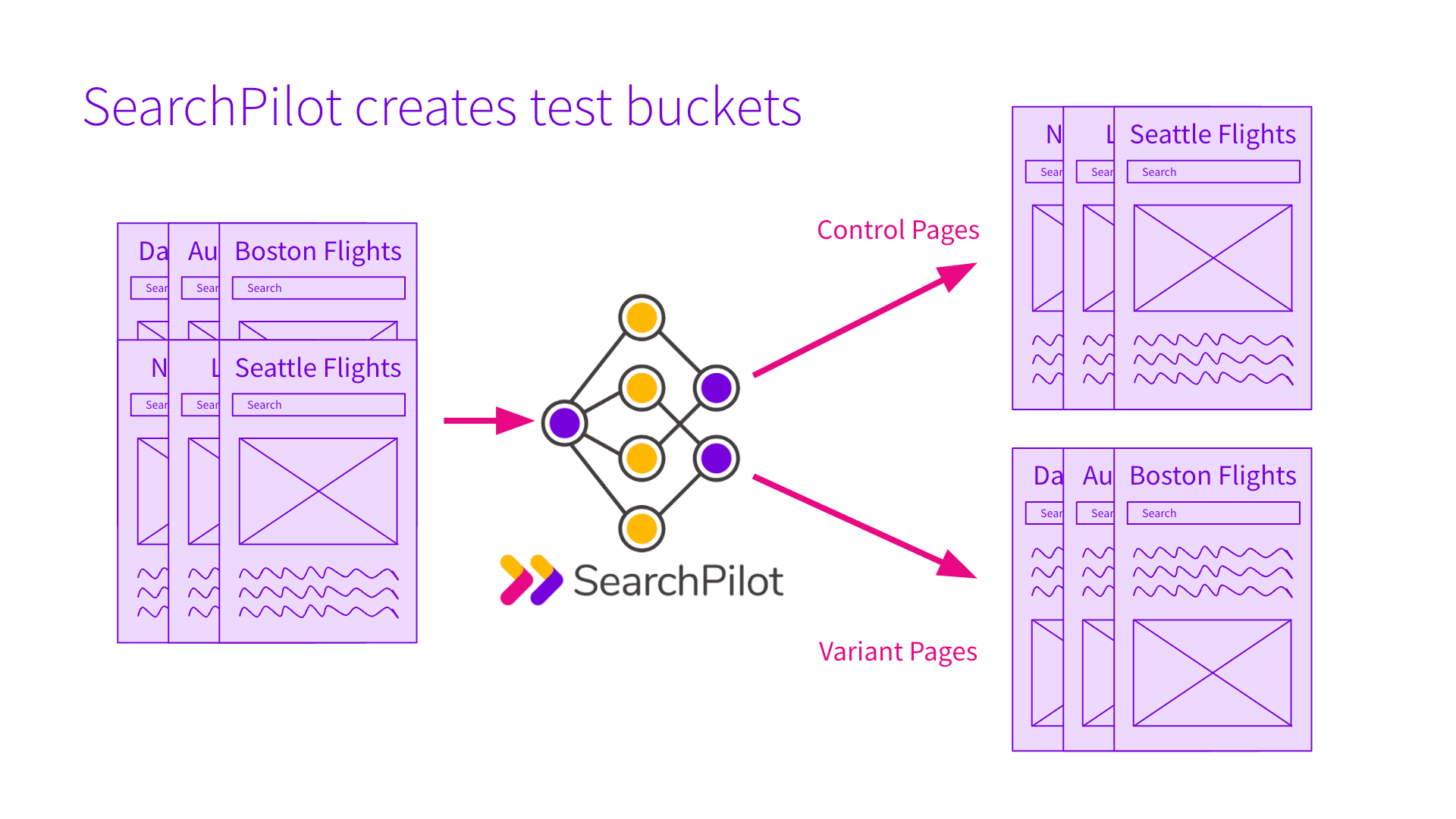

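In an SEO split-test, you’re splitting pages, not users. That’s because the “user” that matters for rankings is the search engine’s crawler, which needs to see one consistent version of each page.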

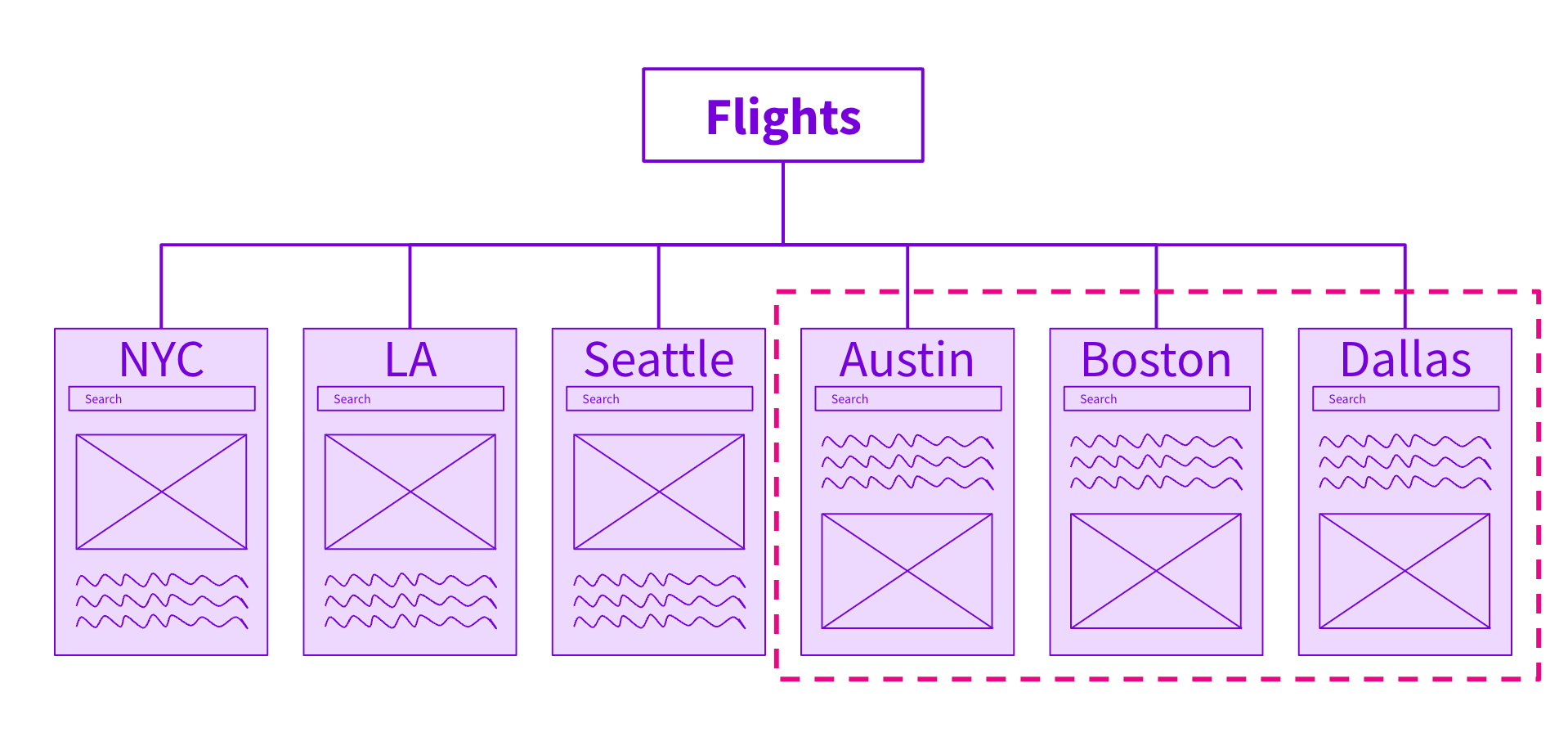

In this example, we’re making changes to our flight pages for Austin, Dallas, and Boston. We’ll then see whether those pages perform better or worse than the NYC, LA, and Seattle pages.

Note that in a real test we would typically be working with much larger groups of pages.

By making changes to groups of pages, we can then see how those groups perform relative to each other from an SEO perspective.
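One simplified way to compare the groups is to divide the variant group’s traffic growth by the control group’s growth over the same period. The sketch below uses the city pages from this example with made-up traffic numbers. It is a basic ratio estimate for illustration, not SearchPilot’s analysis method, and it gives no measure of uncertainty.

```python
# Hypothetical average daily organic sessions per page (made-up numbers).
PRE = {"austin": 120, "dallas": 150, "boston": 130, "nyc": 400, "la": 350, "seattle": 180}
POST = {"austin": 138, "dallas": 168, "boston": 150, "nyc": 420, "la": 364, "seattle": 189}

VARIANT = ["austin", "dallas", "boston"]  # pages that received the change
CONTROL = ["nyc", "la", "seattle"]        # pages left unchanged


def group_total(sessions: dict[str, float], pages: list[str]) -> float:
    """Total sessions across a group of pages."""
    return sum(sessions[p] for p in pages)


def relative_uplift(pre: dict[str, float], post: dict[str, float],
                    variant: list[str], control: list[str]) -> float:
    """Estimate the change's effect as variant growth relative to control growth.

    A site-wide shift that scales every page's traffic by the same factor
    cancels out of this ratio.
    """
    variant_growth = group_total(post, variant) / group_total(pre, variant)
    control_growth = group_total(post, control) / group_total(pre, control)
    return variant_growth / control_growth - 1.0


if __name__ == "__main__":
    print(f"{relative_uplift(PRE, POST, VARIANT, CONTROL):+.1%}")  # prints +9.0%
```

Because both groups are measured over the same period, a shift such as seasonality that scales traffic on all pages by the same factor drops out of the comparison. A real analysis also needs a confidence interval and, as noted above, far more than three pages per group.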

Next, we’ll dive into a helpful mindset for SEO split-testing that we call the Moneyball mindset.

What is the Moneyball Mindset?

I recently gave a talk at MozCon about how to think about SEO split-testing. I called it the Moneyball mindset, a way to articulate the “winning shots” of SEO split-testing.

If you’re familiar with basketball, you know there are three types of shots:

- Close-range shots near the basket that are highly likely to go in (dunks and layups)

- Mid-range shots that are less of a sure thing

- Three-pointers that are worth more but harder to score

We consider winning tests to be the dunks and layups of the SEO world. We’re confident that if we make those website changes, we’re going to get great results.

Creating new pages or site sections is like shooting a three-pointer: necessary and hugely valuable, but harder to pull off.

All of your untested changes are like the mid-range shots in basketball. They can seem attractive, but they contribute a surprisingly low percentage of points. It wasn’t until basketball adopted advanced statistical analysis that teams realized how inefficient mid-range shots were for most players. As a result, the game bifurcated: teams now take a lot of threes and a lot of shots around the basket, but far fewer mid-range shots than they used to.

Applied to SEO split-testing, the lesson is that your effort is better spent on creating new pages (three-pointers) or on testing more changes, so you can focus on the things that work (dunks).

Sometimes you have to shoot the mid-range, of course, and the same is true of untested website changes. For instance, on smaller websites we still have to make untested on-site changes, because there may not be enough data, or because the changes can’t be tested consistently.

At the strategic level, marketing leadership should invest in a higher testing cadence. This means you aren’t only going for fancy shots like three-pointers or guessing in the mid-range. Instead, you’re making sure you take enough precise, close-range shots that reliably result in “points”.

Read more about our Moneyball mindset for SEO split-testing.

Making SEO Split-Testing More Scientific With SearchPilot

In our experience, if you’re changing something on your website because you believe it’s a good idea for search performance, you’ll benefit from testing it in a controlled environment first.

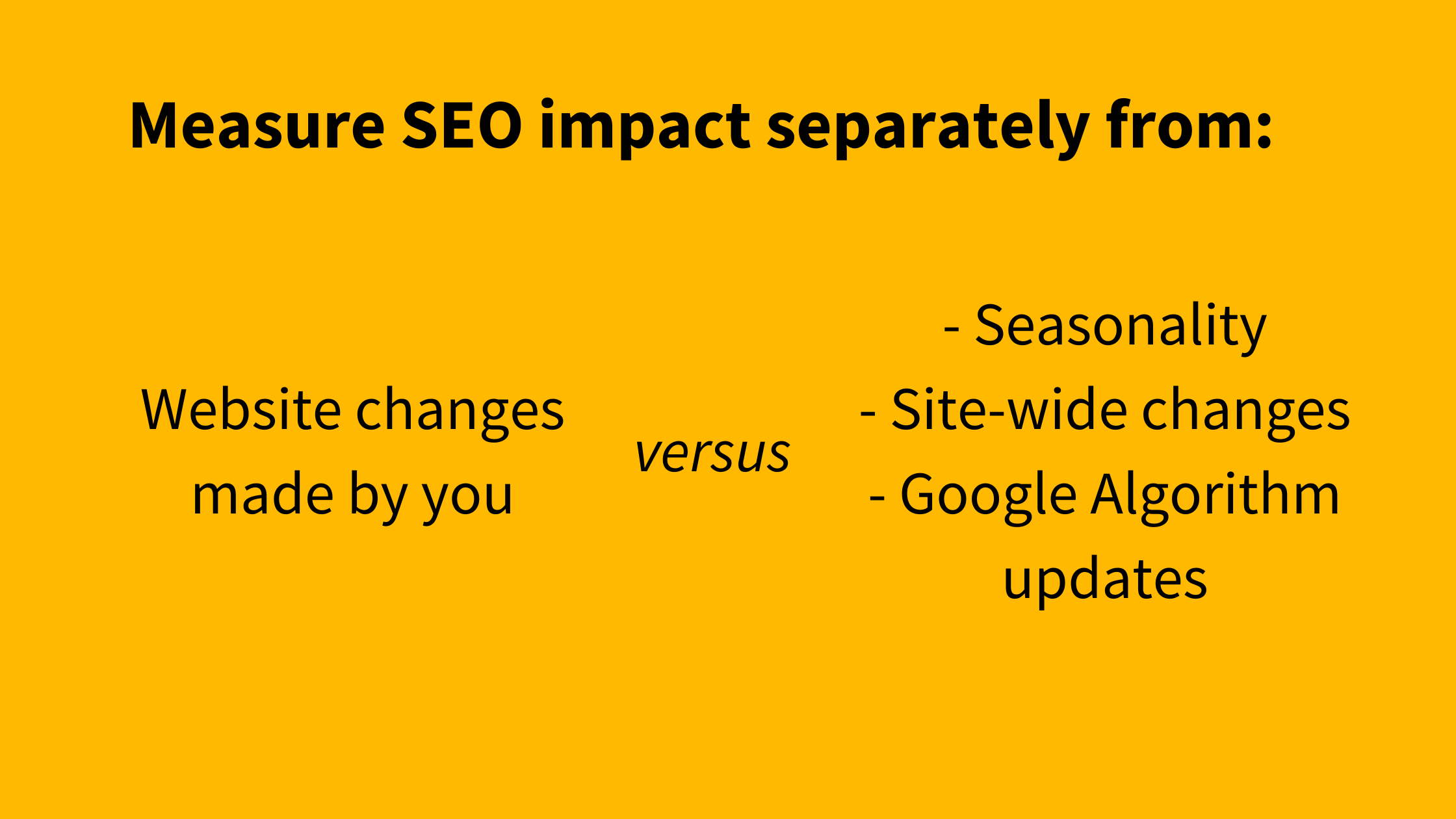

With the help of SearchPilot, you can control for and isolate the impact of a website change. You no longer have to untangle what was the direct result of the change versus seasonality, site-wide changes, or a Google algorithm update.

If you’re just getting started with SEO split-testing, be aware that when you increase your testing cadence in a controlled environment, you will see more neutral and negative results. These can actually lead to additional interesting insights. For instance, we’ve seen many tests produce neutral or negative results even when they implemented “SEO best practices”. Negative outcomes are still helpful: they prevent you from rolling out a change that would hurt your search performance. Such changes are a real drag on many companies’ organic search results.

Similarly, neutral outcomes can lead to interesting discoveries that you wouldn’t have made any other way. For instance, when you learn that something isn’t valuable, your engineering team doesn’t need to build and maintain it as a permanent feature. That saves time and energy for more beneficial website changes.
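The three kinds of result described above (winning, neutral, and negative) can be expressed as a simple decision rule on a confidence interval for the estimated uplift. This sketch assumes the interval comes from your own analysis. The rule, the names, and the suggested actions are illustrative, not SearchPilot’s definitions.

```python
from enum import Enum


class Outcome(Enum):
    WINNING = "winning"    # roll the change out
    NEUTRAL = "neutral"    # no need to build and maintain it permanently
    NEGATIVE = "negative"  # don't roll it out; it would hurt search performance


def classify(ci_lower: float, ci_upper: float) -> Outcome:
    """Classify a test from a confidence interval on its relative uplift.

    WINNING if the whole interval is above zero, NEGATIVE if the whole
    interval is below zero, and NEUTRAL if the interval includes zero.
    """
    if ci_lower > ci_upper:
        raise ValueError("ci_lower must not exceed ci_upper")
    if ci_lower > 0:
        return Outcome.WINNING
    if ci_upper < 0:
        return Outcome.NEGATIVE
    return Outcome.NEUTRAL


if __name__ == "__main__":
    for interval in [(0.02, 0.11), (-0.03, 0.04), (-0.09, -0.01)]:
        print(interval, classify(*interval).value)
```

Treating any interval that includes zero as neutral is deliberately conservative. It means only changes with a clearly positive effect get rolled out.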

Ready to start SEO split-testing or level up your testing cadence? Schedule a free demo today.
