In recent years, there has been a sea change in the way that professional sports teams are coached and managed as a result of more available data and new statistical techniques for making the most of it.

I’m going to argue that the rise of testing capabilities in SEO is causing a similar sea change, and that everyone responsible for organic search performance for large websites should be aware of these changes and should be building them into their plans.

This post is based on my talk at MozCon 2022. You can see the full slide deck here (and if you want to watch me present it, the full video is embedded at the end of this post):

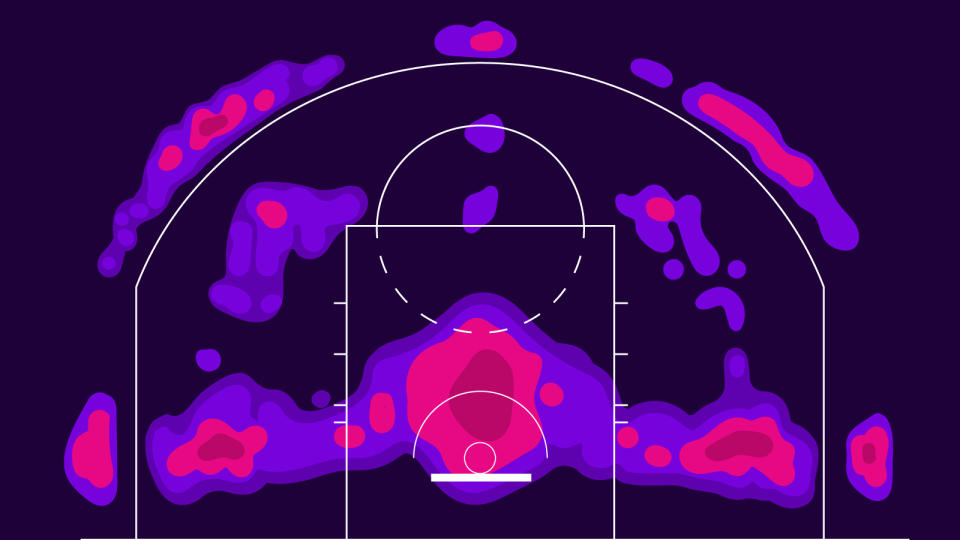

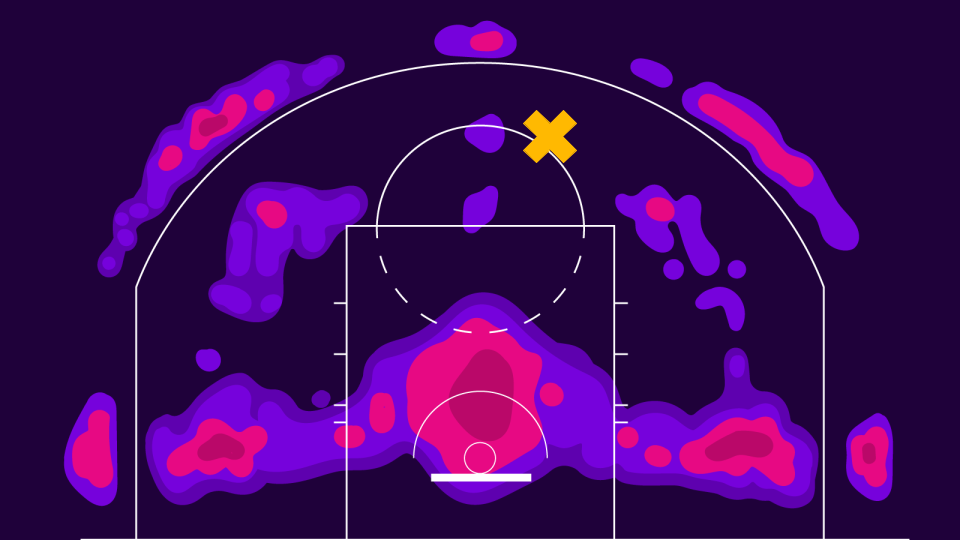

Let’s start with the sports background. Basketball is the sport I know best. This is a shot chart, and it shows the 200 most common places on the floor that professional players shoot from:

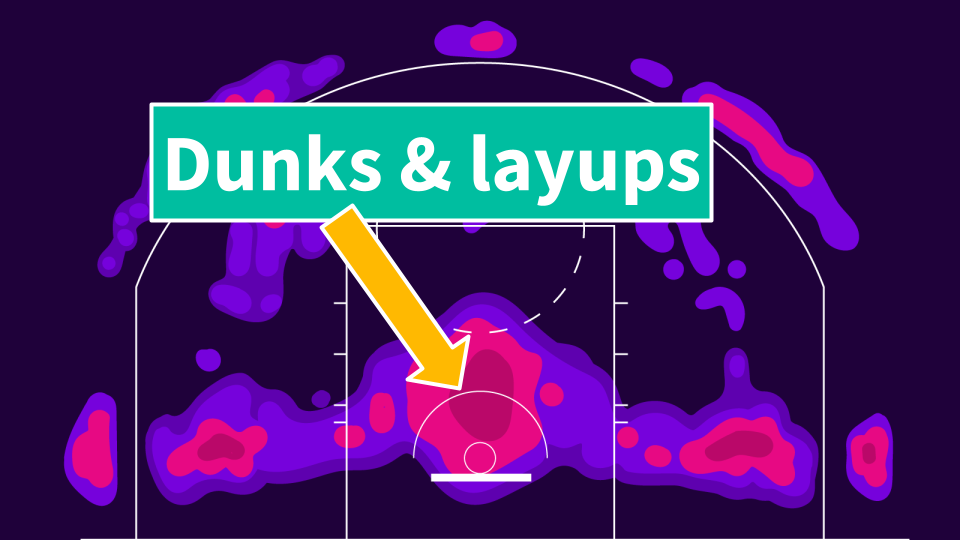

You can see three specific kinds of shot. Dunks and layups around the rim:

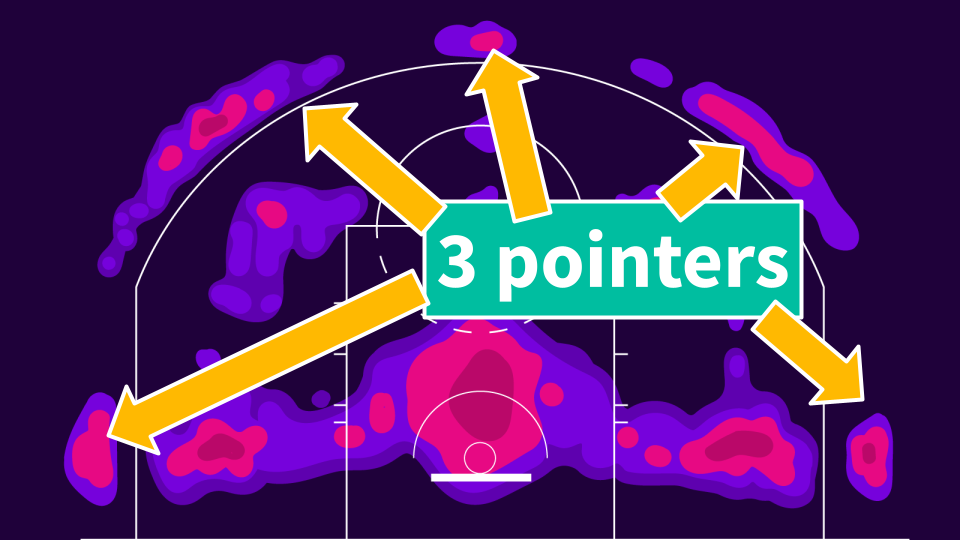

Three pointers, from outside the arc:

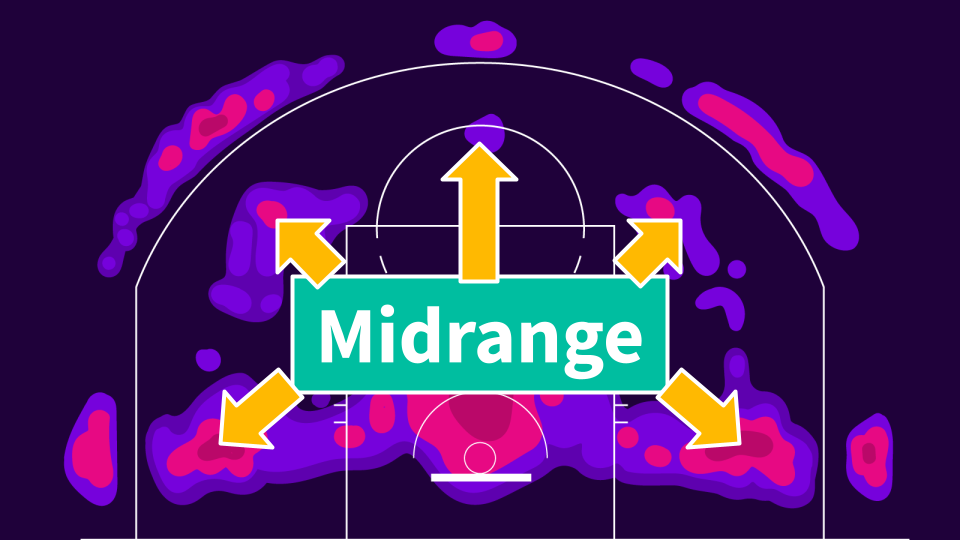

and what’s called the mid-range, which is all the shots in between (if you don’t follow basketball, these are worth two points, like the dunks and layups):

Some of the most famous shots of all time are from the mid-range. This, for example, is Michael Jordan winning a championship from right here:

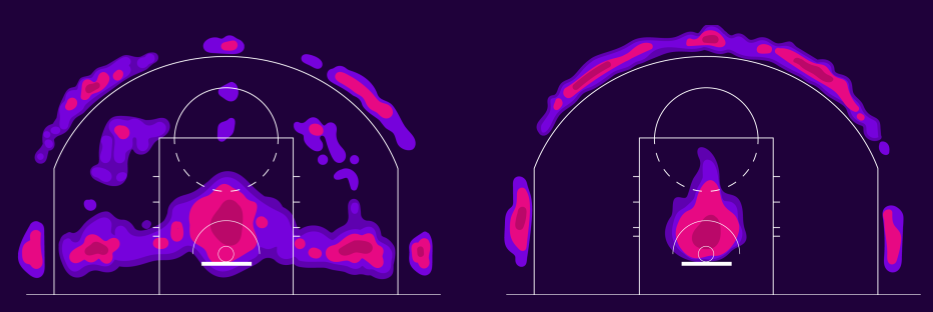

But actually, this is what the game looked like 20 years ago. Today it looks dramatically different:

This is quite a stunning change. Here it is side by side:

So what changed? Well, it started with every single NBA arena installing advanced cameras backed by machine learning systems that can track every player and the ball in real time throughout the game. They also keep track of all kinds of other details: how closely guarded players are, which way they are moving and how fast, what move they are making, and of course whether they made the shot or not. With this wealth of new statistical data, the analysts realised that mid-range shots were far harder than had been understood up to that point. With the full information to hand, they could see that in real game situations, mid-range shots were almost as hard as three pointers, but obviously weren’t worth as much:

Dunks and layups go in often enough to be worth taking even though they’re only worth two points, and three pointers are worth taking despite being harder because they’re worth 50% more. But in many situations, at the elite level, as soon as a player steps inside the three-point line, they should be trying to get to the rim (the actual story is a bit more complicated at the top).
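To make the arithmetic concrete, here is a minimal sketch of the expected-points comparison. The make rates are hypothetical numbers picked only to illustrate the shape of the argument (they are not real NBA statistics), and the names `SHOTS`, `expected_points` and `rank_shots` are mine:

```python
# Hypothetical make rates, chosen only to illustrate the arithmetic.
# They are NOT real NBA figures.
SHOTS = {
    "rim": {"points": 2, "make_rate": 0.62},
    "mid_range": {"points": 2, "make_rate": 0.40},
    "three_pointer": {"points": 3, "make_rate": 0.36},
}


def expected_points(points: int, make_rate: float) -> float:
    """Expected points per attempt: shot value times probability of making it."""
    return points * make_rate


def rank_shots(shots: dict) -> list:
    """Return (shot name, expected points) pairs, best shot first."""
    values = {
        name: expected_points(shot["points"], shot["make_rate"])
        for name, shot in shots.items()
    }
    return sorted(values.items(), key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    for name, value in rank_shots(SHOTS):
        print(f"{name}: {value:.2f} expected points per shot")
```

With these assumed rates, a mid-range shot that is only slightly easier than a three pointer ends up well behind it, because the extra point more than makes up for the lower make rate.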

What’s the SEO analytics revolution?

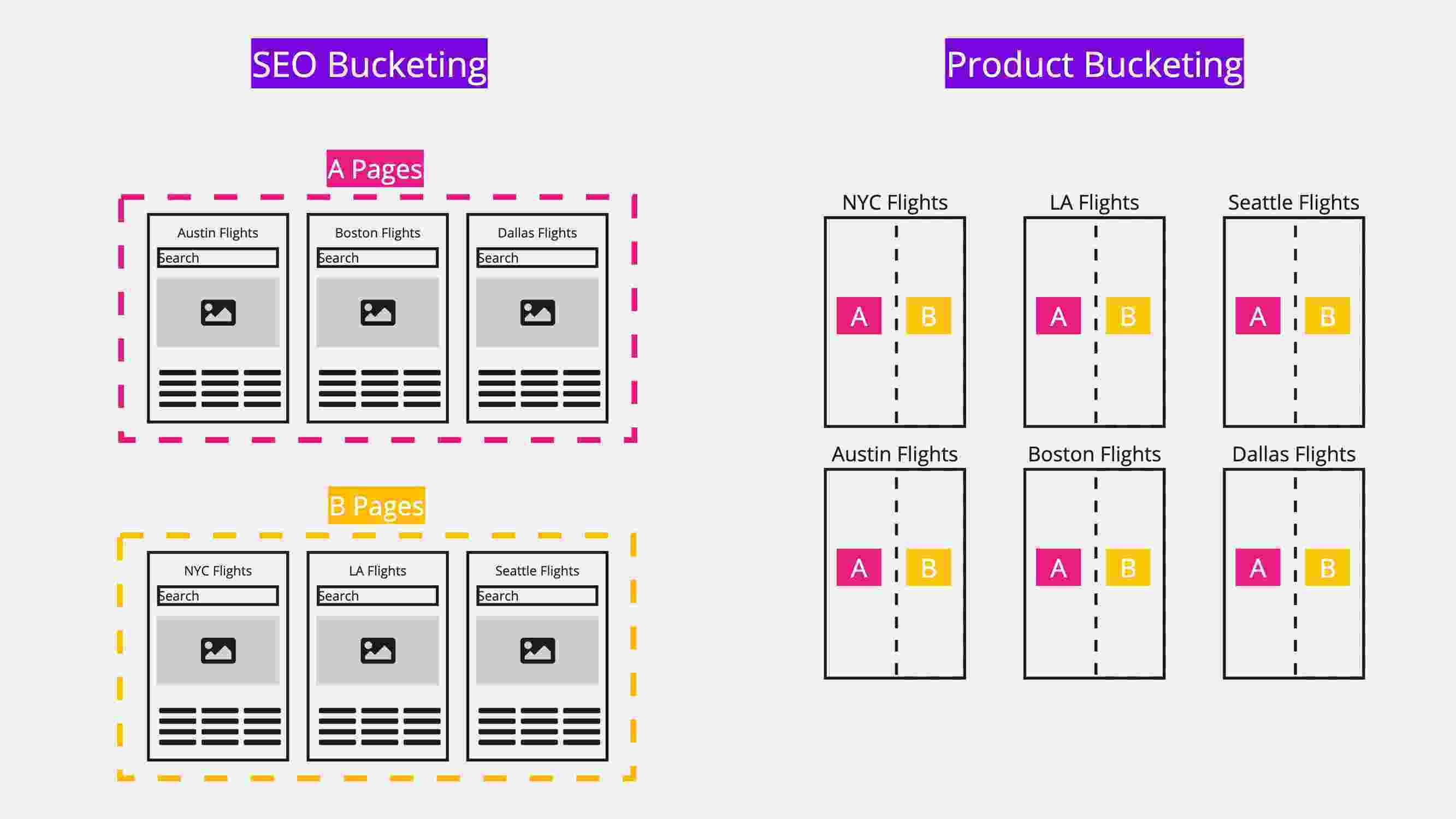

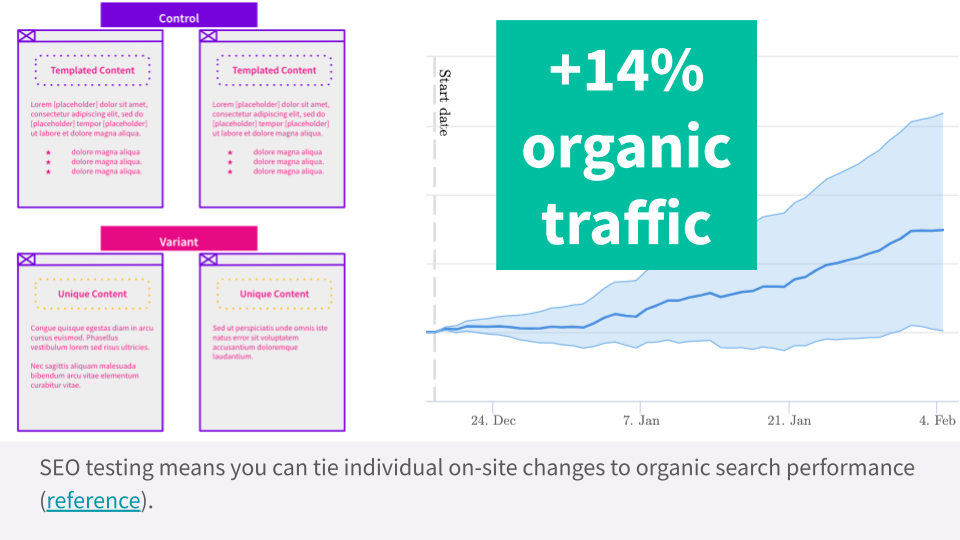

As you will know if you have read any of my work in recent years, we have been working on SEO A/B testing technology which enables us to measure the statistical effectiveness of individual on-site changes - in much the same way that the NBA’s cameras and statistical advances let them measure the probability of making specific shot types.

My analogy to the core mid-range insight is that winning tests are our equivalent of dunks and layups:

Three point shots, then, in our world, are new pages and site sections - highly valuable, with less certain payoff until you’ve tried them out.

The mid-range shots, the kind that seem attractive but are often damaging, are untested on-site changes, and I’m arguing that large websites with sophisticated teams should be reducing their reliance on them:

Are untested on-site changes really that bad?

In short, yes. We see an incredible number of changes turn out to be dramatically negative, even though we would historically have simply recommended that teams roll them out.

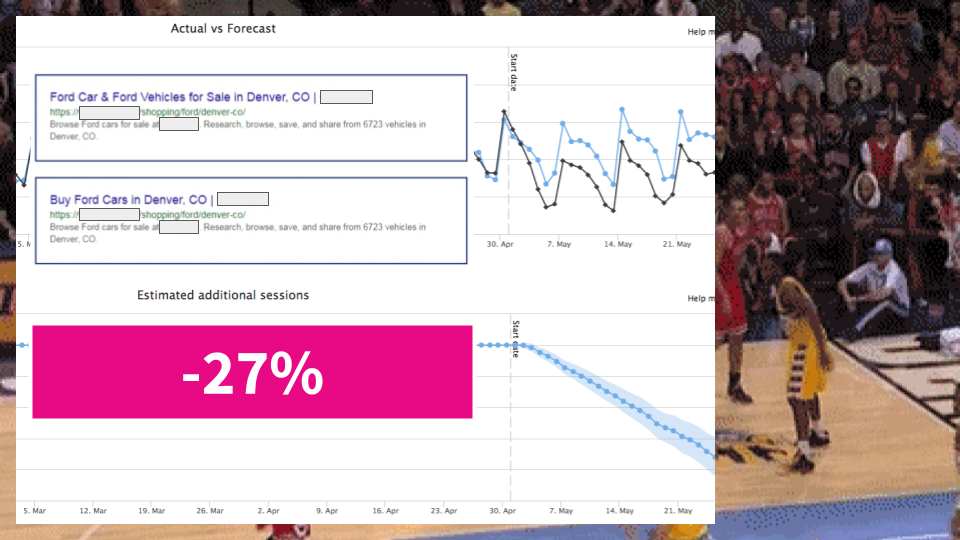

To continue the analogy, what happens is that you cross over - you find an amazing insight via keyword research:

You pull up for the shot (modify titles and metadata to match the search intent you have just discovered):

And AIRBALL:

For example, we modified titles to target the ways people more often search and removed some spammy-seeming repetition, and managed to cause a 27% drop in organic traffic.

How does SEO testing work?

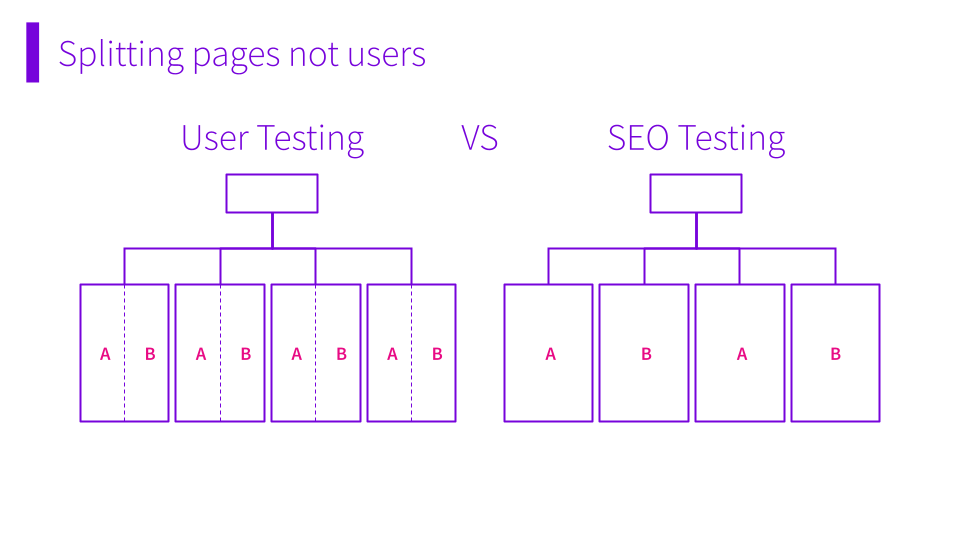

I’m not going to get into all the technical details in this post because we’ve written it all up in great detail in its own article, but the key thing to realise is that it’s different to user-centric or conversion rate testing:

If you’d like to see our testing technology in action, get in touch to get a demo.

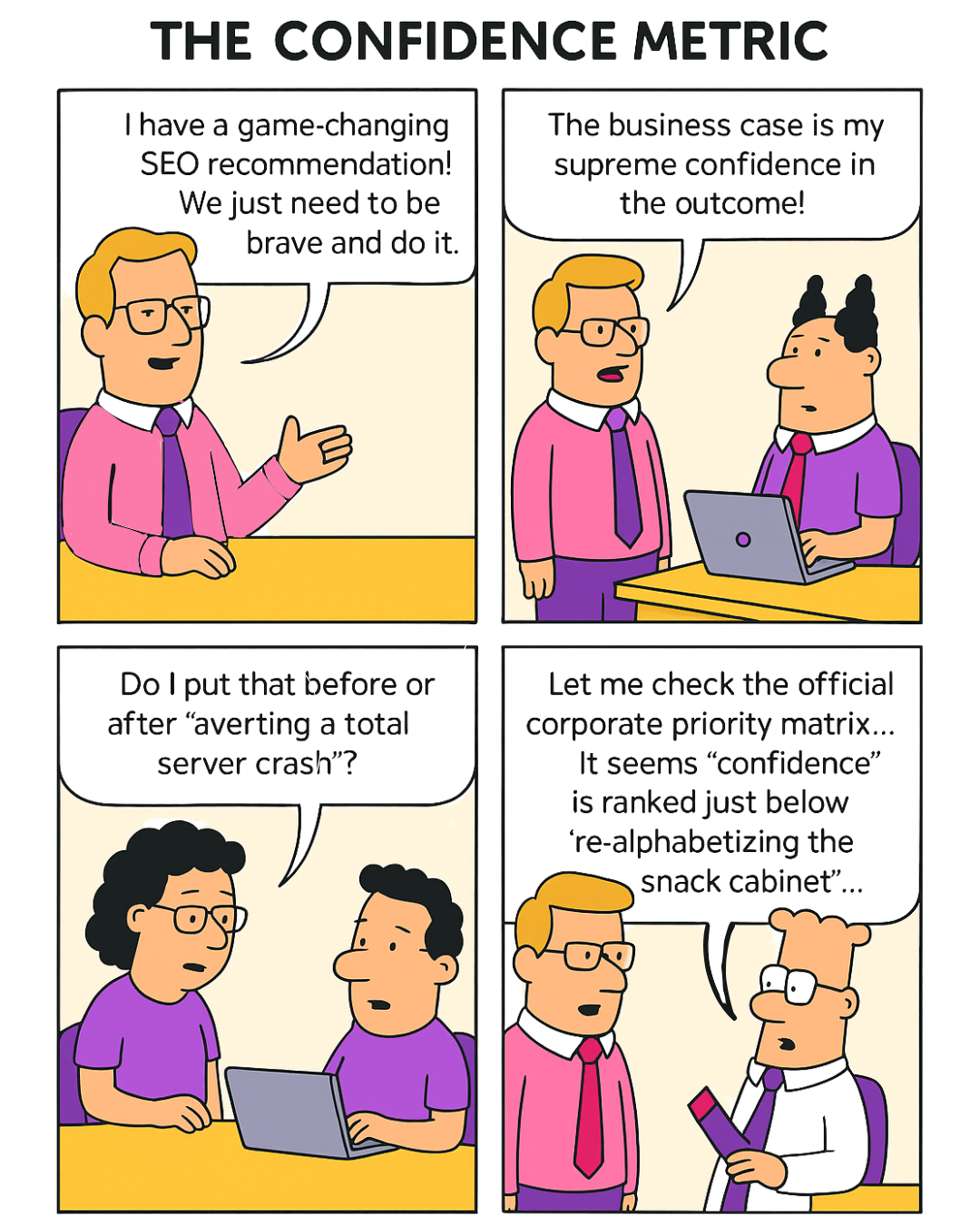

Can we evaluate changes by eye?

Traditionalists in sports have made the argument that they don’t need advanced statistics, and what they call the “eye test” suffices - by watching players and evaluating plays the old-fashioned way, they can get all the insights they need. Charles Barkley is a classic example of this line of reasoning:

For a long time, we have had no option but to work this way in SEO as well. When I got started in the industry, I used to contribute to expert “ranking factor surveys” detailing what experts thought worked and didn’t. I’ve also given presentations about the art of deconstructing Google’s official and unofficial statements to figure out what really works. And of course we have the marketing equivalent of the “eye test”: looking at analytics to try to figure out after the fact if a change was a good idea.

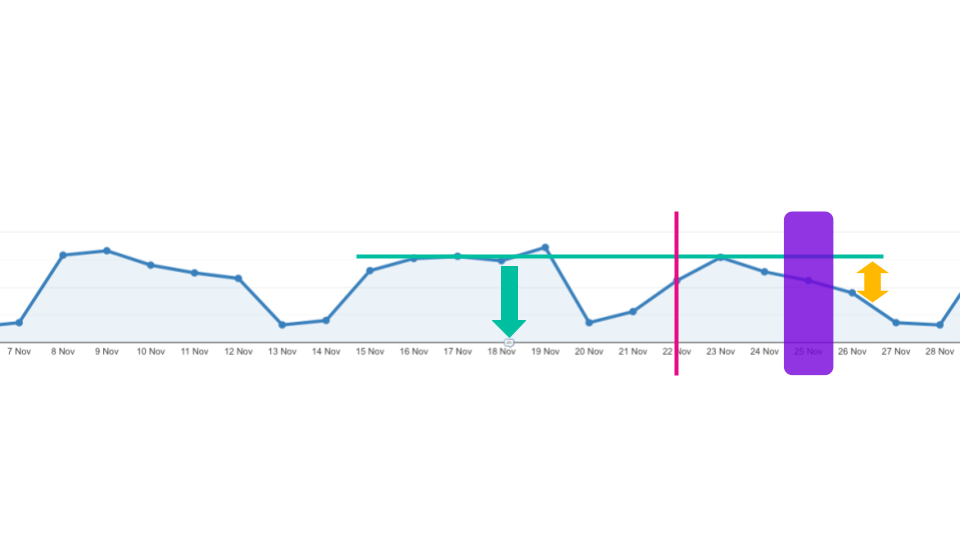

To highlight how difficult this is, and all the things that can confound an analysis, consider this analytics chart taken from SearchPilot’s own Google Analytics data after we made a change based on my intuition and a bit of keyword research. (Ironically, running a smallish B2B SaaS website, we aren’t in our own target market and typically can’t run SEO tests on our own site! This is a bit like how I personally can’t dunk any more, and never could shoot the 3 effectively, so I kind of have to shoot the mid-range!)

This is what the analytics looked like in the week after the change - showing a drop, but complicated by an algorithm update rolling out at an inopportune time (the pink line) and compounded by blips in the data caused by Thanksgiving (the purple highlight):

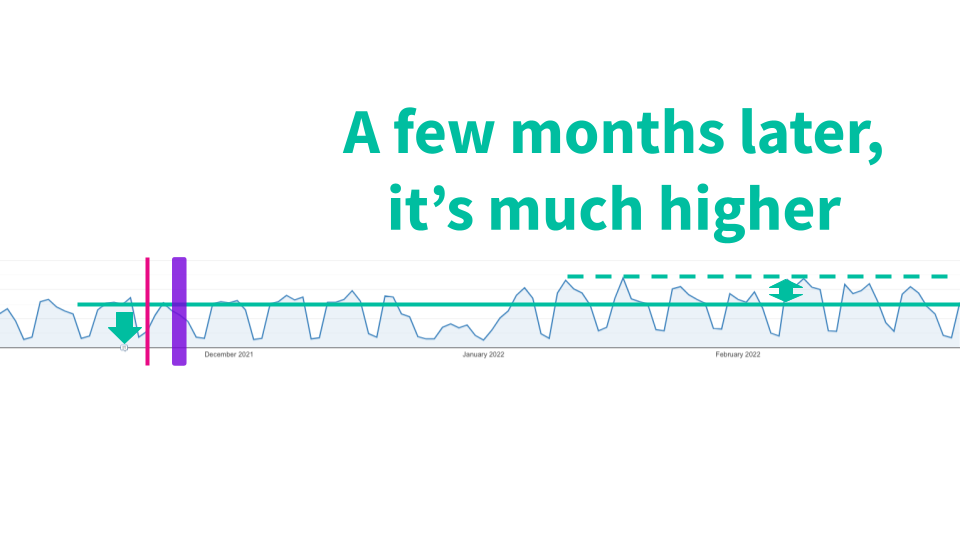

Of course, we could try segmenting the data further, looking year over year, and trying other tricks to figure out what’s going on, but given our growth, none of those are conclusive. Zooming out a bit, we see that after the initial drop, traffic was actually up over the subsequent months (once the holidays were out of the way):

All of this shows the importance of testing (and if you haven’t seen it in action yet, and you are responsible for organic search performance for a large website, you should get in touch for a demo).

How do we build a modern SEO strategy with this in mind?

Strategy exists at different granularity at different levels in the organisation. I’ve written before about:

- What the C-suite needs to know about SEO

- What marketing leadership needs to know about modern SEO

- Tactical lessons from 100s of SEO tests - for marketing practitioners - you can get individual test results delivered to your inbox by signing up here

Boiling this argument down to its fundamentals, though, I’m arguing that teams responsible for large websites should spend less effort, energy and budget on untested on-site changes, and should focus their strategy on rolling out winning tests (dunks!) and creating new content and site sections (3 pointers):

There is one more strategic insight: speed matters

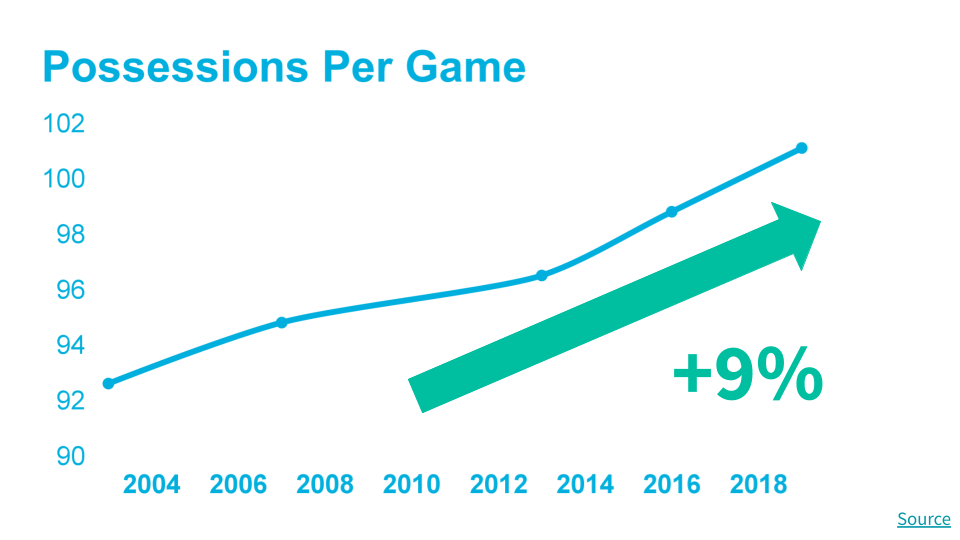

The same analysts who deconstructed the mid-range jumpshot also realised that when they had the edge over their competitors, because they were now likely to score a tiny fraction of a point more on average per possession, it was in their interest to speed up the game. And that’s what they did. Over the same 20-year period, the pace of the average game has increased to the point that teams now take on average 9% more shots than they did 20 years ago:

The equivalent insight for marketing teams is that testing cadence becomes a top-level SEO KPI. Academic research has shown that the outcomes of website changes are typically what is called “fat tailed”, which means that returns are not driven so much by discovering lots of small wins, but rather by big winners that are hard to predict. The same research shows that in this environment the incentive to run more tests (even at the expense of pre-filtering them, and at the margin at the expense of statistical power and confidence) is even stronger.
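To give a feel for what “fat tailed” means, here is a rough sketch that compares how much of the total gain comes from the few biggest winners under a fat-tailed and a thin-tailed distribution. This is an illustration under my own assumptions, not the method used in the research: the Pareto tail index `alpha`, the half-normal comparison, and the function names are all hypothetical choices, and the sketch only models the size of gains, not losses or measurement noise.

```python
import random


def top_share(gains, k):
    """Fraction of the total gain contributed by the k largest values."""
    ordered = sorted(gains, reverse=True)
    return sum(ordered[:k]) / sum(ordered)


def simulate(n_tests=1000, k=10, alpha=1.3, seed=0):
    """Return (fat-tailed share, thin-tailed share) for the top k of n_tests."""
    rng = random.Random(seed)
    # Fat-tailed: Pareto-distributed gains (alpha is an assumed tail index).
    fat = [rng.paretovariate(alpha) for _ in range(n_tests)]
    # Thin-tailed comparison: absolute value of a standard normal draw.
    thin = [abs(rng.gauss(0.0, 1.0)) for _ in range(n_tests)]
    return top_share(fat, k), top_share(thin, k)


if __name__ == "__main__":
    fat_share, thin_share = simulate()
    print(f"share of gains from top 10 of 1000 (fat-tailed): {fat_share:.1%}")
    print(f"share of gains from top 10 of 1000 (thin-tailed): {thin_share:.1%}")
```

In the fat-tailed case, a handful of outliers accounts for a much larger share of the total. That is the intuition behind running more tests to find them rather than polishing each hypothesis.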

What that means in practice is that SEO teams should be:

- Not trying too hard to predict which test hypotheses are “good” and rather focusing on iterating through the tests as fast as possible

- Explicitly seeking large winning outliers - ending experiments that are marginal early (and defaulting to deploy where appropriate)

- Using test data primarily to spot damaging changes and detect the big winners

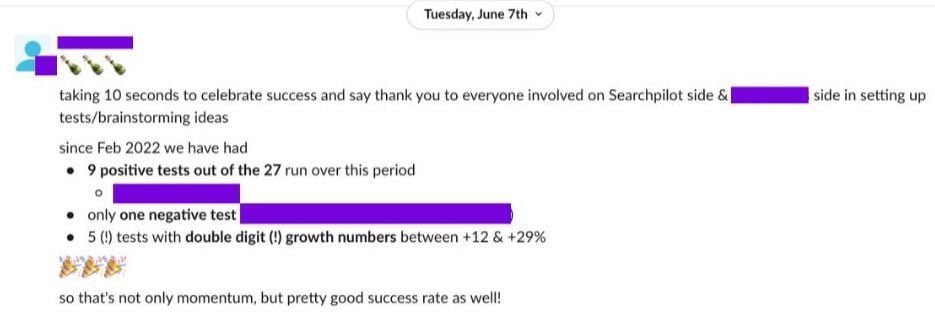

This is how we are working with our customers. This screenshot from Slack shows one customer celebrating scoring 5 winning SEO tests with double-digit % increases in just 4 months:

They did this by running an incredible 27 tests in this time!

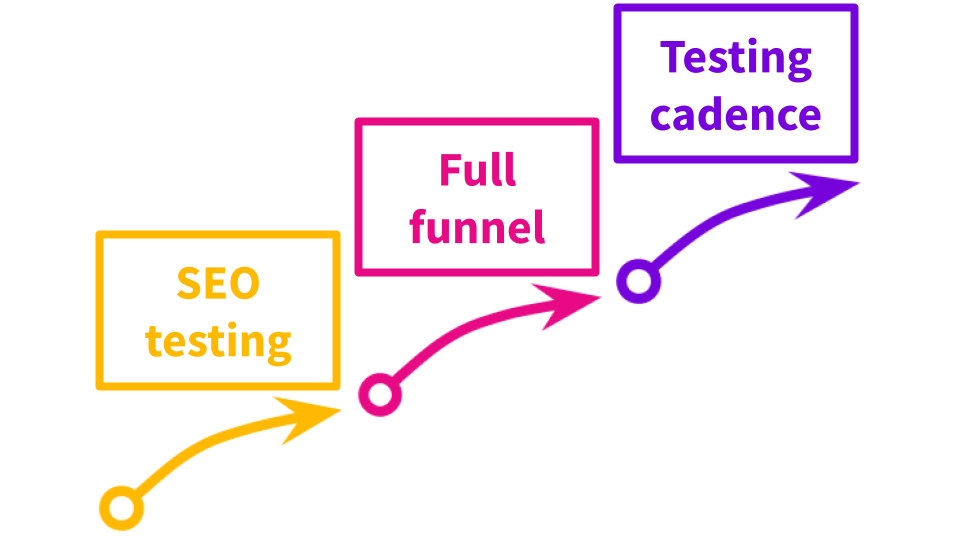

Putting all this together, what we see is that a focus on testing cadence is a long way up the SEO maturity curve, which goes something like “capable of SEO testing” → “ties SEO testing to revenue and business results” [see: full funnel testing] → “focuses on testing cadence as a KPI”:

Putting it all together

So. What should you do with this information?

If you’re won over by my argument that this is the future of SEO strategy:

Then here are a few things you can do:

- Share this blog post with your team.

- Sign up for our case studies by email.

- Invite me to speak to your team - if you have an in-house team of 3 or more, and a website suitable for SEO testing, I’ll give a free presentation on this topic (or lessons learned from 100s of SEO tests).

- If you are ready for the next step, get a demo of SearchPilot in action to see how you can add power, precision and pace to your SEO testing.

See me make this argument in presentation form

I recorded a video of me running through the whole presentation if you prefer to listen and watch: