Start here: how our SEO split tests work

If you aren't familiar with the fundamentals of how we run the controlled SEO experiments that form the basis of all our case studies, you might find it useful to read the explanation at the end of this article before digesting the details of the case study below. If you'd like to get a new case study by email every two weeks, sign up for our case study mailing list.

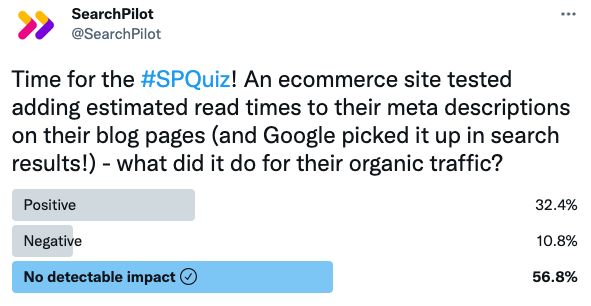

In this week’s #SPQuiz, we asked our followers what they thought happened when we added the ‘Estimated read time’ of some blog post pages to their meta descriptions. We let them know that in this instance, Google did display the change in the search results. Here’s what they thought:

Most of our followers thought this change would have no detectable impact on organic traffic, while nearly a third thought it would be positive.

This time, the majority was right! Even though Google displayed it in the search results, adding the ‘Estimated read time’ to the meta descriptions did not have a statistically significant impact on organic traffic.

You can read the full case study below.

The Case Study

A strong SEO test must target established ranking factors, organic click-through rates (independent of ranking changes), or a combination of the two. When we target click-through rates (CTRs), the most common levers are meta descriptions, title tags, or rich results from structured markup, such as FAQ, breadcrumb, or product markup.

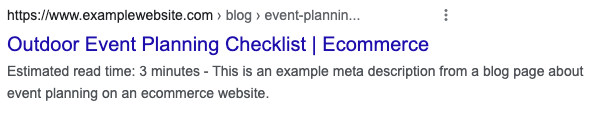

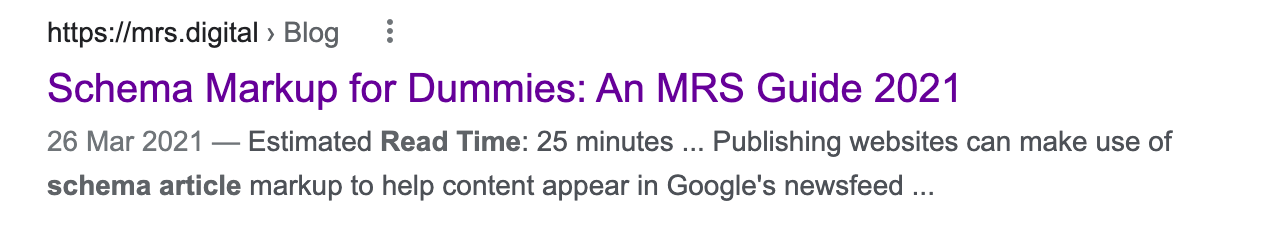

Earlier this year, an ecommerce customer noticed that some competitors’ blog post pages were getting what looked like an ‘Estimated read time’ snippet in the search engine results page (SERP):

However, when we looked more closely, we found that on this page and others with similar snippets, the ‘Estimated read time’ text was not coming from structured markup. Instead, Google was scraping it from the page content into the meta description shown in the SERP; it was not in the meta description in the raw HTML source code.

Many of our customer’s blog pages already displayed the ‘Estimated read time’ on the page, and they wanted to test whether having this content in the SERP would benefit their organic traffic. Google wasn’t scraping this content into their meta descriptions, and since the snippet didn’t seem to come from structured markup, we decided to test adding it to the start of their meta descriptions.

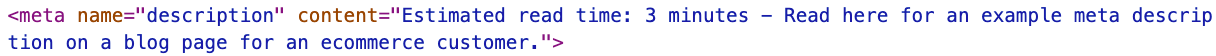

Here’s what the change looked like in the raw HTML source code:

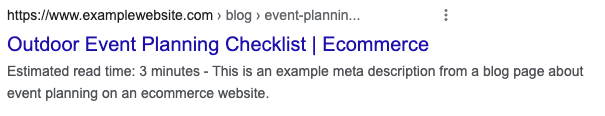
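The exact markup used in the test is only shown in the screenshot, so here is a minimal Python sketch of how a site might generate a meta description with the read time (already displayed on the page) moved to the front. The `prepend_read_time` helper and the "Estimated read time: N min | " prefix format are hypothetical choices for illustration, not the customer's actual implementation.

```python
import html


def prepend_read_time(description: str, minutes: int) -> str:
    """Return a <meta name="description"> tag with the read time prepended.

    Hypothetical format: "Estimated read time: N min | <original description>".
    The minutes value is assumed to come from the read time already shown on the page.
    """
    if minutes < 1:
        raise ValueError("minutes must be a positive integer")
    new_text = f"Estimated read time: {minutes} min | {description.strip()}"
    # Escape quotes and angle brackets so the text is safe inside the attribute.
    return f'<meta name="description" content="{html.escape(new_text, quote=True)}">'


if __name__ == "__main__":
    print(prepend_read_time("How to choose the right running shoes.", 5))
```

Putting the read time at the start keeps it visible even if Google truncates a long description in the SERP; as this test shows, though, Google may still rewrite or ignore the meta description entirely.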

During this test, Google respected the change, and we successfully got it to show up in the SERP as well.

Here’s an example:

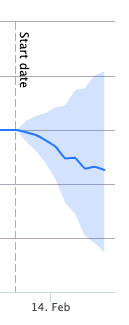

This was the impact on organic traffic:

Even though we were able to get the ‘Estimated read time’ snippet to show up in the SERP, this change, unfortunately, had no detectable impact on organic traffic.

We took Google scraping this content into other websites’ meta descriptions (rather than the site owners adding it themselves) as a sign that Google saw the content as valuable to searchers, and the fact that Google respected our updated meta descriptions seemed to support this theory. However, the competitors we saw with the snippet earlier this year no longer have it, so it may simply have been something Google was testing.

Although we weren’t able to gain any organic traffic here, this was still a good example of how SEO testing can help businesses be more agile in adapting to the constant updates Google makes to its algorithm. Agility didn’t produce a win this time, but we often find that it gives our customers a valuable edge over their competitors. That said, this test also shows that it doesn’t always pay off to simply chase whatever you see your competitors doing: a competitor getting a SERP feature or using a specific title tag format doesn’t necessarily mean it’s good for their performance.

To receive more insights from our testing, sign up for our case study mailing list, and please feel free to get in touch if you want to learn more about this test or our split-testing platform.

How our SEO split tests work

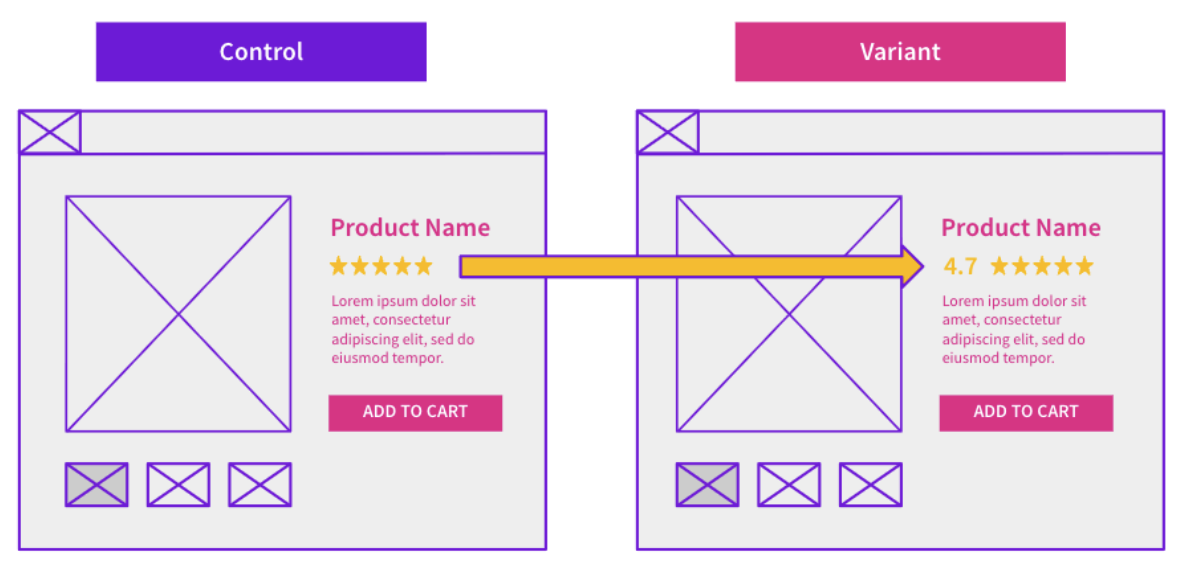

The most important thing to know is that our case studies are based on controlled experiments with control and variant pages:

- By detecting changes in performance of the variant pages compared to the control, we know that the measured effect was not caused by seasonality, sitewide changes, Google algorithm updates, competitor changes, or any other external impact.

- The statistical analysis compares the actual outcome to a forecast and comes with a confidence interval, so we know how confident we can be that the effect is real.

- We measure the impact on organic traffic in order to capture changes to rankings and/or changes to click-through rate.
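To make the idea of comparing an actual outcome to a forecast with a confidence interval more concrete, here is a minimal Python sketch under deliberately simple assumptions. The hypothetical `uplift_vs_forecast` function forecasts variant traffic by scaling control traffic by the pre-test variant/control ratio, treats days as independent, and uses a normal-approximation interval with z = 1.96. This is not SearchPilot's actual statistical model; it only shows how a forecast and a confidence interval fit together.

```python
import math
from statistics import mean, stdev


def uplift_vs_forecast(pre_control, pre_variant, test_control, test_variant, z=1.96):
    """Compare variant traffic to a control-based forecast (simplified sketch).

    Hypothetical model: during the test, variant traffic is forecast as
    control traffic multiplied by the pre-test variant/control ratio.
    Each argument is a list of daily organic sessions; test lists must
    have the same length and at least two days.
    Returns (uplift, lower, upper) as fractions of the mean forecast.
    """
    # Pre-test relationship between the two page groups.
    ratio = sum(pre_variant) / sum(pre_control)
    # Forecast: what the variant pages would have done without the change.
    forecast = [c * ratio for c in test_control]
    # Daily gap between actual and forecast variant traffic.
    diffs = [actual - expected for actual, expected in zip(test_variant, forecast)]
    mean_diff = mean(diffs)
    # Standard error of the mean daily gap, treating days as independent.
    se = stdev(diffs) / math.sqrt(len(diffs))
    base = mean(forecast)
    return (
        mean_diff / base,
        (mean_diff - z * se) / base,
        (mean_diff + z * se) / base,
    )


if __name__ == "__main__":
    # Made-up illustrative numbers, not data from this test.
    uplift, low, high = uplift_vs_forecast(
        pre_control=[200, 210, 190],
        pre_variant=[100, 105, 95],
        test_control=[200, 220, 180, 210],
        test_variant=[104, 106, 93, 104],
    )
    significant = low > 0 or high < 0
    print(f"uplift {uplift:+.1%}, 95% CI [{low:+.1%}, {high:+.1%}], significant: {significant}")
```

In this simplified model, an interval that spans zero means the variant's traffic cannot be distinguished from the forecast, which is what a "no detectable impact" result looks like.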

Read more about how SEO testing works or get a demo of the SearchPilot platform.