You can often learn as much from your losing SEO tests as from your winning ones.

This might sound counterintuitive, since most people start using SEO split-testing software, like SearchPilot, to find more “winning” opportunities for organic growth. However, there’s additional value in avoiding the near-misses that you might have rolled out if you hadn’t tested them first.

In this post, we share the top 4 things you can learn from negative SEO tests and a useful framework to help with deciding on the confidence level for each of your tests.

What’s a losing SEO test?

Most SEO professionals would say a winning test is one that shows a statistically significant increase in organic traffic from Google, and a losing test is one that shows a statistically significant decrease.

But a negative result from an SEO split test can keep you from harming your website. Let’s say a marketing leader is thinking about making a change to their website. They test this change with SearchPilot, learn the change would hurt their SEO, and decide not to deploy it. This is a huge win!

SEO teams can treat losing tests as a learning opportunity, something that many product teams are already familiar with. Stephen Pavlovich of Conversion.com recently shared this story from Amazon.com:

“Too many organizations only talk about winning experiments, rather than learning from the losers. This leads to poor practice as losing experiments can often outweigh the value of the winning ones. Take Amazon’s disastrous Fire smartphone, which failed to sell and cost the company $170 million. Much of the technology was repackaged into the Amazon Echo smart speaker, creating an incredibly successful product and channel for the company.”

4 key things you can learn from losing SEO tests

Marketing leaders invest in SEO split-testing software, like SearchPilot, to grow their traffic and find winning tests. But if you are a market leader in your category, one of the biggest benefits of testing is not implementing planned changes that turn out to hurt your SEO. Testing is an insurance policy that safeguards against hurting your rankings.

“Sometimes our customers’ biggest wins come from never deploying losers rather than from only deploying winners.” Will Critchlow - SearchPilot CEO

1. Running tests compounds over time

Just like money compounds over time, the more SEO tests you run, the more your learning compounds. You’ll have winners and losers, but the real goal is to make sure you never lose money or rankings by rolling out a losing change.

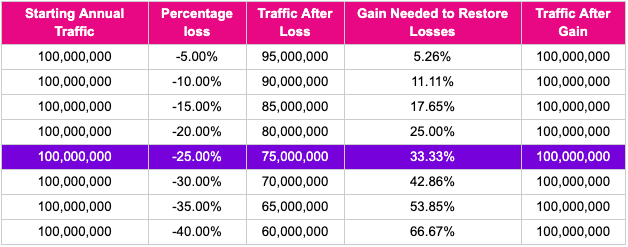

If you rolled out a change that caused a 25% drop in organic traffic, you would need a gain of about 33% just to get back to where you were before the change, because the gain applies to the smaller, post-drop traffic.
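The arithmetic behind that figure can be checked with a short sketch. The function name `required_recovery_gain` is hypothetical and used only for illustration; it assumes the drop and the later gain are both simple percentage changes in traffic.

```python
def required_recovery_gain(drop: float) -> float:
    """Return the fractional gain needed to get back to baseline
    after a fractional drop (for example, 0.25 for a 25% drop)."""
    if not 0 <= drop < 1:
        raise ValueError("drop must be at least 0 and less than 1")
    # After the drop, traffic is (1 - drop) of baseline.
    # Recovering means multiplying by 1 / (1 - drop),
    # which is a gain of drop / (1 - drop).
    return drop / (1 - drop)


for drop in (0.10, 0.25, 0.50):
    gain = required_recovery_gain(drop)
    print(f"{drop:.0%} drop -> {gain:.1%} gain needed to recover")
```

The larger the drop, the more lopsided the recovery: a 50% drop needs a 100% gain to undo.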

The punchline is that, ironically, not investing in SEO split-testing could be the most expensive choice you make. Ignoring SEO split-testing isn’t free.

2. Test often

Among the customers that we see getting the best results from testing, there’s a very strong correlation between the number of tests they run and the number of winners they get.

Will covered the advantages of focusing on your SEO testing cadence in his blog post about Moneyball as the future of SEO and the SEO maturity curve:

There’s a tendency to focus on getting the perfect idea or the perfect test hypothesis. Instead, it’s better to follow a scientific framework and process for designing SEO tests, one that lets you create good hypotheses and run more tests. More tests mean continuous learning, which matters because it is difficult to predict what Google will consider a good website change.

We also see different results for different industries. We even have a client running tests across the sites they operate in multiple countries (UK, US, Spain, and Germany). The result is that what works on the Spanish site doesn’t always work on the German site, and so forth.

3. Build a culture of continuous improvement

The goal of continuous testing is to reduce the chance of rolling out near-misses, or website changes that actively hurt your SEO. To do this, we recommend a few mindset shifts for both SEO professionals and marketing leaders:

- Letting go of perfectionism

- Creating an environment where it’s okay to fail or get it wrong

- Being willing to try new experiments

- Learning from each hypothesis, including how to set better hypotheses in the future

- Iterating on tests

- Identifying if your learnings can be used in other areas of the company

- Sharing the learnings internally across the company, which will also help increase buy-in for your process

The real value that you can provide is a culture where iterative testing and improvements are valued. You need to cultivate an environment where it is safe for your team to try new things, iterate, and fail as long as you learn from those mistakes.

4. Avoid the statistical significance trap

As we like to say at SearchPilot, we’re doing business, not science. While you absolutely need a scientific approach to testing, there is a real danger of letting statistical significance become a barrier to making changes to your website.

Most of your experiments are going to be negative or inconclusive. Some tests reveal there are no detectable differences in your results, or the results are trending one way or the other, but it’s not statistically significant.

For instance, it’s easy to keep dismissing tests because they reach only 85% or 90% statistical significance.

But that’s a mistake.

Your team will benefit more from the volume of tests run. Get through as many tests as you can, then look at the number of negative results you caught rather than at each test’s significance percentage.

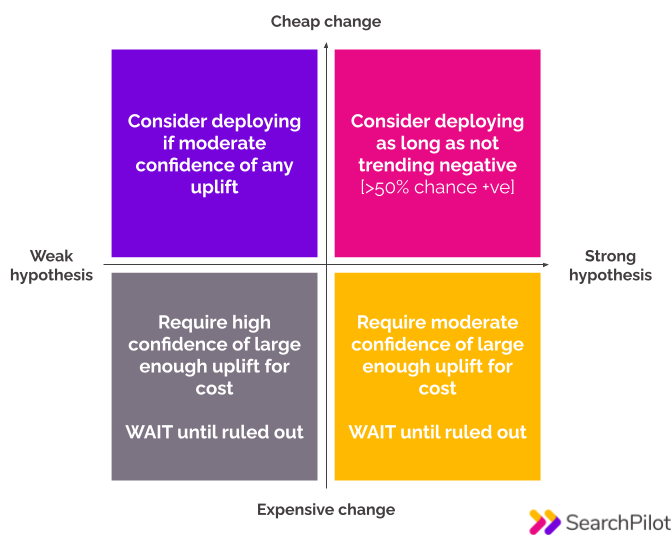

If you’re a startup or a mid-level company, then your goal should be to take more chances on tests that get 70-80% significance. It can be useful to plot your tests on this grid to decide, before the tests begin, what level of confidence you’re going to accept. If the hypothesis is very strong, it makes sense to set a lower threshold for success than you would for a high-risk or expensive change.
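One way to make that decision explicit is to write the thresholds down before a test starts. The sketch below is only an illustration: the two axes (hypothesis strength and change risk), the `THRESHOLDS` table, and the `should_deploy` helper are assumptions made for this example, and the specific numbers are placeholders rather than SearchPilot recommendations.

```python
# Illustrative thresholds only. Agree on your own values before testing.
# Keys are (hypothesis strength, risk or cost of the change).
THRESHOLDS = {
    ("strong", "low"): 0.70,
    ("strong", "high"): 0.90,
    ("weak", "low"): 0.80,
    ("weak", "high"): 0.95,
}


def should_deploy(confidence: float, hypothesis: str, risk: str,
                  positive_effect: bool = True) -> bool:
    """Return True if a test result clears the pre-agreed threshold.

    confidence: significance level the test reached, as a fraction.
    positive_effect: whether the measured effect was an improvement.
    """
    threshold = THRESHOLDS[(hypothesis, risk)]
    return positive_effect and confidence >= threshold


# The same 75% result is acceptable for a cheap change backed by a
# strong hypothesis, but not for an expensive, speculative one.
print(should_deploy(0.75, "strong", "low"))
print(should_deploy(0.75, "weak", "high"))
```

Fixing the table in advance keeps the threshold from drifting after the results are in.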

Take a more scientific approach to SEO split-testing with SearchPilot

In summary, you can often learn as much from your losing SEO tests as from your winners, if not more.

The key is to have a scientific framework and a well-defined process so you can roll out more tests and build a team culture of continuous learning.

Ready to take a more scientific approach to SEO split-testing? Request a free demo of SearchPilot.