Start here: how our SEO split tests work

If you aren't familiar with how we run the controlled SEO experiments behind all our case studies, you may find it useful to read the explanation at the end of this article before digesting the details of the case study below. If you'd like to get a new case study by email every two weeks, just enter your email address here.

Title tags remain one of the most influential SEO elements, shaping how pages are interpreted by search engines and how listings appear in the SERPs. Even small changes to wording, punctuation, or formatting can affect click-through rates, title rewrites, and perceived relevance.

In this week’s case study, we examine whether a subtle change in how brand names are written in product page title tags has any measurable impact on search performance.

The Case Study

Most e-commerce sites follow a standardised approach to brand inclusion in title tags: product information first, brand last, separated by a pipe or hyphen. This format is widely used and generally considered best practice, largely because it’s consistent and easy to scale.

But it raises an interesting question.

If title tags are meant to be read by humans as well as parsed by search engines, does replacing separators with natural language make any difference? And more specifically, does it change how users respond in the SERPs?
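To make the two formats concrete, here is a minimal sketch of how each title could be templated. The function names, the example product and brand, and the connector word "by" are all hypothetical, chosen only for illustration; they are not the wording used in the test.

```python
def separator_title(product: str, brand: str, separator: str = "|") -> str:
    """Conventional format: product information first, brand last, joined by a separator."""
    return f"{product} {separator} {brand}"


def natural_title(product: str, brand: str, connector: str = "by") -> str:
    """Natural-language format: the separator is replaced by a connecting word.

    The default connector "by" is an assumption made for this example only.
    """
    return f"{product} {connector} {brand}"


# Hypothetical product and brand, not taken from the case study.
control_title = separator_title("Trail Running Shoes", "ExampleBrand")
variant_title = natural_title("Trail Running Shoes", "ExampleBrand")
```

Keeping both formats as small template functions makes it easy to apply one to the control pages and the other to the variant pages without touching the rest of the title.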

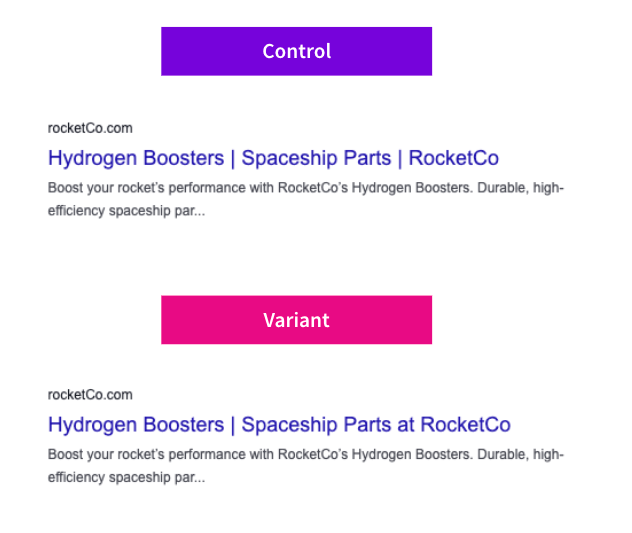

What Was Changed

To explore this, we tested a small but intentional change to how a well-known brand's name was included in its product detail page title tags: the separator before the brand name was replaced with natural-language wording.

There were a few reasons this felt worth exploring.

First, Google is known to rewrite title tags when it thinks they’re unclear, overly templated, or not particularly helpful to users. A more natural-sounding title might reduce the likelihood of rewrites and keep the brand name consistently visible in the SERPs.

Second, brand trust matters, particularly on product pages. For well-known brands, seeing the brand name clearly and naturally presented could reinforce credibility and encourage clicks, even if rankings don’t change.

And finally, this is exactly the kind of subtle SEO tweak that often sounds sensible in theory, but doesn’t always behave that way in practice.

Results

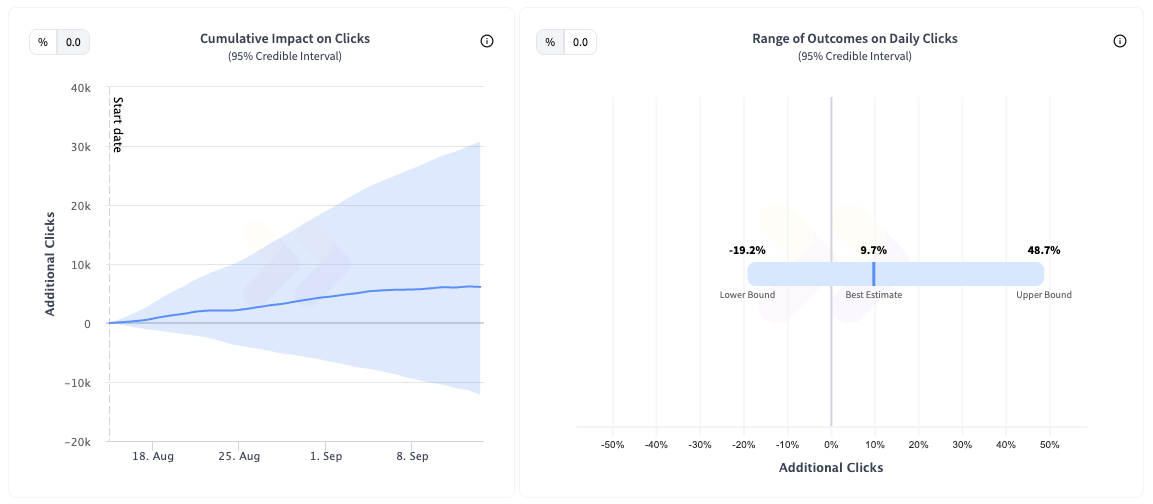

The result was inconclusive. Google picked up the change and displayed the variant titles in the SERPs, but organic traffic didn’t move in a statistically meaningful way.

While inconclusive results aren’t always exciting, they’re often revealing. This test suggests that minor wording tweaks in title tags don’t automatically translate into higher organic traffic, especially for established brands.

It’s also a useful reminder that readability improvements alone don’t guarantee performance gains. On this evidence, Google doesn’t appear to reward ‘more natural’ wording unless it materially improves relevance or user engagement.

As always, we recommend testing changes like this in your own context. What feels like a sensible improvement doesn’t always translate into measurable gains, particularly on mature sites. SEO is full of best practices, but only experiments tell you which ones actually make a difference.

To receive more insights from our testing, sign up for our case study mailing list, and please feel free to get in touch if you want to learn more about this test or our split testing platform more generally.

How our SEO split tests work

The most important thing to know is that our case studies are based on controlled experiments with control and variant pages:

- By detecting changes in performance of the variant pages compared to the control, we know that the measured effect was not caused by seasonality, sitewide changes, Google algorithm updates, competitor changes, or any other external impact.

- The statistical analysis compares the actual outcome to a forecast, and comes with a confidence interval so we know how certain we are the effect is real.

- We measure the impact on organic traffic in order to capture changes to rankings and/or changes to click-through rate.
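The idea of comparing the actual outcome to a forecast can be sketched roughly as follows. This is a deliberately simplified stand-in, not the model the platform uses: it assumes the variant pages would have kept the same share of control traffic they had before the test, and it uses a normal-approximation 95% interval. All function names and traffic figures are invented for illustration.

```python
from math import sqrt
from statistics import mean, stdev


def estimate_uplift(pre_control, pre_variant, test_control, test_variant, z=1.96):
    """Return (uplift, lower, upper) as fractions of the forecast variant traffic."""
    # Learn the pre-test relationship between the two page groups.
    ratio = sum(pre_variant) / sum(pre_control)
    # Forecast what the variant pages would have received without the change.
    forecast = [ratio * c for c in test_control]
    # Daily relative difference between actual and forecast traffic.
    diffs = [(actual - f) / f for actual, f in zip(test_variant, forecast)]
    uplift = mean(diffs)
    std_err = stdev(diffs) / sqrt(len(diffs))
    return uplift, uplift - z * std_err, uplift + z * std_err


def classify(lower, upper):
    """Label the result by whether the interval excludes zero."""
    if lower > 0:
        return "positive"
    if upper < 0:
        return "negative"
    return "inconclusive"


# Made-up daily organic sessions, not data from the case study.
pre_control = [1000, 1100, 900, 1050]
pre_variant = [500, 550, 450, 525]
test_control = [1000, 1200, 800, 1000]
test_variant = [510, 590, 404, 495]

uplift, lower, upper = estimate_uplift(
    pre_control, pre_variant, test_control, test_variant
)
result = classify(lower, upper)
```

Because the control pages experience the same seasonality and algorithm updates as the variant pages, those effects are absorbed into the forecast, and only the difference attributable to the change remains. When the interval spans zero, as it does for these invented numbers, the result is reported as inconclusive.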

Read more about how SEO testing works or get a demo of the SearchPilot platform.